Architecture

Virtual clusters are fully functional Kubernetes clusters, but how you deploy the control plane and worker nodes defines the tenancy model for the virtual cluster.

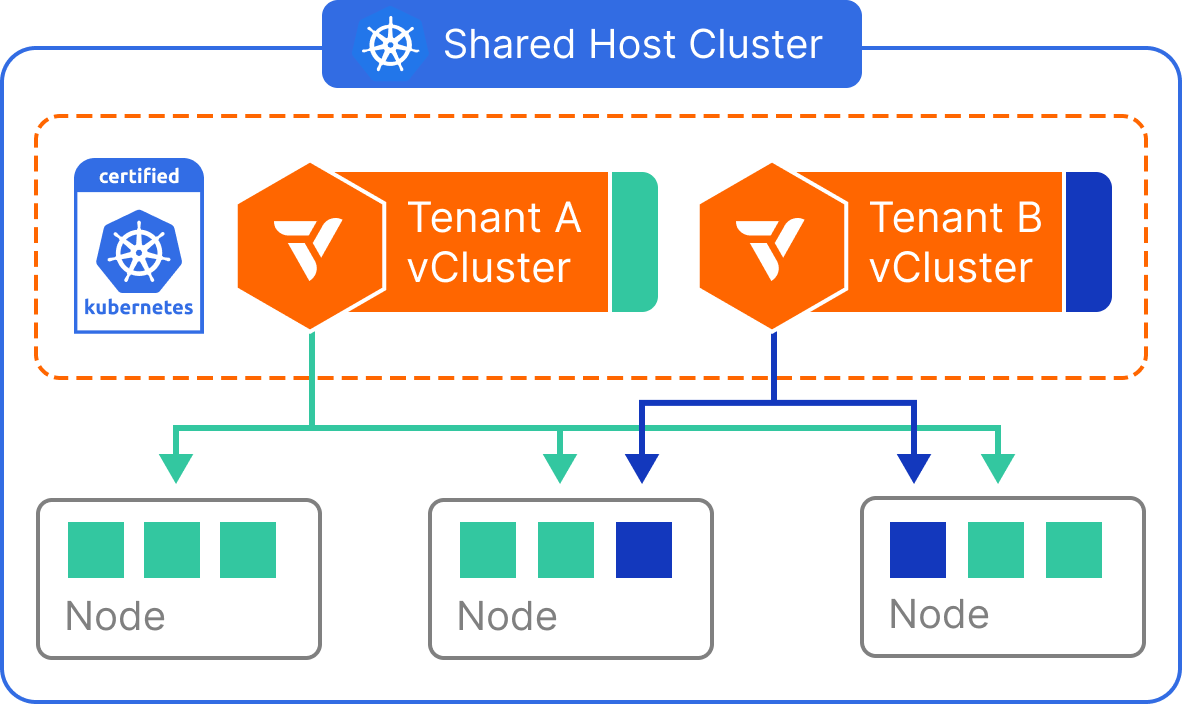

Shared Nodes

The shared nodes tenancy model allows multiple virtual clusters to run workloads on the same physical Kubernetes nodes. This configuration is ideal for scenarios where maximizing resource utilization is a top priority—especially for internal developer environments, CI/CD pipelines, and cost-sensitive use cases.

Each virtual cluster has its own isolated control plane, API server, and CRDs, but workloads are scheduled without node-level isolation. This setup helps platform teams deliver the benefits of vCluster (like per-tenant customization and faster provisioning) while minimizing infrastructure costs by sharing underlying compute across all tenants.

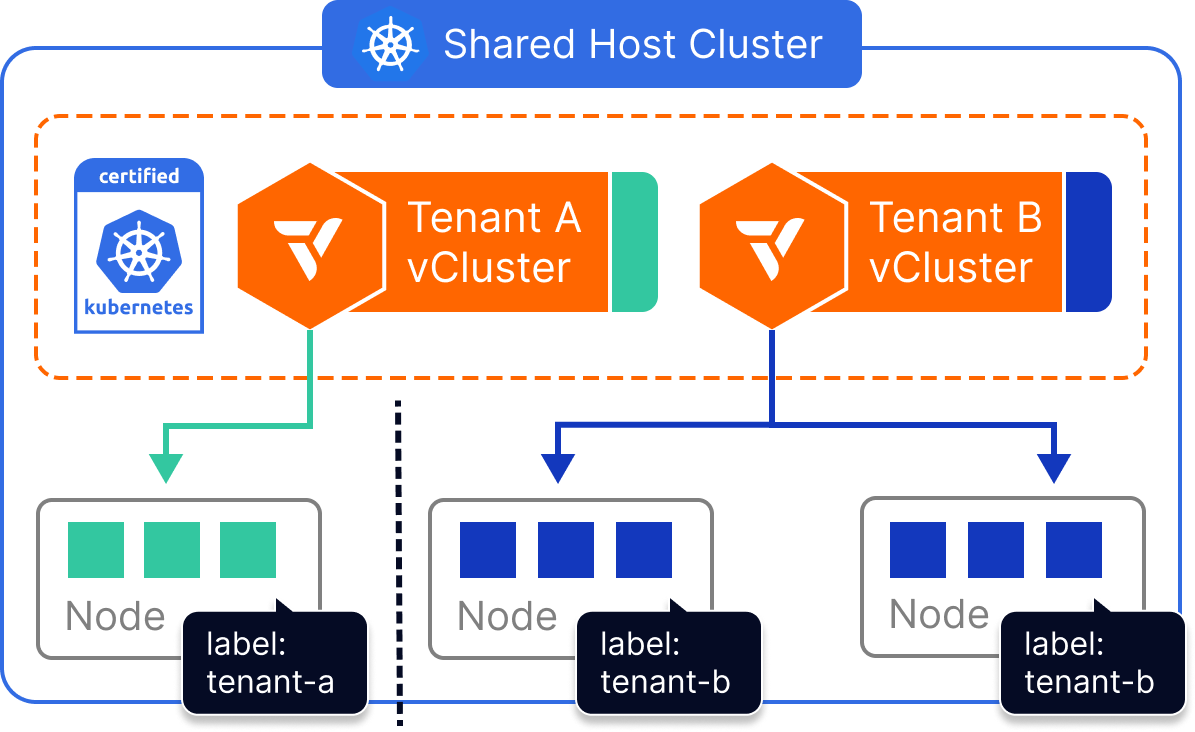

Dedicated Nodes

Dedicated Nodes allow platform teams to give each virtual cluster exclusive access to a set of physical nodes—without having to provision entirely separate clusters. By combining vCluster’s multi-tenant architecture with Kubernetes node selectors, workloads from each virtual cluster can be scoped to a specific group of labeled nodes, ensuring compute separation across tenants.

This approach enables strong operational boundaries and predictable performance, while maintaining all the benefits of shared infrastructure. It’s especially effective for teams who want dedicated compute for certain tenants, environments, or workloads—without duplicating every part of the platform stack.
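Scoping workloads with node selectors relies on standard Kubernetes `nodeSelector` matching: a node is eligible only if it carries every key/value pair in the selector. The minimal Python sketch below shows only that matching rule. The `tenant` label key, its values, and the helper names are hypothetical, and the sketch does not show how vCluster attaches a selector to synced pods.

```python
def matches_node_selector(node_labels: dict[str, str], node_selector: dict[str, str]) -> bool:
    """Return True if the node carries every key/value pair in the selector.

    An empty selector matches every node, as in Kubernetes.
    """
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def eligible_nodes(nodes: dict[str, dict[str, str]], node_selector: dict[str, str]) -> list[str]:
    """Return the sorted names of nodes whose labels satisfy the selector."""
    return sorted(
        name for name, labels in nodes.items()
        if matches_node_selector(labels, node_selector)
    )


if __name__ == "__main__":
    # Hypothetical node pool: node-a and node-b are labeled for different tenants.
    nodes = {
        "node-a": {"tenant": "team-a", "kubernetes.io/os": "linux"},
        "node-b": {"tenant": "team-b", "kubernetes.io/os": "linux"},
        "node-c": {"kubernetes.io/os": "linux"},
    }
    # Pods from team-a's virtual cluster would carry this selector.
    print(eligible_nodes(nodes, {"tenant": "team-a"}))  # ['node-a']
```

Because every pod from a given virtual cluster carries the same selector, that tenant's workloads can only land on its labeled node group. Unlabeled nodes such as `node-c` stay available for other uses.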

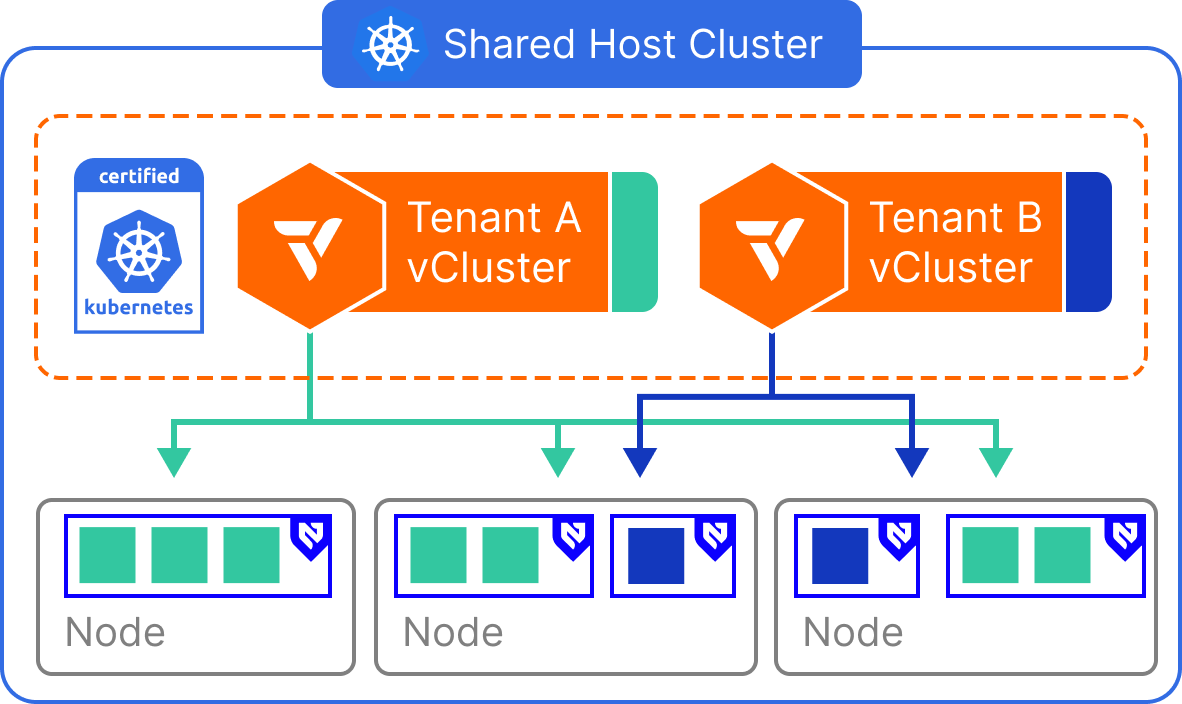

Virtual Nodes

Virtual Nodes virtualize node boundaries to add security and separation inside a shared cluster. They isolate tenant workloads without allocating dedicated physical nodes per tenant. This model uses node-level virtualization, through vNode, to create strong scheduling boundaries while still sharing the underlying infrastructure.

Each virtual cluster receives its own control plane and interacts with a virtualized view of the node environment. Workloads are scheduled onto tenant-scoped virtual nodes, which are translated into actual pods on the shared cluster. This lets teams achieve node-level isolation semantics, including taints and tolerations, without managing separate node pools.
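Taints and tolerations follow standard Kubernetes matching rules, and those rules are what give per-node isolation its meaning. The Python sketch below models only those rules. It does not model vNode's internals, and the `tenant=team-a` taint is a hypothetical example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str = ""
    effect: str = "NoSchedule"  # "NoSchedule", "PreferNoSchedule", or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # an empty key with operator "Exists" matches every taint key
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # an empty effect matches every effect


def tolerates(toleration: Toleration, taint: Taint) -> bool:
    """Apply the Kubernetes toleration-matching rules to a single taint."""
    if toleration.effect and toleration.effect != taint.effect:
        return False
    if toleration.operator == "Exists":
        return toleration.key == "" or toleration.key == taint.key
    # Operator "Equal": both key and value must match.
    return toleration.key == taint.key and toleration.value == taint.value


def can_schedule(tolerations: list[Toleration], taints: list[Taint]) -> bool:
    """A pod fits a node only if every hard taint is tolerated.

    PreferNoSchedule is a soft preference, so this sketch ignores it.
    """
    hard_taints = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard_taints)


if __name__ == "__main__":
    # Hypothetical taint reserving a virtual node for tenant team-a.
    node_taints = [Taint(key="tenant", value="team-a")]
    team_a_pod = [Toleration(key="tenant", value="team-a", effect="NoSchedule")]
    print(can_schedule(team_a_pod, node_taints))  # True
    print(can_schedule([], node_taints))          # False
```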

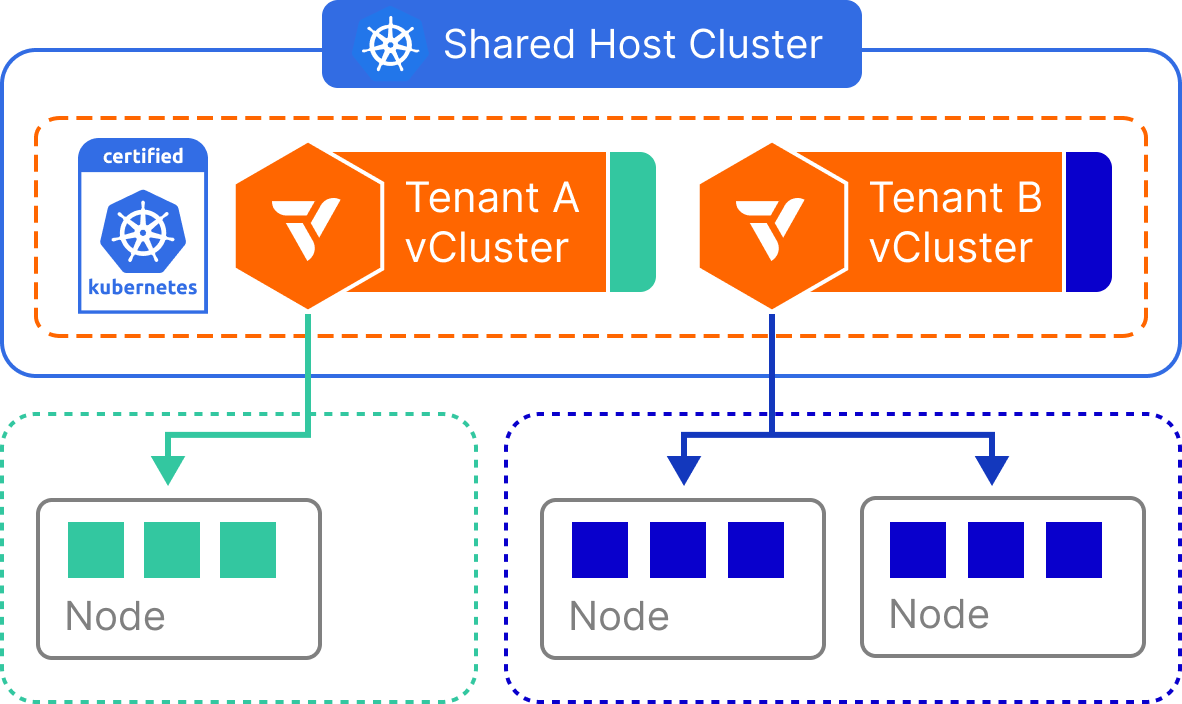

Private Nodes

Private Nodes provide the strongest isolation among vCluster tenancy models. In this setup, each virtual cluster runs inside its own dedicated Kubernetes host cluster—backed by physically separate nodes, a separate control plane, and separate infrastructure components like CNI and CSI drivers. From the tenant’s perspective, the environment behaves like a single-tenant Kubernetes cluster, with all platform services fully isolated.

This approach ensures that no tenant shares compute, networking, or storage with others. It’s best suited for highly sensitive workloads that require strict compliance, regulatory boundaries, or strong guarantees around performance, network isolation, and tenant autonomy.

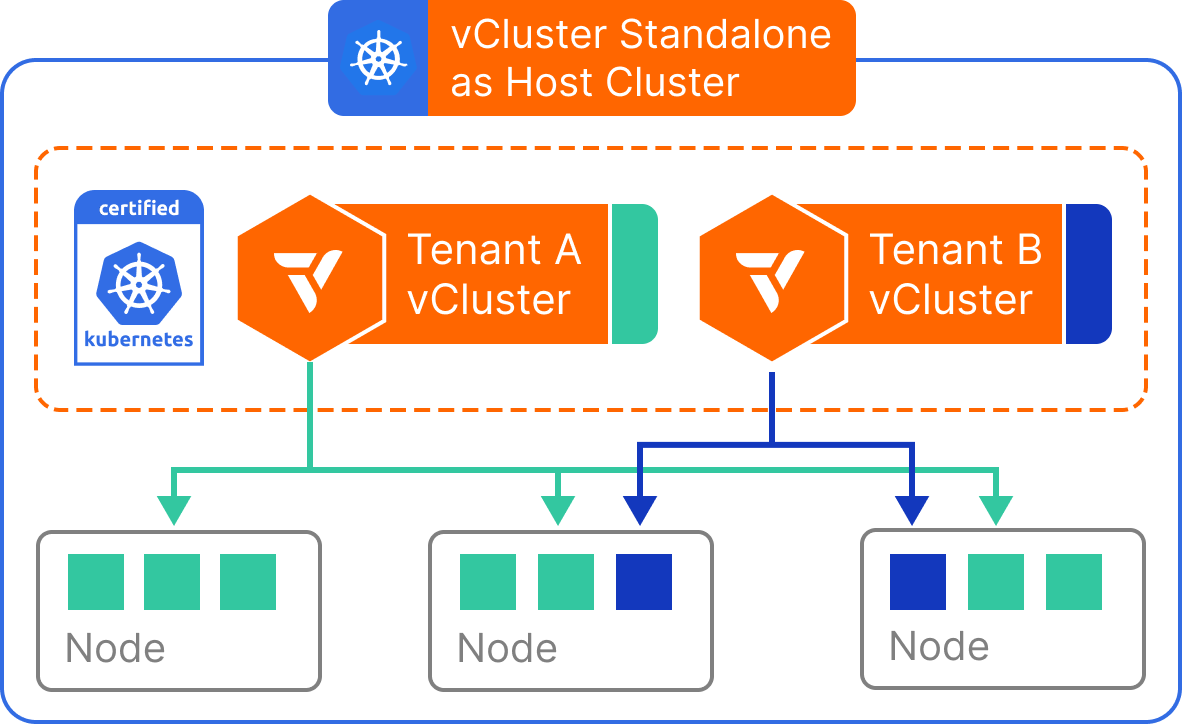

vCluster Standalone

vCluster Standalone eliminates the need for a pre-existing Kubernetes host cluster. Instead, the virtual cluster runs as a fully self-contained process—typically inside a single container or VM—capable of bootstrapping its own control plane and simulating the Kubernetes environment. This model is ideal for use cases where speed, portability, or independence are paramount. Whether you’re running workloads in CI pipelines, demos, local development setups, or air-gapped environments, vCluster Standalone offers a fast, lightweight way to provision isolated Kubernetes environments without relying on cluster-level infrastructure or orchestration.

vCluster components for host nodes


Virtual control plane as a container

vCluster creates isolated Kubernetes environments by deploying a virtual cluster that contains its own dedicated virtual control plane as a container. This control plane orchestrates operations within the virtual cluster and facilitates interaction with the underlying host cluster.

The virtual control plane is deployed as a single pod managed as a StatefulSet (default) or Deployment that contains the following components:

- A Kubernetes API server, which is the management interface for all API requests within the virtual cluster.

- A controller manager, which maintains the state of Kubernetes resources like pods, ensuring they match the desired configurations.

- A data store, where the API server persists all resources. The control plane either connects to it or mounts it. By default, vCluster uses an embedded SQLite database, but you can choose other data stores such as etcd, MySQL, or PostgreSQL.

- A syncer, which is a component that synchronizes resources between the virtual cluster and host cluster and facilitates workload management on the host's infrastructure.

- A scheduler, which is an optional component that schedules workloads. By default, vCluster reuses the host cluster scheduler to save computing resources. If you need to add node labels or taints to control scheduling, drain nodes, or use custom schedulers, you can enable the virtual scheduler.
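The controller manager follows the reconciliation pattern: it compares desired state with actual state and acts on the difference until the two match. The Python sketch below applies that pattern to a replica count. It is a generic illustration and not code from kube-controller-manager or vCluster. The function names and the ordinal pod-naming scheme are hypothetical.

```python
def reconcile(desired: int, running: list[str], prefix: str) -> tuple[list[str], list[str]]:
    """Compare desired and actual state; return (pods_to_create, pods_to_delete)."""
    diff = desired - len(running)
    if diff > 0:
        existing = set(running)
        to_create: list[str] = []
        index = 0
        # Fill the gap with the lowest unused ordinal names (real controllers use random suffixes).
        while len(to_create) < diff:
            candidate = f"{prefix}-{index}"
            if candidate not in existing:
                to_create.append(candidate)
            index += 1
        return to_create, []
    if diff < 0:
        # Remove the surplus; this sketch drops the highest-sorting names.
        return [], sorted(running)[diff:]
    return [], []


def run_until_converged(desired: int, running: list[str], prefix: str, max_rounds: int = 10) -> list[str]:
    """Repeat observe, diff, act until no further changes are needed."""
    for _ in range(max_rounds):
        to_create, to_delete = reconcile(desired, running, prefix)
        if not to_create and not to_delete:
            return running
        running = sorted((set(running) - set(to_delete)) | set(to_create))
    raise RuntimeError("state did not converge")


if __name__ == "__main__":
    print(run_until_converged(3, ["web-0"], "web"))                    # ['web-0', 'web-1', 'web-2']
    print(run_until_converged(1, ["web-0", "web-1", "web-2"], "web"))  # ['web-0']
```

Converging by repeated comparison, rather than by replaying individual events, lets the loop recover from missed or duplicated changes.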

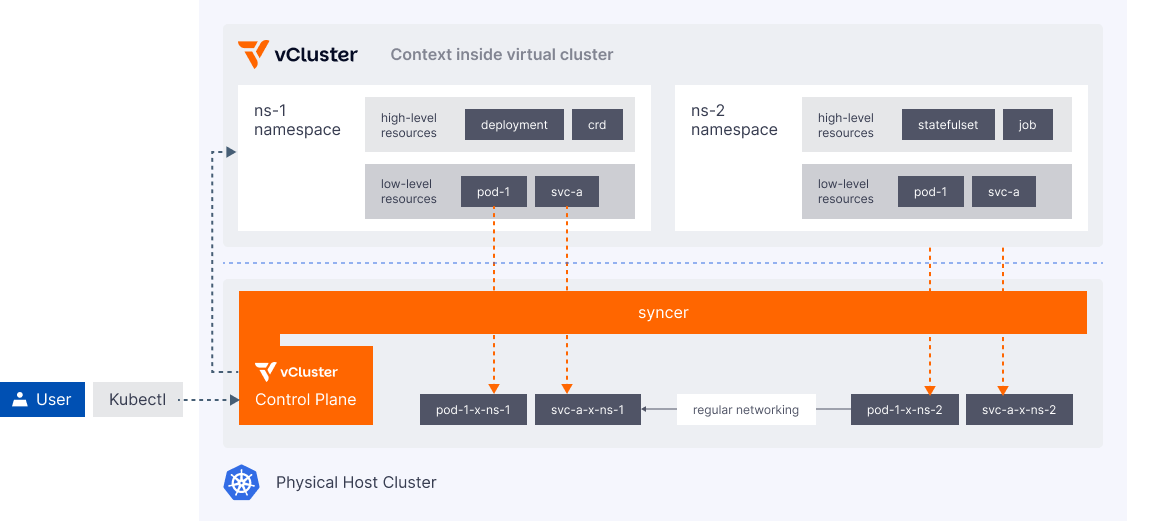

Syncer

Because a virtual cluster has no actual worker nodes or network of its own, it uses a syncer component to synchronize its pods to the underlying host cluster. The host cluster schedules each pod, and the syncer keeps the virtual cluster pod and the host cluster pod in sync.

Higher-level Kubernetes resources, such as Deployments, StatefulSets, and CRDs, exist only inside the virtual cluster and never reach the API server or data store of the host cluster.

By default, the syncer also synchronizes certain low-level resources that virtual cluster pods depend on, such as ConfigMaps and Secrets, to the host namespace. This way, pods scheduled by the host cluster can access those resources.
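The Python sketch below illustrates the downward direction of syncing: low-level objects are copied into the single host namespace, and higher-level kinds are skipped. Several parts of it are assumptions. `SYNCED_KINDS` is an illustrative subset, because the actual defaults depend on the vCluster version and configuration. The `<name>-x-<namespace>-x-<vcluster>` naming pattern mirrors how synced pods commonly appear on the host, but treat it as an assumption; the sketch also omits the truncation applied to long names. The cluster and namespace names are hypothetical.

```python
from typing import Optional

# Illustrative, assumed subset of kinds copied to the host namespace. Higher-level
# kinds such as Deployment or StatefulSet are deliberately absent: they stay in
# the virtual cluster's own API server and data store.
SYNCED_KINDS = frozenset({"Pod", "Service", "Endpoints", "ConfigMap", "Secret", "PersistentVolumeClaim"})


def host_name(name: str, virtual_namespace: str, vcluster_name: str) -> str:
    """Build a host-side name that cannot collide across virtual namespaces.

    Assumes the "<name>-x-<namespace>-x-<vcluster>" pattern; truncation of
    overly long names is omitted.
    """
    return f"{name}-x-{virtual_namespace}-x-{vcluster_name}"


def translate_to_host(obj: dict, vcluster_name: str, host_namespace: str) -> Optional[dict]:
    """Return the host-side copy of a virtual object, or None if it is not synced."""
    if obj["kind"] not in SYNCED_KINDS:
        return None
    return {
        "kind": obj["kind"],
        "name": host_name(obj["name"], obj["namespace"], vcluster_name),
        # Every virtual namespace collapses into the single host namespace.
        "namespace": host_namespace,
    }


if __name__ == "__main__":
    pod = {"kind": "Pod", "name": "nginx", "namespace": "default"}
    deployment = {"kind": "Deployment", "name": "nginx", "namespace": "default"}
    print(translate_to_host(pod, "my-vcluster", "vcluster-my-vcluster"))
    print(translate_to_host(deployment, "my-vcluster", "vcluster-my-vcluster"))  # None
```

Rewriting names matters because many virtual namespaces share one host namespace. Without the rewrite, two `nginx` pods from different virtual namespaces would collide on the host.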

While primarily focused on syncing from the virtual cluster to the host cluster, the syncer also propagates certain changes made in the host cluster back into the virtual cluster. This bi-directional syncing ensures that the virtual cluster remains up-to-date with the underlying infrastructure it depends on.
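For the upward direction, the syncer copies state that the host cluster owns, such as a pod's phase and IP, back into the virtual object. The Python sketch below shows that idea. The `HOST_OWNED_STATUS_FIELDS` list and the function name are illustrative assumptions, not vCluster's exact behavior.

```python
import copy

# Status fields the host cluster owns once the pod runs there. This list is an
# illustrative assumption, not vCluster's exact set.
HOST_OWNED_STATUS_FIELDS = ("phase", "podIP", "hostIP")


def sync_status_up(virtual_pod: dict, host_pod: dict) -> tuple[dict, bool]:
    """Copy host-owned status fields into a copy of the virtual pod.

    Returns the updated copy and whether anything changed, so a caller can skip
    writing to the virtual API server when both sides already agree.
    """
    updated = copy.deepcopy(virtual_pod)
    status = updated.setdefault("status", {})
    changed = False
    for field in HOST_OWNED_STATUS_FIELDS:
        value = host_pod.get("status", {}).get(field)
        if value is not None and status.get(field) != value:
            status[field] = value
            changed = True
    return updated, changed


if __name__ == "__main__":
    virtual_pod = {"name": "nginx", "status": {"phase": "Pending"}}
    host_pod = {"status": {"phase": "Running", "podIP": "10.244.1.7"}}
    updated, changed = sync_status_up(virtual_pod, host_pod)
    print(changed, updated["status"])  # True {'phase': 'Running', 'podIP': '10.244.1.7'}
```

Returning a change flag keeps the sync idempotent. When nothing differs, the syncer has nothing to write, which avoids update loops between the two clusters.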

The syncer documentation explains in more detail how the syncer works and how to sync resources to and from the host cluster for your use case.

Host cluster and namespace

Each virtual cluster runs as a regular StatefulSet (default) or Deployment inside a host cluster namespace. Everything that you create inside the virtual cluster lives inside the virtual cluster itself, inside the host namespace, or in both.

A host namespace can only contain a single virtual cluster. However, it is possible to run a virtual cluster within another virtual cluster, a concept known as vCluster nesting.

Networking in virtual clusters

Networking within virtual clusters is crucial for facilitating communication within the virtual environment itself, across different virtual clusters, and between the virtual and host clusters.

Ingress traffic

Instead of running a separate ingress controller in each virtual cluster to expose its services externally, vCluster can synchronize Ingress resources to the host cluster so that they are served by the host cluster's ingress controller. This lets virtual clusters share one ingress controller and simplifies management tasks such as configuring DNS for each virtual cluster.
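When several virtual clusters share one ingress controller, their synced Ingress rules are merged into a single routing table keyed by hostname. The Python sketch below shows that merge. The hostnames, namespaces, and translated service names are hypothetical, and the strict reject-on-conflict policy is a choice made for this sketch only. Real ingress controllers each apply their own conflict rules.

```python
from typing import NamedTuple


class SyncedIngressRule(NamedTuple):
    host: str            # external hostname, e.g. "app.team-a.example.com" (hypothetical)
    host_namespace: str  # host namespace the Ingress was synced into
    service: str         # translated Service name in that namespace
    port: int


def build_routing_table(rules: list[SyncedIngressRule]) -> dict[str, tuple[str, str, int]]:
    """Merge rules from many virtual clusters into one hostname -> backend table.

    A hostname claimed by two different backends is rejected, because one
    shared controller cannot route a hostname to both.
    """
    table: dict[str, tuple[str, str, int]] = {}
    for rule in rules:
        backend = (rule.host_namespace, rule.service, rule.port)
        existing = table.get(rule.host)
        if existing is not None and existing != backend:
            raise ValueError(f"hostname {rule.host!r} is claimed by more than one backend")
        table[rule.host] = backend
    return table


if __name__ == "__main__":
    rules = [
        SyncedIngressRule("app.team-a.example.com", "vcluster-a", "web-x-default-x-a", 80),
        SyncedIngressRule("app.team-b.example.com", "vcluster-b", "web-x-default-x-b", 80),
    ]
    for host, backend in sorted(build_routing_table(rules).items()):
        print(host, "->", backend)
```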

DNS in virtual clusters

By default, each virtual cluster deploys its own DNS service, CoreDNS. This DNS service lets pods within the virtual cluster resolve the IP addresses of other services running in the same virtual environment. The syncer supports this by mapping service DNS names within the virtual cluster to their corresponding IP addresses in the host cluster, following Kubernetes DNS naming conventions.
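The mapping above can be pictured as two steps. First, look up the IP that the host cluster assigned to each synced Service. Second, answer standard `<service>.<namespace>.svc.cluster.local` queries with that IP. The Python sketch below models only those two steps. It assumes the `<name>-x-<namespace>-x-<vcluster>` host naming pattern, uses hypothetical names and IPs, and ignores real CoreDNS behavior such as search domains, short names, and headless services.

```python
from typing import Optional


def build_dns_table(
    virtual_services: list[tuple[str, str]],
    host_cluster_ips: dict[str, str],
    vcluster_name: str,
) -> dict[tuple[str, str], str]:
    """Map (virtual namespace, service) to the ClusterIP of its synced host Service.

    Assumes the "<name>-x-<namespace>-x-<vcluster>" host naming pattern.
    """
    table: dict[tuple[str, str], str] = {}
    for namespace, service in virtual_services:
        host_service = f"{service}-x-{namespace}-x-{vcluster_name}"
        ip = host_cluster_ips.get(host_service)
        if ip is not None:  # skip services that have not been synced yet
            table[(namespace, service)] = ip
    return table


def resolve(fqdn: str, table: dict[tuple[str, str], str], cluster_domain: str = "cluster.local") -> Optional[str]:
    """Resolve "<service>.<namespace>.svc.<cluster_domain>" against the table."""
    suffix = f".svc.{cluster_domain}"
    if not fqdn.endswith(suffix):
        return None
    parts = fqdn[: -len(suffix)].split(".")
    if len(parts) != 2:
        return None
    service, namespace = parts
    return table.get((namespace, service))


if __name__ == "__main__":
    table = build_dns_table(
        [("default", "web")],
        {"web-x-default-x-my-vcluster": "10.96.45.12"},
        "my-vcluster",
    )
    print(resolve("web.default.svc.cluster.local", table))  # 10.96.45.12
```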

With vCluster Pro, CoreDNS can be embedded directly into the virtual control plane pod, minimizing the overall footprint of each virtual cluster.

Communication within a virtual cluster

- Pod to pod - Pods within a virtual cluster communicate using the host cluster's network infrastructure, with no additional configuration required. The syncer component ensures these pods maintain Kubernetes-standard networking.

- Pod to service - Services within a virtual cluster are synchronized to allow pod communication, leveraging the virtual cluster's CoreDNS for intuitive domain name mappings, as in regular Kubernetes environments.

- Pod to host cluster service - Services from the host cluster can be replicated into the virtual cluster, allowing pods within the virtual cluster to access host services using names that are meaningful within the virtual context.

- Pod to another virtual cluster service - Services can also be mapped between different virtual clusters. This is achieved through DNS configurations that direct service requests from one virtual cluster to another.

Communication from the host cluster

- Host pod to virtual cluster service - Host cluster pods can access services within a virtual cluster by replicating virtual cluster services to any host cluster namespace. This enables applications running inside the virtual cluster to be accessible to other workloads on the host cluster.
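Replicating Services between the host and a virtual cluster, in either direction, comes down to mapping a source `namespace/name` to a target `namespace/name`. The Python sketch below validates such a mapping. The `from`/`to` pair format is a hypothetical stand-in, so consult the vCluster configuration reference for the actual schema. The sketch does not cover DNS-based mapping between virtual clusters.

```python
def parse_ref(ref: str) -> tuple[str, str]:
    """Split a "namespace/name" reference, rejecting malformed input."""
    namespace, sep, name = ref.partition("/")
    if not sep or not namespace or not name or "/" in name:
        raise ValueError(f"expected 'namespace/name', got {ref!r}")
    return namespace, name


def build_replication_map(mappings: list[dict[str, str]]) -> dict[tuple[str, str], tuple[str, str]]:
    """Turn hypothetical from/to pairs into a source -> target lookup.

    Each source may be replicated once, and two sources may not share a target,
    since a replicated Service can only point at one backend.
    """
    result: dict[tuple[str, str], tuple[str, str]] = {}
    for mapping in mappings:
        source = parse_ref(mapping["from"])
        target = parse_ref(mapping["to"])
        if source in result:
            raise ValueError(f"source {mapping['from']!r} is mapped more than once")
        if target in result.values():
            raise ValueError(f"target {mapping['to']!r} is mapped more than once")
        result[source] = target
    return result


if __name__ == "__main__":
    # Host -> virtual: expose a (hypothetical) host Prometheus inside the virtual cluster.
    from_host = build_replication_map([{"from": "monitoring/prometheus", "to": "default/prometheus"}])
    # Virtual -> host: expose a (hypothetical) virtual cluster Service to host workloads.
    to_host = build_replication_map([{"from": "default/api", "to": "shared-apis/team-a-api"}])
    print(from_host)
    print(to_host)
```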