Private Nodes

This feature is only available for the following:

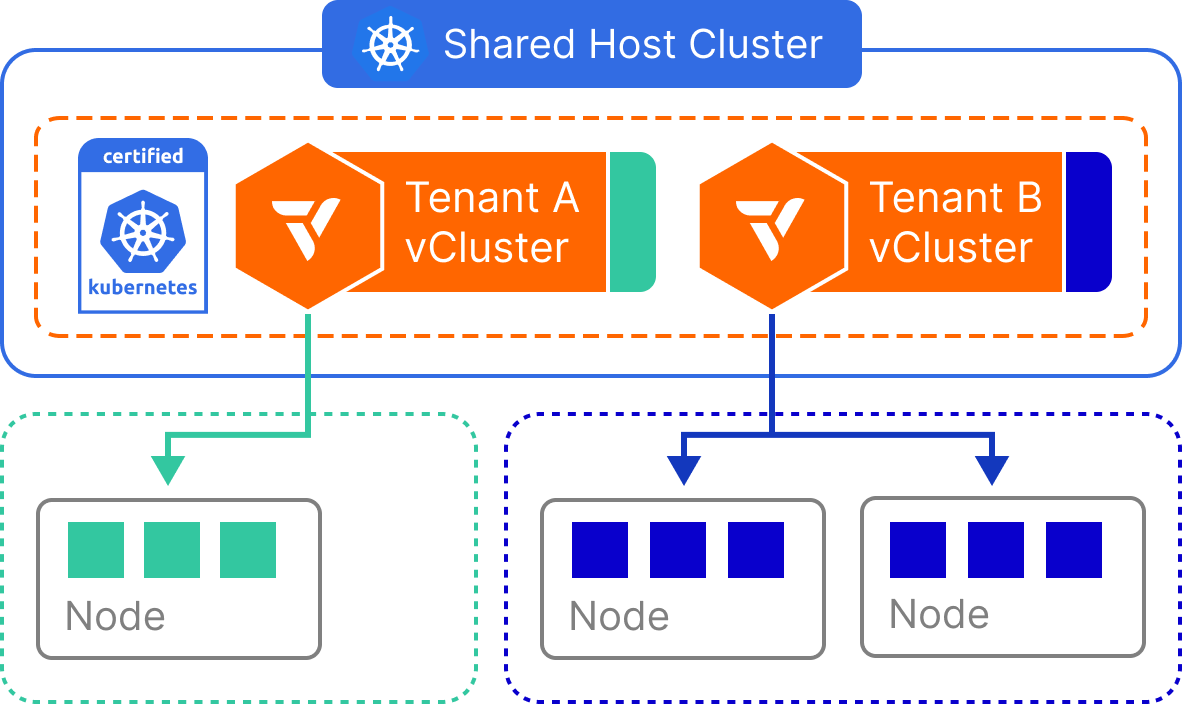

Private nodes are a tenancy model for vCluster. Instead of sharing the host cluster's worker nodes, individual worker nodes are joined directly to a vCluster and act as its own worker nodes.

Because these are real Kubernetes nodes and no host cluster worker nodes are used, vCluster does not sync any resources to the host cluster. All workloads run directly on the attached nodes as if they were native to the virtual cluster.

Conceptually, the control plane creates a virtual Kubernetes context, and the workloads run on separate physical nodes that exist entirely within that virtual context.

One key benefit of using private nodes is that vCluster can automatically manage the lifecycle of the worker nodes.

To allow nodes to join the virtual cluster, the vCluster control plane must be exposed and reachable from the nodes. One option is to expose it through a LoadBalancer service and have the worker nodes use that service's endpoint. vCluster does not need direct network access to the nodes. Instead, it uses Konnectivity to tunnel requests, such as `kubectl logs`, from the control plane to the nodes.
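Before you join a node, you can confirm that the node can reach the exposed control plane endpoint. The following Python sketch is a minimal TCP preflight check. It is not part of the vCluster CLI. The function name and the default port 443 are assumptions, so substitute the address and port of your own LoadBalancer service.

```python
import socket


def control_plane_reachable(host: str, port: int = 443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds.

    `host` is the externally reachable address of the vCluster control plane
    (for example, the LoadBalancer IP or hostname). Port 443 is an assumed
    default; change it if your service listens on a different port.
    """
    try:
        # create_connection resolves `host` and tries each returned address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts, and DNS failures are all OSError subclasses.
        return False
```

For example, running `control_plane_reachable("203.0.113.10")` on a candidate node tests a documentation-range placeholder address. A successful result only shows that the TCP port is open. It does not verify TLS or join credentials.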

How private nodes can be provisioned

Private nodes can be provisioned in two different ways:

- Manually provisioned - Nodes that were provisioned outside of vCluster. These nodes are joined to vCluster using a vCluster CLI command.

- Automatically provisioned - Nodes that are provisioned on-demand based on the vCluster configuration and resource requirements. vCluster is connected to vCluster Platform and references a node provider defined in vCluster Platform.

vcluster.yaml configuration

To create a vCluster with private nodes, enable the feature and set a few additional options so that the virtual cluster is ready for worker nodes to join.

```yaml
# Enable private nodes
privateNodes:
  enabled: true

# vCluster control plane options
controlPlane:
  distro:
    k8s:
      image:
        tag: v1.31.2 # Kubernetes version you want to use
  service:
    spec:
      type: LoadBalancer # If you want to expose vCluster using LoadBalancer (Recommended option)

# Networking configuration
networking:
  # Specify the pod CIDR
  podCIDR: 10.64.0.0/16
  # Specify the service CIDR
  serviceCIDR: 10.128.0.0/16
```
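The pod CIDR and service CIDR should not overlap each other, and neither should overlap the network your nodes use. This is general Kubernetes networking practice, not a rule specific to vCluster. The following Python sketch checks for overlaps using only the standard `ipaddress` module. The function name and the optional `node_cidrs` parameter are assumptions made for illustration.

```python
import ipaddress
from typing import Iterable, List


def check_networking(pod_cidr: str, service_cidr: str,
                     node_cidrs: Iterable[str] = ()) -> List[str]:
    """Return one message per overlapping pair of ranges; an empty list means no overlap.

    ip_network() raises ValueError for malformed CIDRs or CIDRs with host bits set.
    """
    ranges = {
        "podCIDR": ipaddress.ip_network(pod_cidr),
        "serviceCIDR": ipaddress.ip_network(service_cidr),
    }
    # Optional node networks (hypothetical input) join the comparison.
    for i, cidr in enumerate(node_cidrs):
        ranges[f"node network {i}"] = ipaddress.ip_network(cidr)

    names = list(ranges)
    problems = []
    # Compare every pair of ranges exactly once, node networks included.
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if ranges[first].overlaps(ranges[second]):
                problems.append(
                    f"{first} {ranges[first]} overlaps {second} {ranges[second]}"
                )
    return problems
```

For the values in the example above, `check_networking("10.64.0.0/16", "10.128.0.0/16")` returns an empty list. Passing a node network such as `["10.0.0.0/8"]` would report that it overlaps both ranges.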

Scheduling enabled by default

When private nodes are enabled, scheduling is handled by the virtual cluster and the virtual scheduler is automatically enabled. You do not need to manually configure this in your vcluster.yaml.

```yaml
# Enabling private nodes enables the virtual scheduler
# Disabling the virtual scheduler is not possible
controlPlane:
  advanced:
    virtualScheduler:
      enabled: true
```

Other vCluster feature limitations

When private nodes are enabled, certain vCluster features are automatically disabled or unavailable. If you include these options in your vcluster.yaml, they are ignored or might cause configuration errors.

The following features are not available:

| Configuration | Reason |
|---|---|
| `sync.*` | No resource syncing between virtual and host clusters |
| `integrations.*` | Integrations depend on syncing functionality |
| `networking.replicateServices` | Services are not replicated to host |
| `controlPlane.distro.k3s` | Only standard Kubernetes (k8s) is supported |
| `controlPlane.coredns.embedded: true` | Embedded CoreDNS conflicts with custom CNI |
| `controlPlane.advanced.virtualScheduler.enabled: false` | Virtual scheduler cannot be disabled |
| `sleepMode.*` | No ability to sleep workloads or control plane |

```yaml
# These configurations are NOT supported with private nodes

# Resource syncing between virtual and host clusters is disabled
sync:
  services:
    enabled: false # Services cannot be synced to host cluster
  secrets:
    enabled: false # Secrets cannot be synced to host cluster
  # All other sync options (pods, configmaps, etc.) are also disabled

# Platform integrations require syncing functionality
integrations:
  metricsServer:
    enabled: false # Metrics server integration not supported
  # All other integrations are disabled due to sync dependency

# Service replication to host cluster is not available
networking:
  replicateServices:
    enabled: false # Services run entirely within virtual cluster

# Distribution restrictions
controlPlane:
  distro:
    k3s:
      enabled: false # k3s distribution not supported
    k8s:
      enabled: true # Only standard Kubernetes works
  # DNS configuration limitations
  coredns:
    embedded: false # Embedded CoreDNS conflicts with custom CNI options
  advanced:
    # Virtual scheduler is required for workload placement
    virtualScheduler:
      enabled: true # Always enabled (cannot be disabled)
  # Host Path Mapper is not supported
  hostPathMapper:
    enabled: false

# Sleep mode is not available
sleepMode:
  enabled: false
```
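To catch these options before deploying, you can lint a configuration after parsing it into a dictionary (for example, with a YAML library). The following Python sketch is a hypothetical linter, not a vCluster tool. It checks only the options listed above, and it approximates the `sync.*`, `integrations.*`, and `sleepMode.*` wildcards as "any nested `enabled: true`".

```python
from typing import Any, List


def _get(cfg: Any, path: str) -> Any:
    """Follow a dotted path such as "controlPlane.coredns.embedded"; None if missing."""
    node = cfg
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def _any_enabled(node: Any) -> bool:
    """True if `node` or any mapping nested inside it sets enabled: true."""
    if isinstance(node, dict):
        if node.get("enabled") is True:
            return True
        return any(_any_enabled(value) for value in node.values())
    if isinstance(node, list):
        return any(_any_enabled(item) for item in node)
    return False


def find_unsupported(cfg: dict) -> List[str]:
    """List unsupported options that a parsed vcluster.yaml sets alongside private nodes."""
    if _get(cfg, "privateNodes.enabled") is not True:
        return []  # the limitations only apply when private nodes are enabled
    problems = []
    # Wildcard-style sections: flag anything enabled below these keys.
    for section in ("sync", "integrations", "sleepMode", "networking.replicateServices"):
        if _any_enabled(_get(cfg, section)):
            problems.append(f"{section}: not available with private nodes")
    if _get(cfg, "controlPlane.distro.k3s.enabled") is True:
        problems.append("controlPlane.distro.k3s: only k8s is supported")
    if _get(cfg, "controlPlane.coredns.embedded") is True:
        problems.append("controlPlane.coredns.embedded: conflicts with custom CNI")
    if _get(cfg, "controlPlane.advanced.virtualScheduler.enabled") is False:
        problems.append("controlPlane.advanced.virtualScheduler: cannot be disabled")
    return problems
```

The example configuration above contains only `enabled: false` values for the unsupported features, so it produces no findings when `privateNodes.enabled` is `true`.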

Network policies

If you use network policies, you must allow traffic from the private nodes into the virtual cluster control plane.

```yaml
privateNodes:
  enabled: true

controlPlane:
  service:
    spec:
      type: LoadBalancer

policies:
  networkPolicy:
    enabled: true
    controlPlane:
      ingress:
        - from:
            # Allow incoming traffic from the load balancer internal IP address.
            # This example allows incoming traffic from any address; use the load balancer's internal CIDR instead.
            - ipBlock:
                cidr: 0.0.0.0/0
```
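You can generate the rule for the load balancer's internal CIDR instead of allowing `0.0.0.0/0`. The following Python sketch builds the same `policies` structure as the example above for a single CIDR and refuses a `/0` prefix. The helper names and the example range `10.0.16.0/20` are assumptions. Use the internal range that your load balancer actually sends traffic from.

```python
import ipaddress


def control_plane_ingress_values(lb_internal_cidr: str) -> dict:
    """Return a `policies` values fragment that allows ingress from one CIDR only."""
    net = ipaddress.ip_network(lb_internal_cidr)  # ValueError on malformed input
    if net.prefixlen == 0:
        # 0.0.0.0/0 (or ::/0) would allow traffic from every address.
        raise ValueError("pass the load balancer's internal CIDR, not a /0 range")
    return {
        "policies": {
            "networkPolicy": {
                "enabled": True,
                "controlPlane": {
                    "ingress": [
                        {"from": [{"ipBlock": {"cidr": str(net)}}]},
                    ],
                },
            },
        },
    }


def source_allowed(source_ip: str, lb_internal_cidr: str) -> bool:
    """True if traffic from `source_ip` falls inside the generated ipBlock range."""
    return ipaddress.ip_address(source_ip) in ipaddress.ip_network(lb_internal_cidr)
```

YAML 1.2 is essentially a superset of JSON, so the output of `json.dumps(control_plane_ingress_values("10.0.16.0/20"), indent=2)` can usually be saved as an additional values file. `source_allowed` lets you spot-check that the load balancer's internal address falls inside the chosen range.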

Features available with private nodes

The following vCluster features are available when using private nodes as your tenancy model:

Create a vCluster with private nodes

Create the vCluster control plane with private nodes enabled

The vCluster control plane must be created with the private nodes feature enabled.

Private nodes must be enabled when the vCluster is first installed. An existing vCluster that uses the host cluster's nodes cannot be switched to private nodes.

```yaml
# Enable private nodes
privateNodes:
  enabled: true

# vCluster control plane options
controlPlane:
  distro:
    k8s:
      image:
        tag: v1.31.2 # Kubernetes version you want to use
  service:
    spec:
      type: LoadBalancer # If you want to expose vCluster via LoadBalancer (recommended option)

# Networking configuration
networking:
  # Specify the pod CIDR
  podCIDR: 10.64.0.0/16
  # Specify the service CIDR
  serviceCIDR: 10.128.0.0/16
```
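An existing vCluster cannot be moved to private nodes, so a deployment pipeline might reject a config change that turns the feature on for a vCluster that is already installed. The following Python sketch is a hypothetical guard and is not part of vCluster. It assumes that you can load both the currently applied config and the new config as dictionaries. It only covers the direction stated here (host nodes to private nodes).

```python
from typing import Optional


def _private_nodes_enabled(cfg: dict) -> bool:
    # `privateNodes:` with no value parses to None, so fall back to {}.
    return bool((cfg.get("privateNodes") or {}).get("enabled", False))


def check_private_nodes_change(current: Optional[dict], desired: dict) -> None:
    """Raise ValueError if an installed vCluster would be switched to private nodes.

    `current` is the config of the running vCluster (None for a fresh install),
    and `desired` is the config about to be applied.
    """
    if current is None:
        return  # initial installation: private nodes may be enabled here
    if not _private_nodes_enabled(current) and _private_nodes_enabled(desired):
        raise ValueError(
            "privateNodes.enabled must be set at initial installation; "
            "create a new vCluster instead of upgrading this one"
        )
```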

Follow this guide to create an access key and set up the vCluster for deployment using Helm or other tools.

Join worker nodes

Before joining each worker node, ensure that it is ready to join the vCluster.

Once a worker node has been attached to a vCluster, pods can be scheduled and start to run on these nodes.
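After joining nodes, you can check which ones are able to accept pods. The following Python sketch is an illustration, not vCluster tooling. It assumes that `kubectl` is configured with a context that points at the virtual cluster. It treats a node as usable when its `Ready` condition is `"True"` and the node is not cordoned (`spec.unschedulable`).

```python
import json
import subprocess
from typing import List


def schedulable_ready_nodes(node_list: dict) -> List[str]:
    """Names of nodes that report Ready=True and are not cordoned."""
    names = []
    for node in node_list.get("items", []):
        if node.get("spec", {}).get("unschedulable"):
            continue  # skip cordoned nodes; the scheduler avoids placing new pods on them
        conditions = node.get("status", {}).get("conditions", [])
        if any(c.get("type") == "Ready" and c.get("status") == "True"
               for c in conditions):
            names.append(node["metadata"]["name"])
    return names


def load_nodes_from_kubectl() -> dict:
    """Run `kubectl get nodes -o json` against the current kubeconfig context."""
    result = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)
```

For example, `schedulable_ready_nodes(load_nodes_from_kubectl())` lists the joined nodes that can currently receive new pods.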

Manage vCluster

Finally, once the vCluster is up and running, manage the lifecycle of the vCluster and its worker nodes.