Demystifying Karpenter on GCP: The Complete Setup Guide


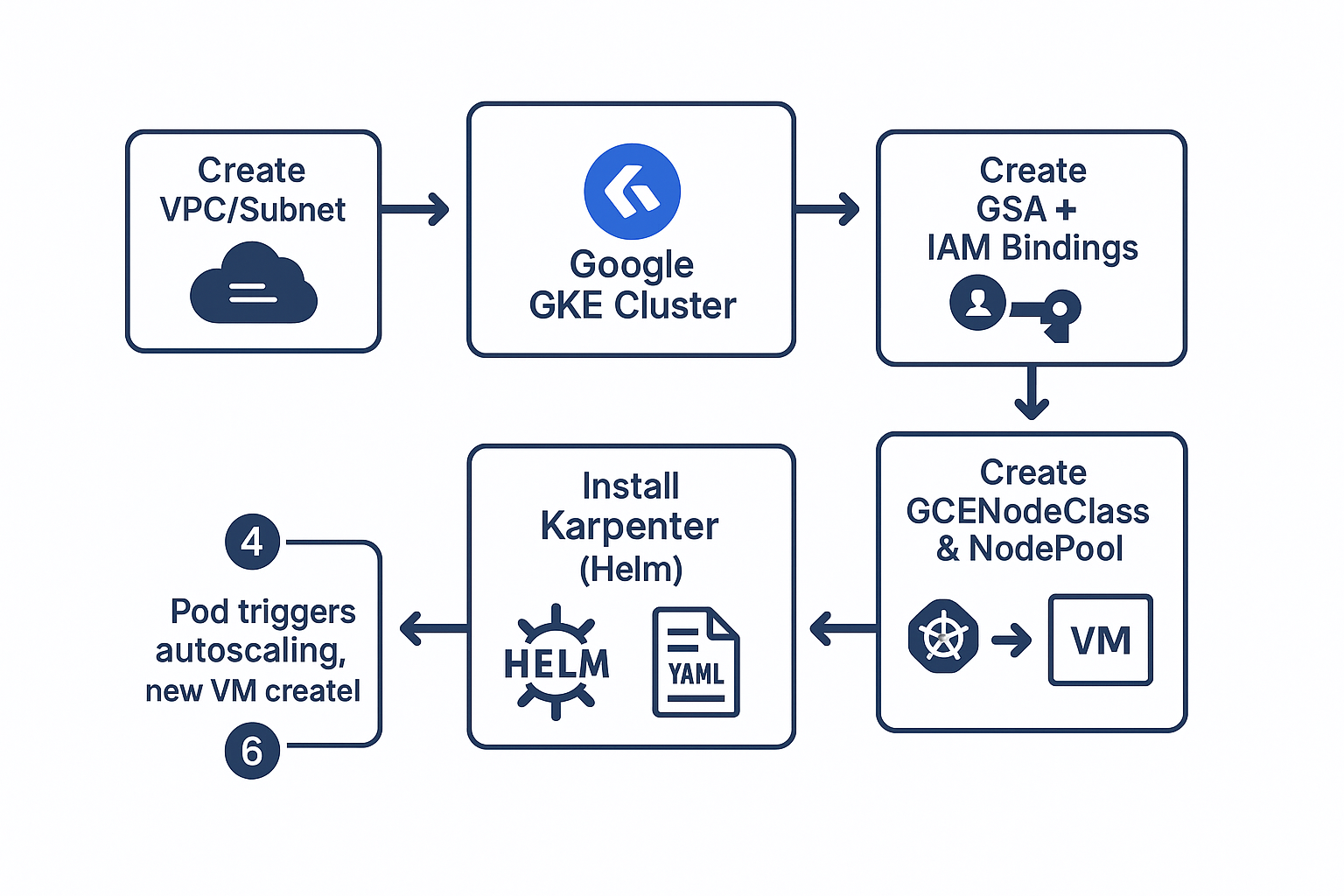

Karpenter has become the gold standard for Kubernetes autoscaling on AWS, but what about Google Cloud Platform (GCP)? If you've ever struggled with managing node pools, waited for the cluster autoscaler to kick in, or fallen back to over-provisioning just to be safe, then this post is for you.

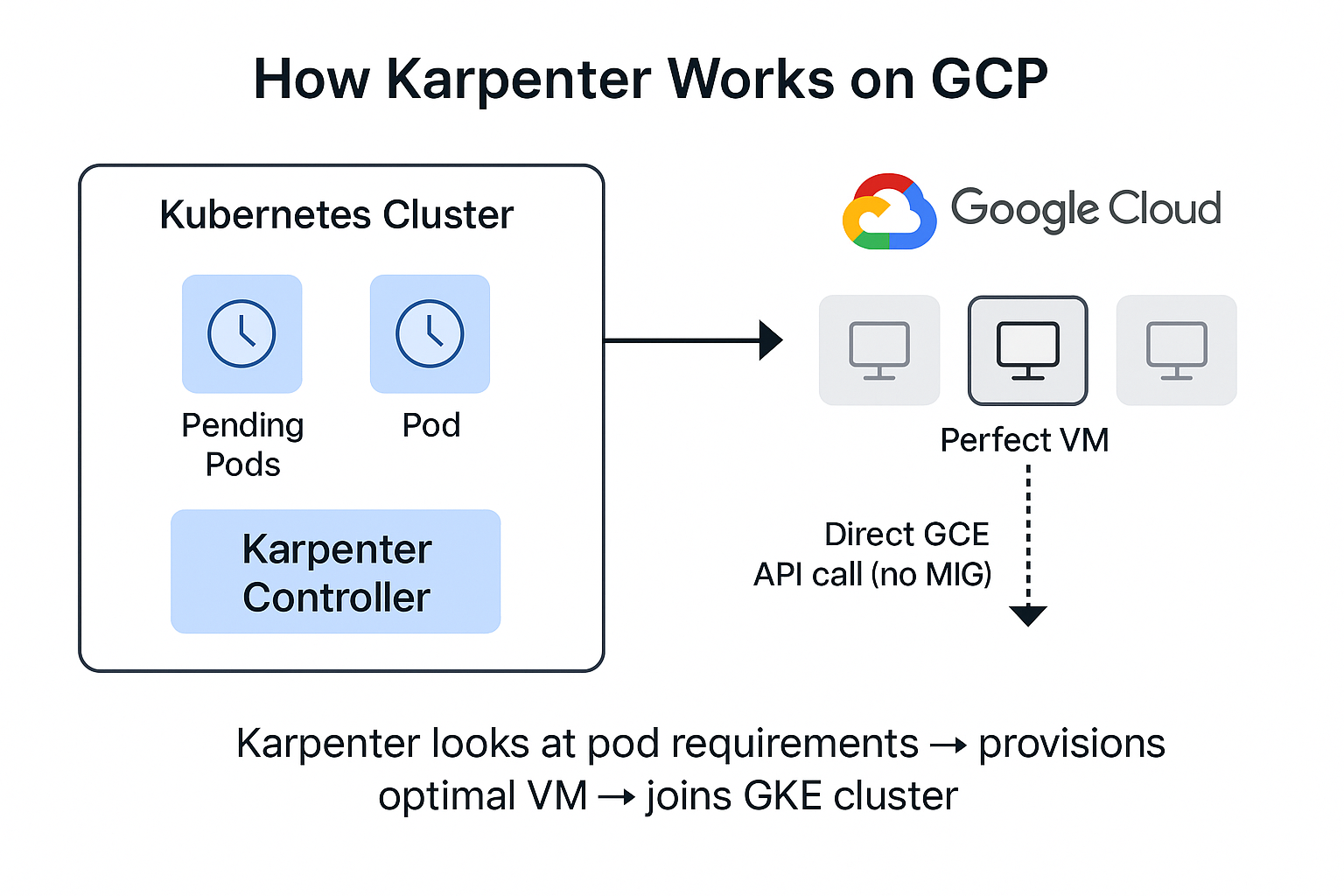

Karpenter is a "groupless" autoscaler. Unlike the traditional Kubernetes Cluster Autoscaler, which scales up by increasing the size of specific node groups (like an ASG in AWS or a MIG in GCP), Karpenter looks directly at the unschedulable pods in your cluster. It calculates the exact compute resources they need and provisions the "perfect" node to fit them in seconds.
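To make the "calculates the exact compute resources" step concrete, here is a deliberately simplified bash sketch of the core arithmetic: convert CPU requests to millicores, sum them, and check whether the total fits a candidate node's allocatable CPU. The function names are hypothetical, and Karpenter's real scheduling simulation is far richer (memory, daemonset overhead, affinity rules, pricing); this only illustrates the fit check.

```bash
# Hypothetical helpers illustrating the "does it fit?" arithmetic.
# Sketch only: handles whole cores ("2") and millicores ("681m"),
# not fractional cores such as "0.5".

# Convert a Kubernetes CPU quantity to integer millicores.
to_millicores() {
  local q="$1"
  if [[ "$q" == *m ]]; then
    echo "${q%m}"
  else
    echo $(( q * 1000 ))
  fi
}

# Succeed (exit 0) if the summed CPU requests fit within the node's allocatable CPU.
# Usage: fits_cpu <allocatable> <request>...
fits_cpu() {
  local allocatable="$1"; shift
  local total=0 r
  for r in "$@"; do
    total=$(( total + $(to_millicores "$r") ))
  done
  (( total <= $(to_millicores "$allocatable") ))
}
```

For example, `fits_cpu 940m 681m && echo "fits"` prints `fits`, because 681 millicores of requests fit within 940 millicores of allocatable CPU.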

Today, we’re going to walk through setting up the Karpenter Provider for GCP (currently in preview/alpha). We will cover everything from IAM permissions to running your first workload.

Start by setting the environment variables used throughout this demo; the commands that follow use them to create the GKE cluster:

export PROJECT_ID=$(gcloud config get-value project)

export CLUSTER_NAME="karpenter-demo"

export REGION="us-central1"

export ZONE="us-central1-a"

export VPC_NAME="karpenter-vpc"

export SUBNET_NAME="karpenter-subnet"
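Every later command interpolates these variables, and an empty value (for example, if no default project is configured in gcloud) produces confusing errors far from the cause. A small guard like the hypothetical `require_vars` below, a sketch using bash indirect expansion, fails fast instead:

```bash
# Hypothetical guard: report every named variable that is unset or empty,
# and return non-zero if any are missing.
# Uses bash indirect expansion (${!name}) to read each variable by name.
require_vars() {
  local name missing=0
  for name in "$@"; do
    if [[ -z "${!name:-}" ]]; then
      echo "error: \$${name} is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `require_vars PROJECT_ID CLUSTER_NAME REGION ZONE VPC_NAME SUBNET_NAME` prints an error for each missing variable and returns non-zero.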

Command:

gcloud services enable \
  compute.googleapis.com \
  container.googleapis.com \
  iam.googleapis.com \
  cloudresourcemanager.googleapis.com
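Enabling an API that is already enabled succeeds, so the command above is safe to re-run. If a later step still complains about a disabled service, the hypothetical helper below reports which of the four APIs are missing. It reads the enabled service names on stdin (for example from `gcloud services list --enabled --format="value(config.name)"`), which keeps it testable without calling GCP.

```bash
# Hypothetical helper: print each required API that does not appear in the
# enabled-services list read from stdin (one service name per line).
# Returns non-zero if anything is missing.
missing_apis() {
  local enabled api status=0
  enabled=$(cat)
  for api in compute.googleapis.com container.googleapis.com \
             iam.googleapis.com cloudresourcemanager.googleapis.com; do
    # -x: match the whole line, -F: treat the API name as a fixed string
    if ! grep -qxF "$api" <<< "$enabled"; then
      echo "$api"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `gcloud services list --enabled --format="value(config.name)" | missing_apis`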

Output:

Operation "operations/acat.p2-160206084141-e637ce69-91a8-4441-89e0-1498db95bd05" finished successfully.

Command (create the VPC):

echo "Creating VPC and Subnet..."
gcloud compute networks create $VPC_NAME \
  --subnet-mode=custom \
  --project=$PROJECT_ID

Output:

Creating VPC and Subnet...

Created [https://www.googleapis.com/compute/v1/projects/saiyam-project/global/networks/karpenter-vpc].

NAME           SUBNET_MODE  BGP_ROUTING_MODE  IPV4_RANGE  GATEWAY_IPV4
karpenter-vpc  CUSTOM       REGIONAL

Command (create the subnet):

gcloud compute networks subnets create $SUBNET_NAME \
  --network=$VPC_NAME \
  --range=10.0.0.0/20 \
  --region=$REGION \
  --project=$PROJECT_ID

Output:

Created [https://www.googleapis.com/compute/v1/projects/saiyam-project/regions/us-central1/subnetworks/karpenter-subnet].

NAME              REGION       NETWORK        RANGE        STACK_TYPE  IPV6_ACCESS_TYPE  INTERNAL_IPV6_PREFIX  EXTERNAL_IPV6_PREFIX
karpenter-subnet  us-central1  karpenter-vpc  10.0.0.0/20  IPV4_ONLY
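The /20 primary range matters for Karpenter because every node it launches takes a primary IP from this subnet (with `--enable-ip-alias`, Pod and Service IPs come from secondary ranges instead). A quick sketch of the arithmetic, assuming Google Cloud's reservation of four addresses in each subnet's primary IPv4 range; the helper name is hypothetical:

```bash
# Hypothetical helper: usable primary IPv4 addresses in a GCP subnet with the
# given prefix length. Google Cloud reserves 4 addresses per primary range
# (network, default gateway, second-to-last, and broadcast).
usable_subnet_ips() {
  local prefix="$1"
  echo $(( (1 << (32 - prefix)) - 4 ))
}

usable_subnet_ips 20   # prints 4092: an upper bound on node VMs in this subnet
```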

Command:

echo "Creating GKE Cluster..."
gcloud container clusters create $CLUSTER_NAME \
  --zone=$ZONE \
  --project=$PROJECT_ID \
  --num-nodes=1 \
  --machine-type=e2-standard-4 \
  --network=$VPC_NAME \
  --subnetwork=$SUBNET_NAME \
  --enable-ip-alias \
  --workload-pool=${PROJECT_ID}.svc.id.goog \
  --release-channel=regular \
  --scopes=cloud-platform

Output:

Creating GKE Cluster...

Note: The Kubelet readonly port (10255) is now deprecated. Please update your workloads to use the recommended alternatives. See https://cloud.google.com/kubernetes-engine/docs/how-to/disable-kubelet-readonly-port for ways to check usage and for migration instructions.

Creating cluster karpenter-demo in us-central1-a... Cluster is being health-checked (Kubernetes Control Plane is healthy)...done.

Created [https://container.googleapis.com/v1/projects/saiyam-project/zones/us-central1-a/clusters/karpenter-demo].

To inspect the contents of your cluster, go to: https://console.cloud.google.com/kubernetes/workload_/gcloud/us-central1-a/karpenter-demo?project=saiyam-project

kubeconfig entry generated for karpenter-demo.

NAME            LOCATION       MASTER_VERSION      MASTER_IP       MACHINE_TYPE   NODE_VERSION        NUM_NODES  STATUS
karpenter-demo  us-central1-a  1.33.5-gke.1201000  34.136.174.164  e2-standard-4  1.33.5-gke.1201000  1          RUNNING

Command:

gcloud container clusters get-credentials $CLUSTER_NAME \
  --zone=$ZONE \
  --project=$PROJECT_ID

Output:

Fetching cluster endpoint and auth data.

kubeconfig entry generated for karpenter-demo.

Command:

kubectl get nodes

Output:

NAME                                            STATUS   ROLES    AGE     VERSION
gke-karpenter-demo-default-pool-8ec24245-99h5   Ready    <none>   2m47s   v1.33.5-gke.1201000

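We need a Google Service Account (GSA) that Karpenter will use to talk to GCP APIs. We will create the account, grant it IAM roles, and allow Karpenter's Kubernetes service account to impersonate it through Workload Identity, so no key needs to be exported.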

export KARPENTER_SA_NAME="karpenter-sa"

gcloud iam service-accounts create $KARPENTER_SA_NAME \
  --description="Karpenter Controller Service Account" \
  --display-name="Karpenter"

Output:

Created service account [karpenter-sa].

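Karpenter needs broad permissions: Compute Admin to create and delete VM instances, Kubernetes Engine Admin to work with the GKE cluster, and Service Account User so that the VMs it launches can run as a service account.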

# Assign Compute Admin
gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member="serviceAccount:${KARPENTER_SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com" \
  --role="roles/compute.admin" \
  --condition=None

# Assign Kubernetes Engine Admin
gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member="serviceAccount:${KARPENTER_SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com" \
  --role="roles/container.admin" \
  --condition=None

# Assign Service Account User
gcloud projects add-iam-policy-binding $PROJECT_ID \
  --member="serviceAccount:${KARPENTER_SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com" \
  --role="roles/iam.serviceAccountUser" \
  --condition=None

# Allow the Kubernetes service account (karpenter-system/karpenter) to act as the Google Service Account
gcloud iam service-accounts add-iam-policy-binding \
  "${KARPENTER_SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com" \
  --role roles/iam.workloadIdentityUser \
  --member "serviceAccount:${PROJECT_ID}.svc.id.goog[karpenter-system/karpenter]"
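The three project-level bindings differ only in the role, so they can also be written as a loop. This is a sketch of an equivalent helper (the name `grant_karpenter_roles` is hypothetical) that stops at the first failed binding:

```bash
# Hypothetical helper: grant the three project roles Karpenter's GSA needs.
# Usage: grant_karpenter_roles <project-id> <service-account-name>
grant_karpenter_roles() {
  local project="$1" sa_name="$2"
  local sa_email="${sa_name}@${project}.iam.gserviceaccount.com"
  local role
  for role in roles/compute.admin roles/container.admin roles/iam.serviceAccountUser; do
    gcloud projects add-iam-policy-binding "$project" \
      --member="serviceAccount:${sa_email}" \
      --role="$role" \
      --condition=None || return 1
  done
}
```

Usage: `grant_karpenter_roles "$PROJECT_ID" "$KARPENTER_SA_NAME"`. The Workload Identity binding is a different command (on the service account, not the project), so it stays separate.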

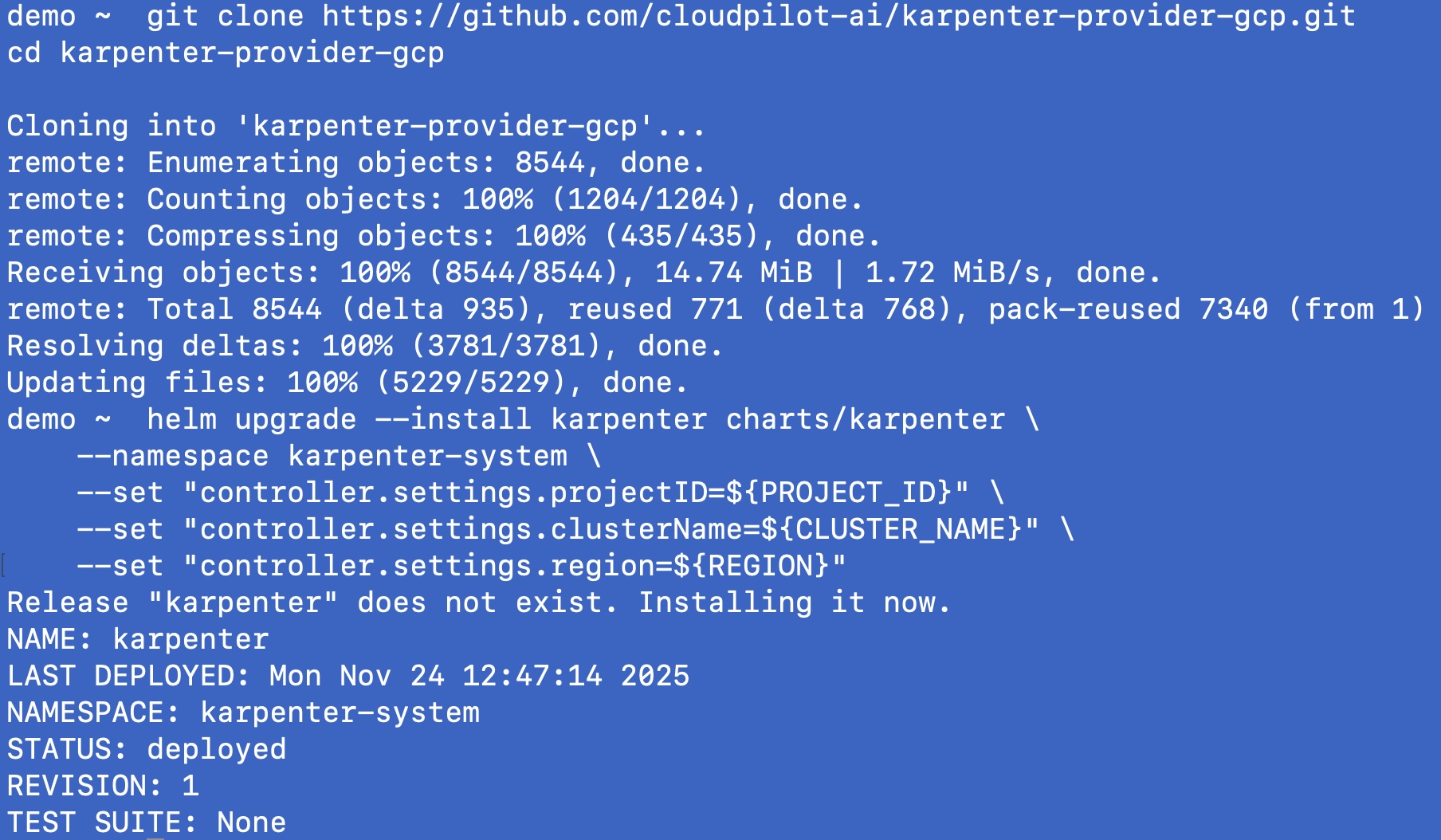

Since the GCP provider is in active development, we will clone the repository to get the latest charts.

# Create namespace
kubectl create namespace karpenter-system

# Clone the repository
git clone https://github.com/cloudpilot-ai/karpenter-provider-gcp.git
cd karpenter-provider-gcp

# Install via Helm
helm upgrade --install karpenter ./charts/karpenter \
  --namespace karpenter-system --create-namespace \
  --set "controller.settings.projectID=${PROJECT_ID}" \
  --set "controller.settings.location=${ZONE}" \
  --set "controller.settings.clusterName=${CLUSTER_NAME}" \
  --set "credentials.enabled=false" \
  --set "serviceAccount.annotations.iam\.gke\.io/gcp-service-account=${KARPENTER_SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"
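With `credentials.enabled=false`, Karpenter relies entirely on Workload Identity, so a typo in the annotation passed via `--set` would only surface later as permission errors. A hypothetical check, assuming the chart names the Kubernetes service account `karpenter` (which matches the `karpenter-system/karpenter` member bound earlier):

```bash
# Hypothetical check: compare the GSA annotation on Karpenter's Kubernetes
# service account with the expected Google service account email.
check_wi_annotation() {
  local expected="$1" actual
  # Dots inside the annotation key must be escaped in kubectl's JSONPath.
  actual=$(kubectl get serviceaccount karpenter -n karpenter-system \
    -o jsonpath='{.metadata.annotations.iam\.gke\.io/gcp-service-account}')
  if [[ "$actual" != "$expected" ]]; then
    echo "annotation mismatch: got '${actual}', want '${expected}'" >&2
    return 1
  fi
  echo "Workload Identity annotation OK"
}
```

Usage: `check_wi_annotation "${KARPENTER_SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"`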

Output:

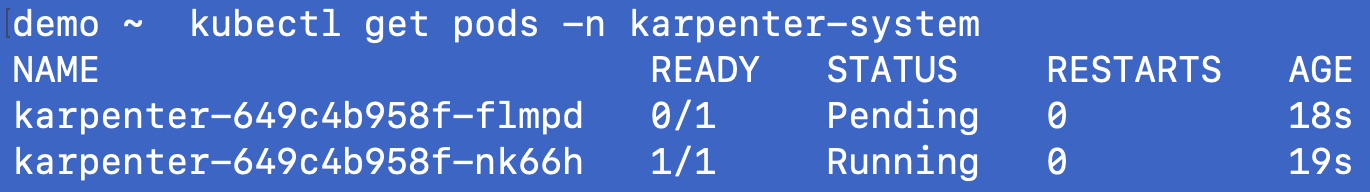

Command (check the Karpenter pods):

kubectl get pods -n karpenter-system

This is where the magic happens. We need to tell Karpenter what kind of nodes it may create, using two resources: a GCENodeClass and a NodePool.

The GCENodeClass resource tells Karpenter how to configure the GCE VMs it launches (for example, which OS image, boot disk, and service account to use).

Create a file named nodeclass.yaml:

apiVersion: karpenter.k8s.gcp/v1alpha1
kind: GCENodeClass
metadata:
  name: default
spec:
  imageSelectorTerms:
    - alias: ContainerOptimizedOS@latest
  disks:
    - category: pd-balanced
      sizeGiB: 60
      boot: true
  serviceAccount: "karpenter-sa@saiyam-project.iam.gserviceaccount.com"

Note: replace saiyam-project in the serviceAccount field with your own project ID.
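Rather than hand-editing the project ID, you can render the manifest from the variables exported earlier. A minimal sketch using an unquoted heredoc, so that `${KARPENTER_SA_NAME}` and `${PROJECT_ID}` are expanded by the shell; the function name and its output-path argument are hypothetical:

```bash
# Hypothetical helper: write a GCENodeClass manifest whose serviceAccount
# is built from the current variables instead of a hard-coded project ID.
# Usage: render_nodeclass [output-file]
render_nodeclass() {
  local out="${1:-nodeclass.yaml}"
  # Unquoted EOF: ${...} references inside the heredoc are expanded.
  cat > "$out" <<EOF
apiVersion: karpenter.k8s.gcp/v1alpha1
kind: GCENodeClass
metadata:
  name: default
spec:
  imageSelectorTerms:
    - alias: ContainerOptimizedOS@latest
  disks:
    - category: pd-balanced
      sizeGiB: 60
      boot: true
  serviceAccount: "${KARPENTER_SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"
EOF
}
```

Usage: `render_nodeclass nodeclass.yaml`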

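The NodePool resource tells Karpenter which nodes it may create (capacity type and architecture), how much capacity it may provision in total (limits), and when it may remove or consolidate nodes (disruption).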

Create a file named nodepool.yaml:

apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      nodeClassRef:
        group: karpenter.k8s.gcp
        kind: GCENodeClass
        name: default
      requirements:
        - key: "karpenter.sh/capacity-type"
          operator: In
          values: ["on-demand", "spot"] # Let Karpenter pick Spot if available!
        - key: "kubernetes.io/arch"
          operator: In
          values: ["amd64"]
  limits:
    cpu: 100
  disruption:
    consolidationPolicy: WhenEmptyOrUnderutilized
    consolidateAfter: 1m

Command (apply both resources):

kubectl apply -f nodeclass.yaml
kubectl apply -f nodepool.yaml
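Because these are custom resources from an alpha provider, schema mistakes (for example, lost YAML indentation) are easiest to catch before anything changes. A sketch of a hypothetical wrapper that first validates every file with `kubectl apply --dry-run=server` (the API server validates the objects without persisting them) and only then applies them:

```bash
# Hypothetical wrapper: validate all manifests server-side first, then apply.
# Nothing is applied if any file fails validation.
apply_validated() {
  local f
  for f in "$@"; do
    kubectl apply --dry-run=server -f "$f" || return 1
  done
  for f in "$@"; do
    kubectl apply -f "$f" || return 1
  done
}
```

Usage: `apply_validated nodeclass.yaml nodepool.yaml`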

Watch the logs: follow the Karpenter controller logs to see it make decisions in real time.

kubectl logs -f -n karpenter-system deployment/karpenter

Because we created a minimal single-node cluster, one Karpenter replica was left in Pending. As soon as we applied the GCENodeClass and NodePool, Karpenter picked up that pending pod, launched a Spot t2d-standard-1 instance for it, and joined the instance to the cluster. Below are the scaling logs:

{"level":"INFO","time":"2025-11-24T09:34:09.295Z","logger":"controller","message":"found provisionable pod(s)","commit":"76d7872","controller":"provisioner","namespace":"","name":"","reconcileID":"66fa324d-8df9-4ea0-a305-7a6c75e51d4a","Pods":"karpenter-system/karpenter-646cc8c9c5-58lvw","duration":"75.015532ms"}

{"level":"INFO","time":"2025-11-24T09:34:09.295Z","logger":"controller","message":"computed new nodeclaim(s) to fit pod(s)","commit":"76d7872","controller":"provisioner","namespace":"","name":"","reconcileID":"66fa324d-8df9-4ea0-a305-7a6c75e51d4a","nodeclaims":1,"pods":1}

{"level":"INFO","time":"2025-11-24T09:34:09.329Z","logger":"controller","message":"created nodeclaim","commit":"76d7872","controller":"provisioner","namespace":"","name":"","reconcileID":"66fa324d-8df9-4ea0-a305-7a6c75e51d4a","NodePool":{"name":"default"},"NodeClaim":{"name":"default-7qv4m"},"requests":{"cpu":"681m","memory":"1918193920","pods":"8"},"instance-types":"c2d-highcpu-2, c2d-standard-2, c3-highcpu-4, c3d-highcpu-4, e2-medium and 10 other(s)"}

{"level":"INFO","time":"2025-11-24T09:34:09.563Z","logger":"controller","message":"Found instance template","commit":"76d7872","controller":"nodeclaim.lifecycle","controllerGroup":"karpenter.sh","controllerKind":"NodeClaim","NodeClaim":{"name":"default-7qv4m"},"namespace":"","name":"default-7qv4m","reconcileID":"9955389f-409a-4e80-ae1a-da8b37ffd737","templateName":"gke-karpenter-demo-karpenter-default-3ee7d541","clusterName":"karpenter-demo"}

{"level":"INFO","time":"2025-11-24T09:34:19.829Z","logger":"controller","message":"Created instance","commit":"76d7872","controller":"nodeclaim.lifecycle","controllerGroup":"karpenter.sh","controllerKind":"NodeClaim","NodeClaim":{"name":"default-7qv4m"},"namespace":"","name":"default-7qv4m","reconcileID":"9955389f-409a-4e80-ae1a-da8b37ffd737","instanceName":"karpenter-default-7qv4m","instanceType":"t2d-standard-1","zone":"us-central1-a","projectID":"saiyam-project","region":"us-central1","providerID":"karpenter-default-7qv4m","providerID":"karpenter-default-7qv4m","Labels":{"goog-k8s-cluster-name":"karpenter-demo","karpenter-k8s-gcp-gcenodeclass":"default","karpenter-sh-nodepool":"default"},"Tags":{"items":["gke-karpenter-demo-e64cf7e5-node"]},"Status":""}

{"level":"INFO","time":"2025-11-24T09:34:19.829Z","logger":"controller","message":"launched nodeclaim","commit":"76d7872","controller":"nodeclaim.lifecycle","controllerGroup":"karpenter.sh","controllerKind":"NodeClaim","NodeClaim":{"name":"default-7qv4m"},"namespace":"","name":"default-7qv4m","reconcileID":"9955389f-409a-4e80-ae1a-da8b37ffd737","provider-id":"gce://saiyam-project/us-central1-a/karpenter-default-7qv4m","instance-type":"t2d-standard-1","zone":"us-central1-a","capacity-type":"spot","allocatable":{"cpu":"940m","ephemeral-storage":"27Gi","memory":"2837194998","pods":"110"}}

{"level":"INFO","time":"2025-11-24T09:34:36.905Z","logger":"controller","message":"reconciling CSR","commit":"76d7872","controller":"csr-controller","controllerGroup":"certificates.k8s.io","controllerKind":"CertificateSigningRequest","CertificateSigningRequest":{"name":"node-csr-qH0T_GHbpKcjv54hSF3fdTGP3Ot0v8_ZJmEXTHDlXTk"},"namespace":"","name":"node-csr-qH0T_GHbpKcjv54hSF3fdTGP3Ot0v8_ZJmEXTHDlXTk","reconcileID":"039ca278-2201-40d1-92a8-242540442f33","name":"node-csr-qH0T_GHbpKcjv54hSF3fdTGP3Ot0v8_ZJmEXTHDlXTk"}

{"level":"INFO","time":"2025-11-24T09:34:36.905Z","logger":"controller","message":"approving bootstrap CSR","commit":"76d7872","controller":"csr-controller","controllerGroup":"certificates.k8s.io","controllerKind":"CertificateSigningRequest","CertificateSigningRequest":{"name":"node-csr-qH0T_GHbpKcjv54hSF3fdTGP3Ot0v8_ZJmEXTHDlXTk"},"namespace":"","name":"node-csr-qH0T_GHbpKcjv54hSF3fdTGP3Ot0v8_ZJmEXTHDlXTk","reconcileID":"039ca278-2201-40d1-92a8-242540442f33","name":"node-csr-qH0T_GHbpKcjv54hSF3fdTGP3Ot0v8_ZJmEXTHDlXTk","username":"kubelet-nodepool-bootstrap"}

{"level":"INFO","time":"2025-11-24T09:34:42.109Z","logger":"controller","message":"registered nodeclaim","commit":"76d7872","controller":"nodeclaim.lifecycle","controllerGroup":"karpenter.sh","controllerKind":"NodeClaim","NodeClaim":{"name":"default-7qv4m"},"namespace":"","name":"default-7qv4m","reconcileID":"79900a0c-f95a-48d1-a8d5-fbc8bf93f067","provider-id":"gce://saiyam-project/us-central1-a/karpenter-default-7qv4m","Node":{"name":"karpenter-default-7qv4m"}}

{"level":"INFO","time":"2025-11-24T09:34:44.258Z","logger":"controller","message":"reconciling CSR","commit":"76d7872","controller":"csr-controller","controllerGroup":"certificates.k8s.io","controllerKind":"CertificateSigningRequest","CertificateSigningRequest":{"name":"csr-9zzqj"},"namespace":"","name":"csr-9zzqj","reconcileID":"4ba71e87-d6a9-4585-a2c3-c1acc619049f","name":"csr-9zzqj"}

{"level":"INFO","time":"2025-11-24T09:34:45.377Z","logger":"controller","message":"initialized nodeclaim","commit":"76d7872","controller":"nodeclaim.lifecycle","controllerGroup":"karpenter.sh","controllerKind":"NodeClaim","NodeClaim":{"name":"default-7qv4m"},"namespace":"","name":"default-7qv4m","reconcileID":"090158cd-ccd6-4bd2-bbad-cd19da3e87a1","provider-id":"gce://saiyam-project/us-central1-a/karpenter-default-7qv4m","Node":{"name":"karpenter-default-7qv4m"},"allocatable":{"cpu":"940m","ephemeral-storage":"24046317071","hugepages-1Gi":"0","hugepages-2Mi":"0","memory":"2864940Ki","pods":"110"}}
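The JSON lines are dense. To read just the sequence of events (found provisionable pod, created nodeclaim, launched, registered, initialized), you can keep only the `message` field. A sketch with `grep` and `sed` rather than `jq`, assuming one JSON object per line and no escaped quotes inside messages; the function name is hypothetical:

```bash
# Hypothetical filter: print only the "message" field of each JSON log line on stdin.
# Assumes one JSON object per line and no escaped quotes inside the message.
karpenter_messages() {
  grep -o '"message":"[^"]*"' | sed -e 's/^"message":"//' -e 's/"$//'
}
```

Usage: `kubectl logs -n karpenter-system deployment/karpenter | karpenter_messages`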

Verify the new node: check your nodes. A new node should join the cluster shortly.

kubectl get nodes

NAME                                            STATUS   ROLES    AGE     VERSION
gke-karpenter-demo-default-pool-8ec24245-99h5   Ready    <none>   3h19m   v1.33.5-gke.1201000
karpenter-default-7qv4m                         Ready    <none>   47s     v1.33.5-gke.1201000
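If you script this demo, `kubectl wait` is awkward at this step because it errors out when no node matches the selector yet. A hypothetical polling loop is simpler; it assumes Karpenter labels the nodes it launches with `karpenter.sh/nodepool=<name>`, as Karpenter's v1 API does:

```bash
# Hypothetical helper: poll until at least one node from the given NodePool
# reports STATUS "Ready", or give up after the timeout (in seconds).
# Usage: wait_for_karpenter_node [nodepool] [timeout-seconds]
wait_for_karpenter_node() {
  local pool="${1:-default}" timeout="${2:-300}" waited=0
  while (( waited < timeout )); do
    # Column 2 of `kubectl get nodes --no-headers` is STATUS.
    if kubectl get nodes -l "karpenter.sh/nodepool=${pool}" --no-headers 2>/dev/null \
        | awk '$2 == "Ready" { found = 1 } END { exit !found }'; then
      return 0
    fi
    sleep 5
    waited=$(( waited + 5 ))
  done
  return 1
}
```

Usage: `wait_for_karpenter_node default 300 && kubectl get nodes`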

You now have Karpenter running on GCP! It works by watching for pods that the scheduler cannot place and calling the GCE API directly to launch instances that satisfy those pods' requirements. This bypasses the traditional rigidity of Managed Instance Groups.

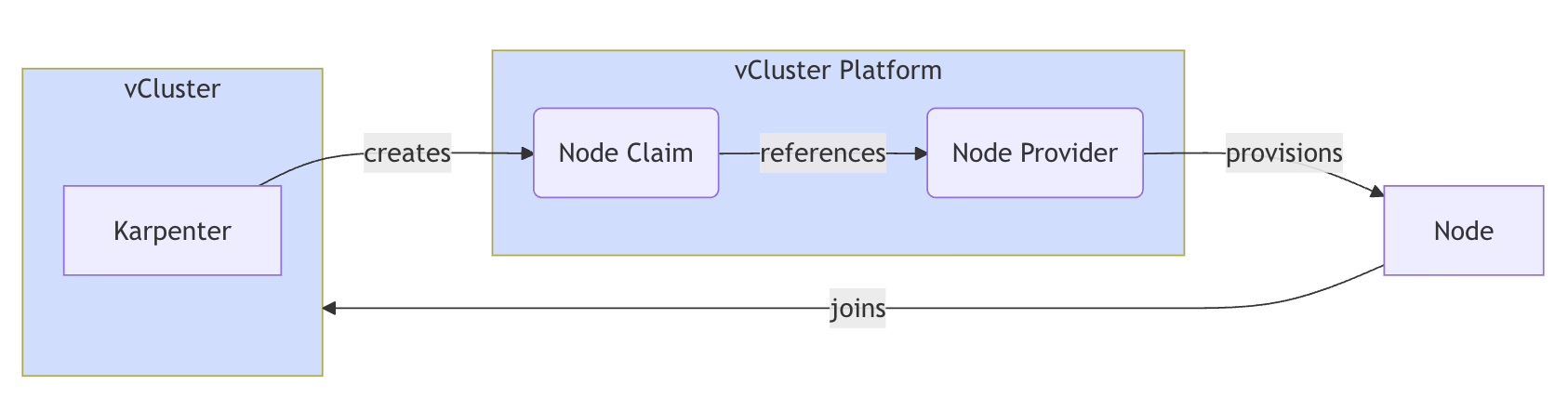

Although we have seen Karpenter in action on GCP, support there is still limited and in alpha. However, this capability is available and supported for enterprise deployments in vCluster Auto Nodes, which is powered by the same open-source engine: Karpenter. Instead of solving the problem for a single cloud provider such as AWS, Auto Nodes works across multiple Kubernetes environments and cloud providers. It can also mix environments in one cluster: you can combine nodes from public cloud, private cloud, and even bare metal into a single Kubernetes cluster.

Read more about vCluster Auto Nodes here.

Deploy your first virtual cluster today.