A New Foundation for Multi-Tenancy: Introducing vCluster Standalone

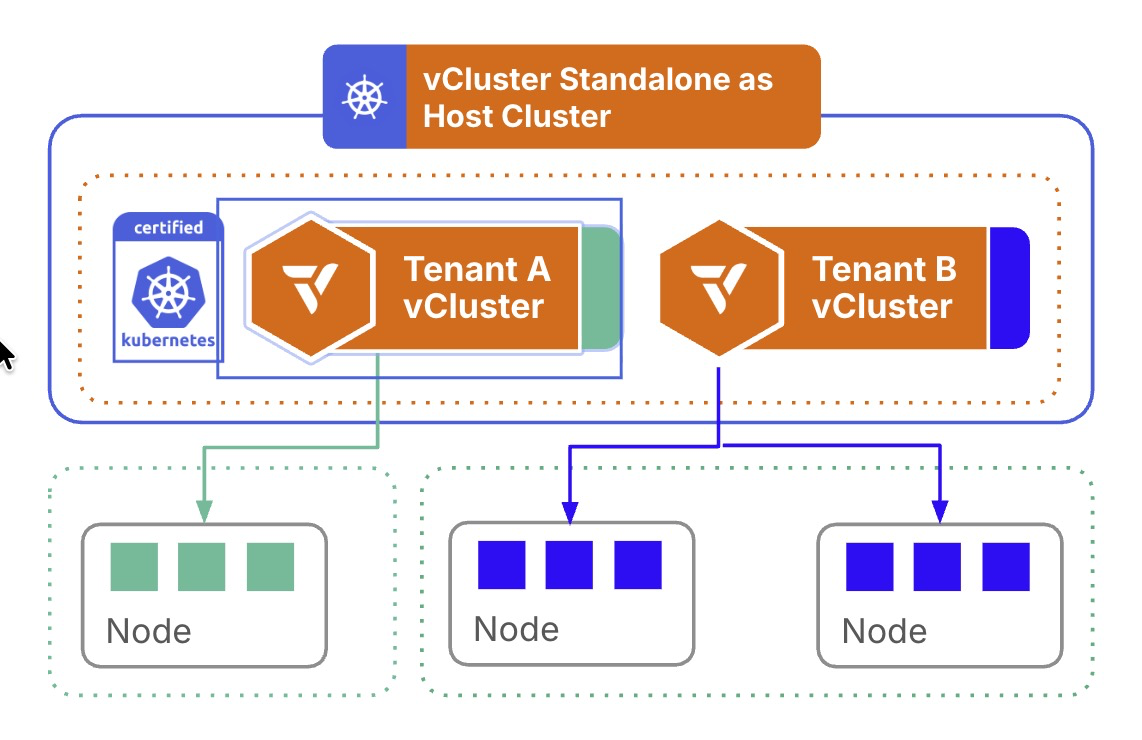

From the beginning, every Kubernetes operation started with a host cluster, whether it was K3s, EKS, Rancher, or Talos. With vCluster, teams gained the ability to layer virtual clusters on top of these hosts, bringing new levels of flexibility, isolation, and speed. This model has powered countless production deployments and continues to be the standard way organizations run Kubernetes multi-tenancy today.

As adoption grew, though, a new opportunity emerged. Managing both the host distribution and the virtual cluster layer sometimes introduced extra steps, different vendors, separate upgrade paths, and distinct operational processes. For many teams, that flexibility is a feature. But for others, the ability to consolidate host and virtual cluster into a single, unified foundation is even more compelling.

With the release of vCluster Standalone (v0.29), we’ve taken the next step in the tenancy spectrum: a vendor-supported way to bootstrap Kubernetes directly on bare metal or VMs without needing an existing host cluster. Instead of managing clusters across different providers, vCluster itself becomes the foundation.

Over the past few years, vCluster has steadily evolved to simplify multi-tenancy and reduce operational pain:

Now, with vCluster Standalone, the range extends even further. For the first time, vCluster has no dependency on an external host cluster: you install Standalone directly on bare metal or VMs, and that installation becomes the foundation. This removes the “Cluster 1 problem,” where you first have to work out how to provision and run the underlying host cluster before you can use virtual clusters.

Most importantly, Standalone lets you use the full range of tenancy models for your virtual clusters without ever needing to worry about managing an external host cluster.

vCluster Standalone is free to use and runs with Private Nodes built in, delivering strict isolation out of the box. On top of Standalone, you can create virtual clusters just as you would on any other host cluster, using either the Shared Nodes or the Private Nodes tenancy model.

Once installed, Standalone runs as a systemd service that initializes a full Kubernetes control plane directly on the target infrastructure. The process starts with an install script that downloads the vCluster binary from GitHub releases and sets it up as a systemd unit:

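    # Become root first: a root login shell does not inherit variables
    # exported in the previous shell.
    sudo su -

    # Pick the release to install.
    export VCLUSTER_VERSION="v0.29.0"

    # Download the install script and run it.
    curl -sfL https://github.com/loft-sh/vcluster/releases/download/${VCLUSTER_VERSION}/install-standalone.sh | sh -s -- --vcluster-name standalone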
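Piping a remote script straight into a root shell is convenient, but some teams prefer to download it and read it first. The following is a minimal sketch of that variation. It uses only the URL pattern from the command above; the function names and the default output path are illustrative assumptions, not part of vCluster.

```shell
# Sketch: fetch the Standalone install script to a file so it can be reviewed
# before it is run. Function names and the default path are hypothetical.

# Build the release URL for a version tag (pattern taken from the install command).
install_script_url() {
  printf 'https://github.com/loft-sh/vcluster/releases/download/%s/install-standalone.sh\n' "$1"
}

# Download the script to a local file instead of piping it into sh.
fetch_install_script() {
  version="$1"
  dest="${2:-./install-standalone.sh}"
  curl -sfL "$(install_script_url "$version")" -o "$dest"
}
```

For example, run `fetch_install_script v0.29.0`, read `./install-standalone.sh`, and then, as root, run `sh ./install-standalone.sh --vcluster-name standalone`.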

The script requires two components: access to the target server or VM and a vCluster YAML configuration file, as shown below:

    controlPlane:
      # Enable standalone
      standalone:
        enabled: true
        # Optional: Control Plane node will also be considered a worker node
        joinNode:
          enabled: true
    # Required for adding additional worker nodes
    privateNodes:
      enabled: true
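If you are preparing the machine by hand, one way to put this configuration in place is a heredoc. This is only a sketch: `CONFIG_PATH` is a hypothetical variable, and where the install script expects to find the file is not covered here, so check the install documentation for the location to use.

```shell
# Sketch: write the Standalone configuration to a file.
# CONFIG_PATH is a hypothetical variable; point it at the location your
# installation actually reads.
CONFIG_PATH="${CONFIG_PATH:-./vcluster.yaml}"

cat > "$CONFIG_PATH" <<'EOF'
controlPlane:
  # Enable standalone
  standalone:
    enabled: true
    # Optional: control plane node also acts as a worker node
    joinNode:
      enabled: true
# Required for adding additional worker nodes
privateNodes:
  enabled: true
EOF

# Quick sanity check that the file was written with the expected key.
grep -q 'standalone:' "$CONFIG_PATH" && echo "wrote $CONFIG_PATH"
```

The quoted `'EOF'` delimiter keeps the shell from expanding anything inside the YAML, so the file is written exactly as shown.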

During initialization, Standalone starts the essential Kubernetes control plane components: API Server, Embedded etcd, Kube Controller Manager, and Scheduler.

The system automatically installs kubectl for immediate cluster interaction and provisions default networking components, including CoreDNS for service discovery, kube-proxy for network routing, and Flannel as the default CNI (though alternative CNI solutions can be substituted).
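Since kubectl is installed for you, a quick way to confirm that the default add-ons came up is to scan the pod listing for them. The helper below is a rough sketch: `check_addons` is a hypothetical name, and the name fragments it searches for (`coredns`, `kube-proxy`, `flannel`) are assumptions about how the pods are named in your installation.

```shell
# Sketch: report which default add-ons appear in a pod listing read from stdin.
# The name fragments below are assumptions, not guaranteed pod names.
check_addons() {
  listing="$(cat)"
  missing=0
  for name in coredns kube-proxy flannel; do
    if printf '%s\n' "$listing" | grep -q "$name"; then
      echo "found: $name"
    else
      echo "missing: $name"
      missing=1
    fi
  done
  # Non-zero exit status if any add-on was not seen.
  return "$missing"
}
```

On a running Standalone node this could be used as `kubectl get pods --all-namespaces | check_addons`. If you swap Flannel for another CNI, adjust the list of names accordingly.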

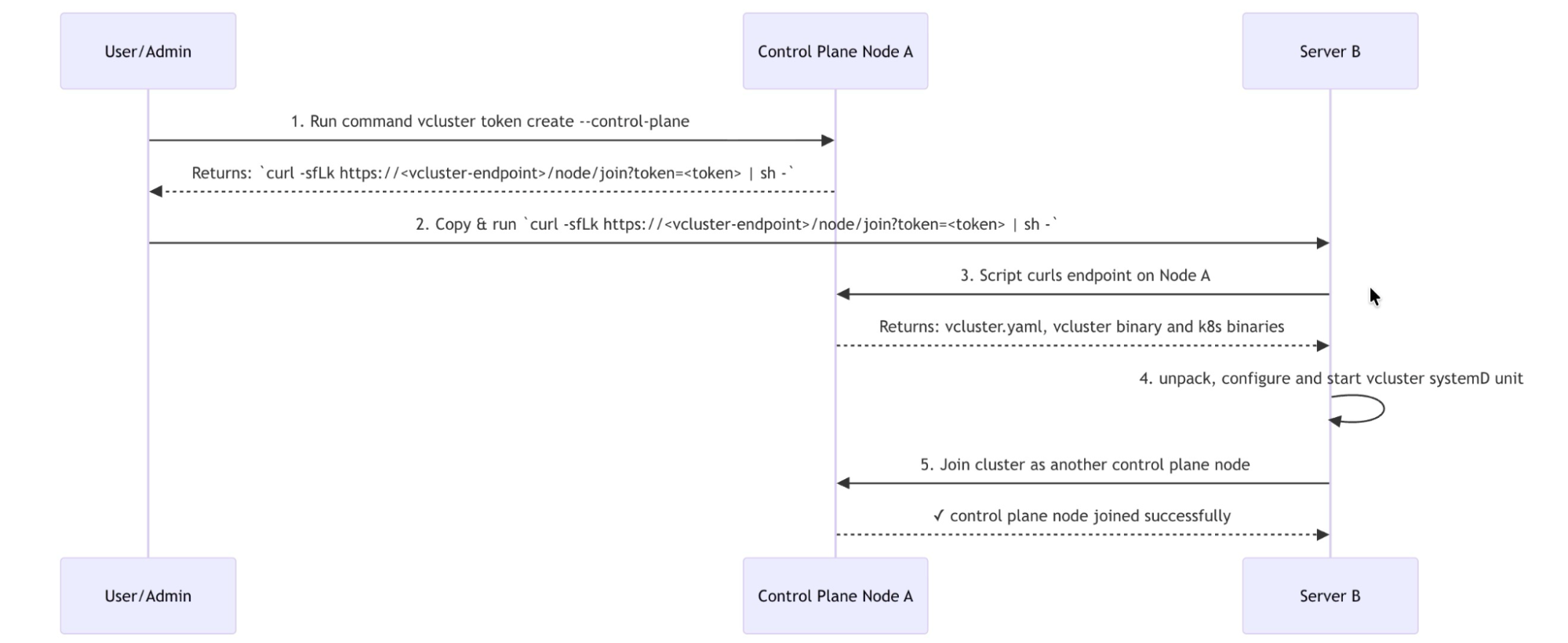

Once operational, Standalone functions as a complete Kubernetes cluster capable of hosting your standard workloads alongside the vCluster Platform and additional virtual clusters if required. It is also suited to production environments: Standalone supports multi-control-plane high availability, though this feature requires an enterprise license. HA setup uses a token-based joining mechanism in which the initial control plane node generates join tokens for additional nodes. The entire process can be summarized in these steps:

The first node creates a "control plane bundle" containing the vCluster binary, Kubernetes components, and configuration, which new nodes download, extract, and use to join the cluster seamlessly.

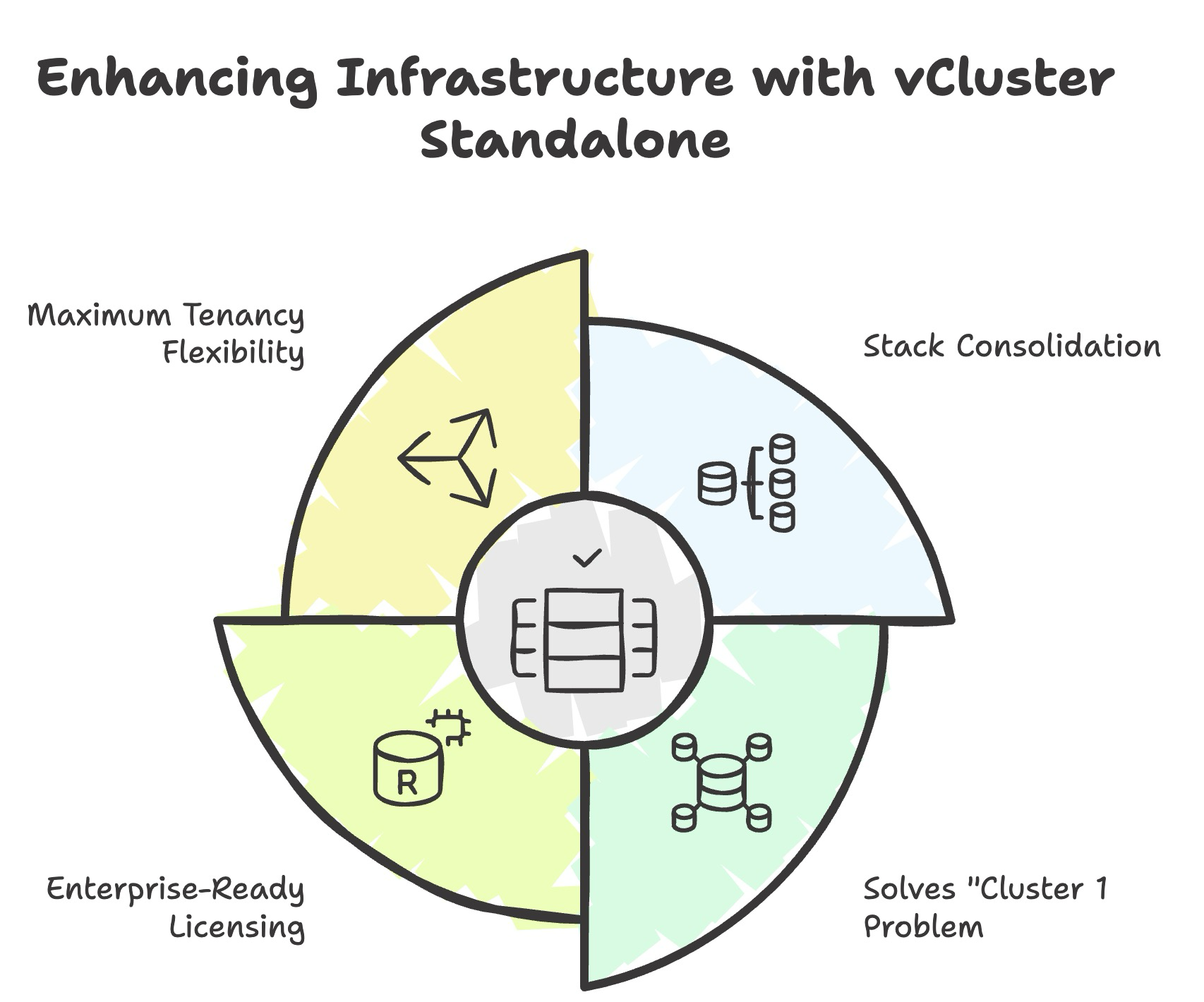

As organizations explore adopting vCluster Standalone, the question naturally arises: how does this benefit your infrastructure strategy? There are many advantages, but five critical areas where implementing Standalone delivers the most impact are as follows:

vCluster Standalone unifies Kubernetes operations by delivering both the host and virtual cluster layers under one vendor. Traditional setups require separate contracts, support, and security reviews for host clusters (Rancher, K3s, cloud) and virtual cluster platforms.

This creates overhead, slows procurement, and complicates compliance. Standalone removes this friction, providing unified support from bare metal through virtual clusters, streamlined legal processes, and simplified audits. The result: faster operations and reduced vendor management.

Historically, teams needed a Kubernetes distribution (OpenShift, Rancher/K3s, Talos, kubeadm) just to host vCluster, creating a complex bootstrapping burden. Standalone eliminates this by running directly on bare metal or VMs.

Teams no longer maintain expertise in multiple distros or manage separate host clusters, cutting complexity and accelerating adoption.

The free tier offers unlimited single-node deployments, ideal for dev, test, and CI use cases. Enterprise licensing unlocks HA control planes with HA etcd, ensuring production resilience. Bundled with your existing license, it’s a simple way to manage your host cluster.

With Standalone as the host, you can create virtual clusters using any tenancy model — Shared Nodes, Private Nodes, or (soon) Auto Nodes. This means teams can tailor isolation to their needs: use Shared Nodes for lightweight dev, Private Nodes for regulated or GPU workloads, and Auto Nodes for elastic scaling. All tenancy models run consistently on top of a single Standalone control plane, simplifying management while optimizing cost, compliance, and performance.
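As a rough illustration of choosing a tenancy model per virtual cluster, the helper below prints a minimal values file for a given model. It is a sketch under assumptions: `tenancy_values` is a hypothetical helper, Shared Nodes is assumed to need no overrides, and the Private Nodes setting reuses the `privateNodes.enabled` key from the Standalone configuration earlier in this post. Auto Nodes is left out because it is not yet available.

```shell
# Sketch: print a minimal values file for a tenancy model.
# tenancy_values is a hypothetical helper, not a vCluster command.
tenancy_values() {
  case "$1" in
    shared)
      # Shared Nodes: assumed here to need no extra settings.
      printf '# Shared Nodes: no overrides\n'
      ;;
    private)
      # Private Nodes: same key as in the Standalone configuration.
      printf 'privateNodes:\n  enabled: true\n'
      ;;
    *)
      echo "unknown tenancy model: $1" >&2
      return 1
      ;;
  esac
}
```

For example, `tenancy_values private > values.yaml` followed by `vcluster create team-a --namespace team-a --values values.yaml` would create a virtual cluster from that file; the cluster and namespace names here are placeholders.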

With Standalone progressing quickly, it’s helpful to know what’s working now and what’s coming. Today, these features are available for you to try:

As this blog has covered, eliminating infrastructure dependencies while maintaining operational flexibility is critical in today's Kubernetes ecosystem. vCluster Standalone changes the traditional approach by removing the need for an external host cluster entirely: vCluster itself bootstraps directly on bare metal or VMs.

By solving the "Cluster 1 problem," Standalone eliminates the complexity of managing multiple vendors while supporting every tenancy model, from lightweight namespaces to dedicated private nodes—all within a unified solution. Whether you're building enterprise SaaS platforms or deploying bare metal GPU clusters, infrastructure management is no longer a multi-vendor challenge but a streamlined, single-vendor experience.

Learn more and get started:

Deploy your first virtual cluster today.