vCluster v0.27: Introducing Private Nodes for Dedicated Clusters


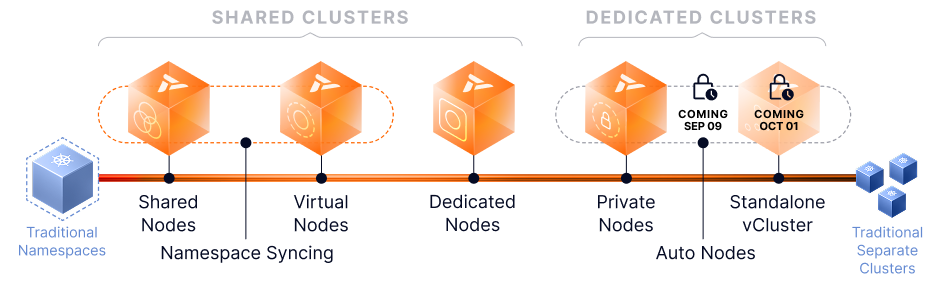

Tenancy requirements are no longer static. A startup may be comfortable with shared infrastructure now but might need dedicated control in the future. Similarly, a team might use shared nodes for development environments but require stronger isolation for production workloads.

vCluster is evolving to support the complete tenancy spectrum in Kubernetes, covering needs that go beyond what earlier vCluster versions could address as organizations grow their infrastructure. vCluster v0.27 introduces Private Nodes, a significant step toward fully hosted control planes for use cases that require dedicated compute and GPU resources and complete tenant isolation.

vCluster excels at creating virtual clusters within a host cluster, each with its own control plane. Thanks to its syncer-based architecture, overhead and latency stay low. However, some isolation, performance, and security patterns require hardware-level separation that was not feasible before, such as running a custom runtime for each tenant.

Organizations requiring full isolation and customized runtimes (for use cases like private cloud or regulated environments) were forced to run and manage separate Kubernetes clusters, sacrificing operational simplicity. What the spectrum lacked was tenant-owned worker nodes.

With v0.27, vCluster adds Private Nodes, completing the spectrum from simple namespaces, to full virtual clusters, to “bring your own node” cluster patterns. Private Nodes build on the dedicated-nodes model by giving each tenant a completely isolated environment, both logically and operationally.

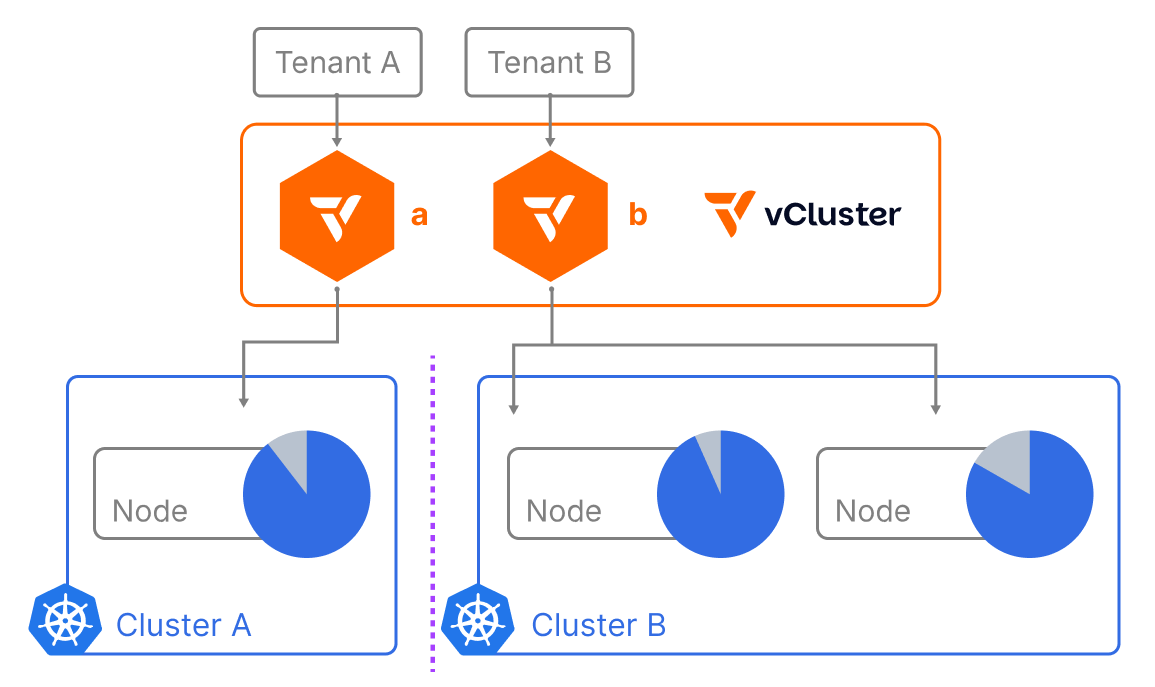

In this configuration, each tenant runs in its own virtual cluster with dedicated nodes and separate control and data planes. No tenant outside the virtual cluster can see or access these nodes, which makes the setup well suited to untrusted or external tenants.

Nodes connect directly to the virtual cluster's control plane, so tenants can use their own nodes, from cloud or on-premises providers, for compute, memory, and GPU resources. vCluster runs the control plane inside the host cluster, and when Private Nodes are enabled, the syncer is disabled.
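A minimal sketch of turning the feature on might look like the script below, which writes a `vcluster.yaml` and creates a virtual cluster from it. It assumes the `privateNodes.enabled` key described in the v0.27 configuration reference, and it exposes the control plane through a `LoadBalancer` Service so that nodes outside the host cluster can reach it. The name `vcluster-a` is a placeholder; check the configuration reference for your version before relying on these keys.

```shell
#!/usr/bin/env bash
# Sketch: enable Private Nodes through vcluster.yaml, then create the
# virtual cluster if the vcluster CLI is available.
# Assumptions: the privateNodes.enabled key from the v0.27 config reference,
# and a LoadBalancer Service so that external nodes can reach the API server.
# "vcluster-a" is a placeholder name.
set -euo pipefail

cat > vcluster.yaml <<'EOF'
privateNodes:
  enabled: true           # nodes join via kubeadm; the syncer is disabled
controlPlane:
  service:
    spec:
      type: LoadBalancer  # expose the control plane to nodes outside the host cluster
EOF

# Only attempt creation when the CLI is installed.
if command -v vcluster >/dev/null 2>&1; then
  vcluster create vcluster-a --values vcluster.yaml
fi
```

Keeping the settings in a file rather than in command-line flags makes the same tenant definition easy to review and reuse.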

In essence, Private Nodes combine Kubernetes familiarity, operational simplicity, and uncompromising isolation. Workloads run exclusively on the dedicated nodes, which is essential when direct hardware access, network performance, and data locality are critical.

Let’s examine how it works more closely.

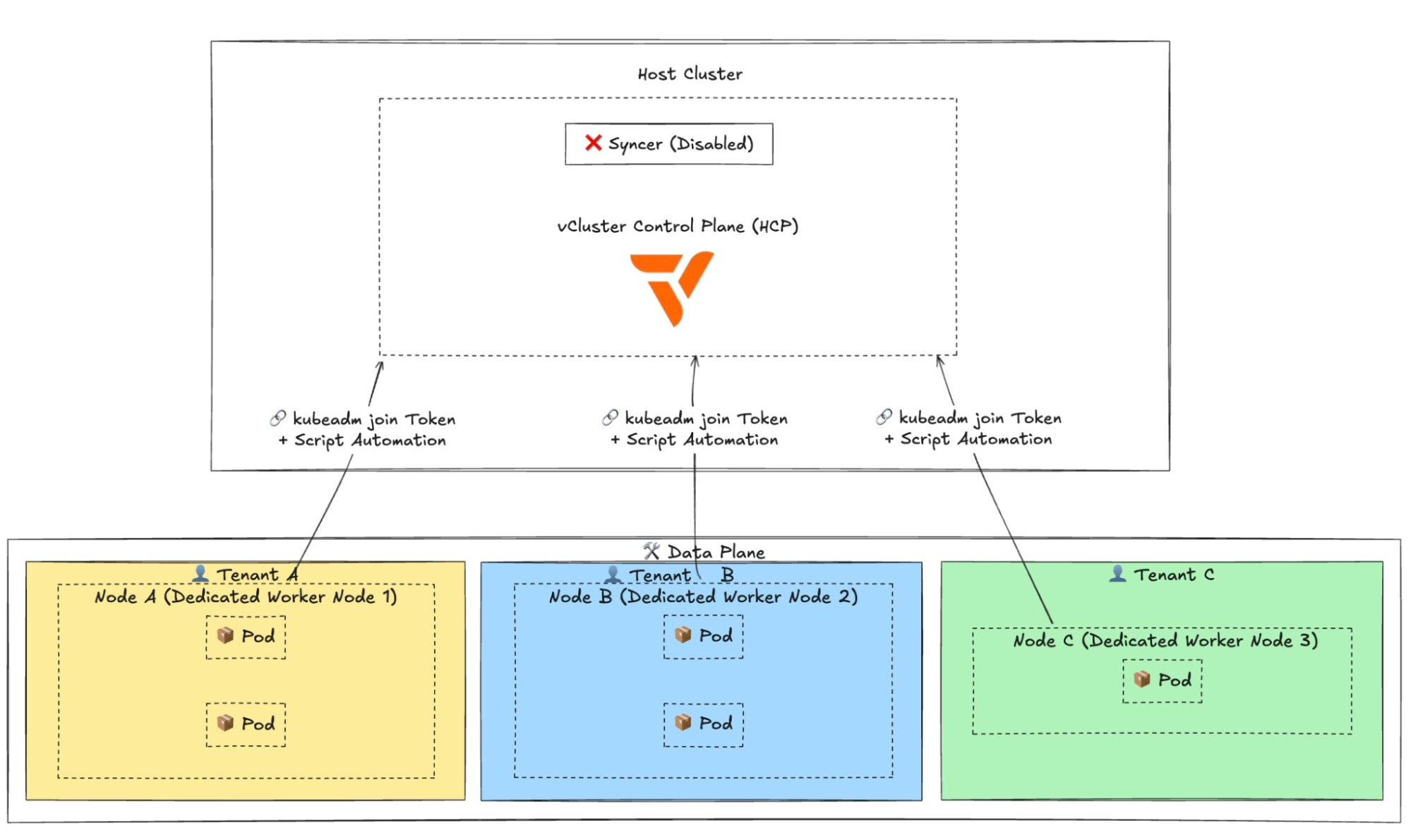

Private Nodes are built around two main components: the control plane, which vCluster manages, and the data plane, which runs the tenant's workloads on its own nodes.

Node onboarding is automated: a background process uses a kubeadm-based script and a load balancer to bring your virtual machines into the cluster. Tenants join worker nodes through a kubeadm-style flow, the same mechanism Kubernetes uses natively to join nodes, with token creation and script execution handled for them:

```shell
# Connect to the virtual cluster and get the join command
vcluster connect vcluster-a
vcluster token create

# SSH into the node and run the join command
curl -sfLk "https://<vcluster-endpoint>/node/join?token=<token>" | sh -
```
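When several machines need to join the same virtual cluster, the join step above can be scripted. The sketch below is hypothetical: `join_url` and `join_nodes` are illustrative helper names, the host names are placeholders, and it assumes the SSH user can run the join script as root, which kubeadm requires. The URL format is copied from the `curl` command above.

```shell
#!/usr/bin/env bash
# Sketch: join several worker nodes to one virtual cluster over SSH.
# join_url and join_nodes are hypothetical helpers; the URL path mirrors
# the join command printed for the virtual cluster.
set -euo pipefail

# Build the join URL from the control plane endpoint and a join token.
join_url() {
  local endpoint="${1:-}" token="${2:-}"
  if [ -z "$endpoint" ] || [ -z "$token" ]; then
    echo "usage: join_url <endpoint> <token>" >&2
    return 1
  fi
  printf 'https://%s/node/join?token=%s\n' "$endpoint" "$token"
}

# Run the join script on each host. Assumes the SSH user is root,
# because kubeadm needs root privileges on the node.
join_nodes() {
  local url="$1"
  shift
  local host
  for host in "$@"; do
    echo "joining ${host}" >&2
    ssh "$host" "curl -sfLk '${url}' | sh -"
  done
}

# Example with placeholder values:
# join_nodes "$(join_url vcluster-a.example.com "$TOKEN")" gpu-node-1 gpu-node-2
```

Separating URL construction from execution keeps the token handling in one place and makes it easy to print the URL before running anything on the nodes.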

The diagram shows a host cluster running the vCluster control plane and how it connects to different tenants. Three dedicated worker nodes in the data plane, each belonging to a different tenant (Tenant A, Tenant B, and Tenant C), are joined through automated kubeadm join tokens and scripts.

Each tenant operates its own node (Node A, Node B, and Node C) and runs its workloads there in isolation. This setup provides secure, production-grade isolation without adding new Custom Resource Definitions (CRDs), keeping operations lightweight and maximizing hardware utilization.

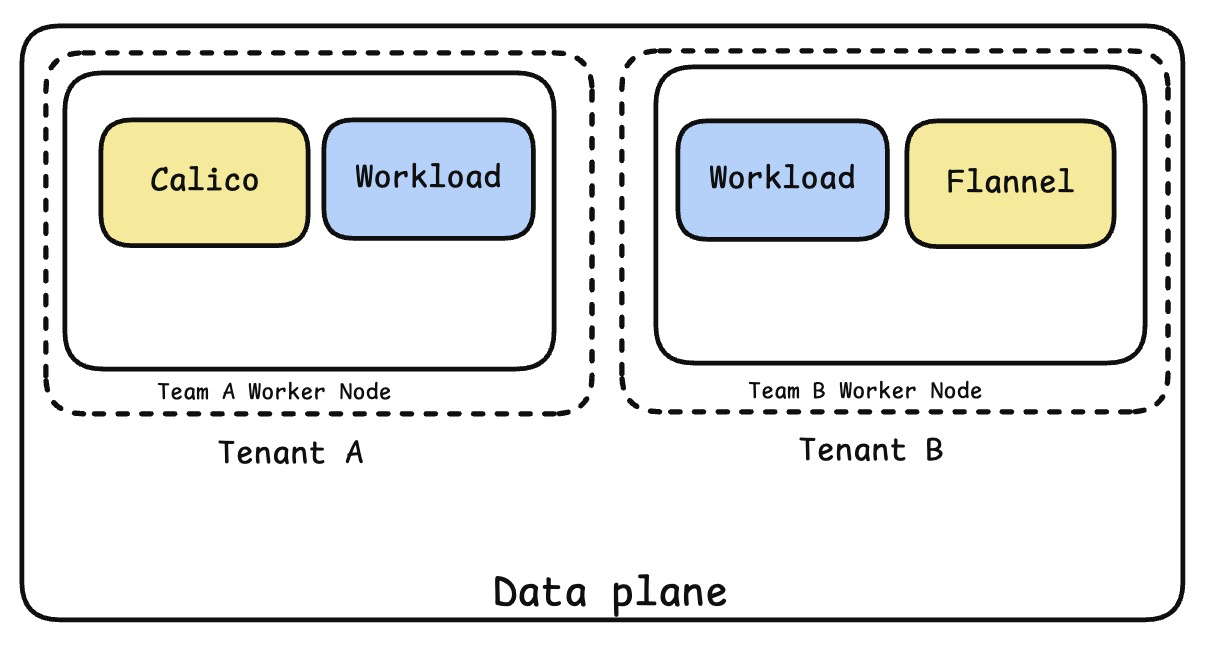

Each tenant can also bring its own Container Runtime Interface (CRI), Container Storage Interface (CSI), and Container Network Interface (CNI) implementations. For example, one tenant can run Calico while another runs Flannel at the same time.
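To make the Calico-and-Flannel example concrete, here is a sketch that installs a different CNI into each tenant's virtual cluster using standard upstream install methods: the Tigera operator Helm chart for Calico and the upstream Flannel manifest. It assumes two virtual clusters named `vcluster-a` and `vcluster-b` (placeholders) whose private nodes have no CNI installed yet, and it relies on `vcluster connect <name> -- <command>` to run each command against the right virtual cluster. Flannel's default manifest uses the `10.244.0.0/16` pod network, so adjust it if your cluster uses another range.

```shell
#!/usr/bin/env bash
# Sketch: install a different CNI in each tenant's virtual cluster.
# Assumptions: virtual clusters "vcluster-a" and "vcluster-b" (placeholders)
# use private nodes with no CNI installed yet.
# VCLUSTER_BIN and HELM_BIN can be overridden, e.g. for a dry run.
set -euo pipefail

VCLUSTER_BIN="${VCLUSTER_BIN:-vcluster}"
HELM_BIN="${HELM_BIN:-helm}"

# Tenant A: Calico, installed with the Tigera operator Helm chart.
install_calico() {
  local vc="$1"
  "$HELM_BIN" repo add projectcalico https://docs.tigera.io/calico/charts
  "$VCLUSTER_BIN" connect "$vc" -- helm install calico projectcalico/tigera-operator \
    --namespace tigera-operator --create-namespace
}

# Tenant B: Flannel, applied from the upstream release manifest
# (its default pod network is 10.244.0.0/16).
install_flannel() {
  local vc="$1"
  "$VCLUSTER_BIN" connect "$vc" -- kubectl apply \
    -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
}

# Usage:
# install_calico vcluster-a
# install_flannel vcluster-b
```

Because each install targets only one virtual cluster, neither tenant's network choice affects the other.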

You can also isolate GPU operators and other custom integrations without cluster-wide definitions. Previously, this was only possible with separate clusters, which are costly because each one incurs its own control plane charges.

Private Nodes introduce a new way to run Kubernetes workloads by clearly separating the control plane from the compute infrastructure. In this model, the control plane stays in a secure, managed host environment, while all workloads execute on dedicated, tenant-owned nodes. This unlocks several use cases.

Organizations are increasingly acquiring large pools of GPUs and CPUs, but using that hardware efficiently across tenants remains challenging. Large models need full-node access to raw compute, which was hard to provide before the Private Nodes pattern. The pattern supports high-performance, data-local workloads without the network performance issues often seen with virtualized hardware.

By running directly on tenant-owned nodes, workloads achieve consistent throughput, direct hardware access, and better efficiency, ideal for AI/ML training, HPC, and other demanding compute tasks.

In many hosted Kubernetes models, tenants face restrictions on using custom drivers and operators. The Private Nodes pattern addresses this by combining a managed control plane with customer-owned compute resources. Tenants can now run their own versions of GPU drivers, CNIs, CSIs, and runtime components without being limited by other tenants’ configurations.

This supports diverse hardware, accelerators, and software stacks while preserving the simplicity of a fully managed Kubernetes control plane.

In compliance-heavy industries such as healthcare, finance, or AI/ML research, teams often need strict workload isolation, data locality, and security assurances. Private Nodes offer complete node isolation, ensuring workloads operate on unshared, tenant-owned hardware while maintaining centralized control.

This setup supports sensitive, untrusted, or custom workloads without risking cross-tenant interference. It is especially valuable for regulated environments and research labs that handle proprietary datasets or experimental models requiring maximum security, consistent performance, and physical resource ownership.

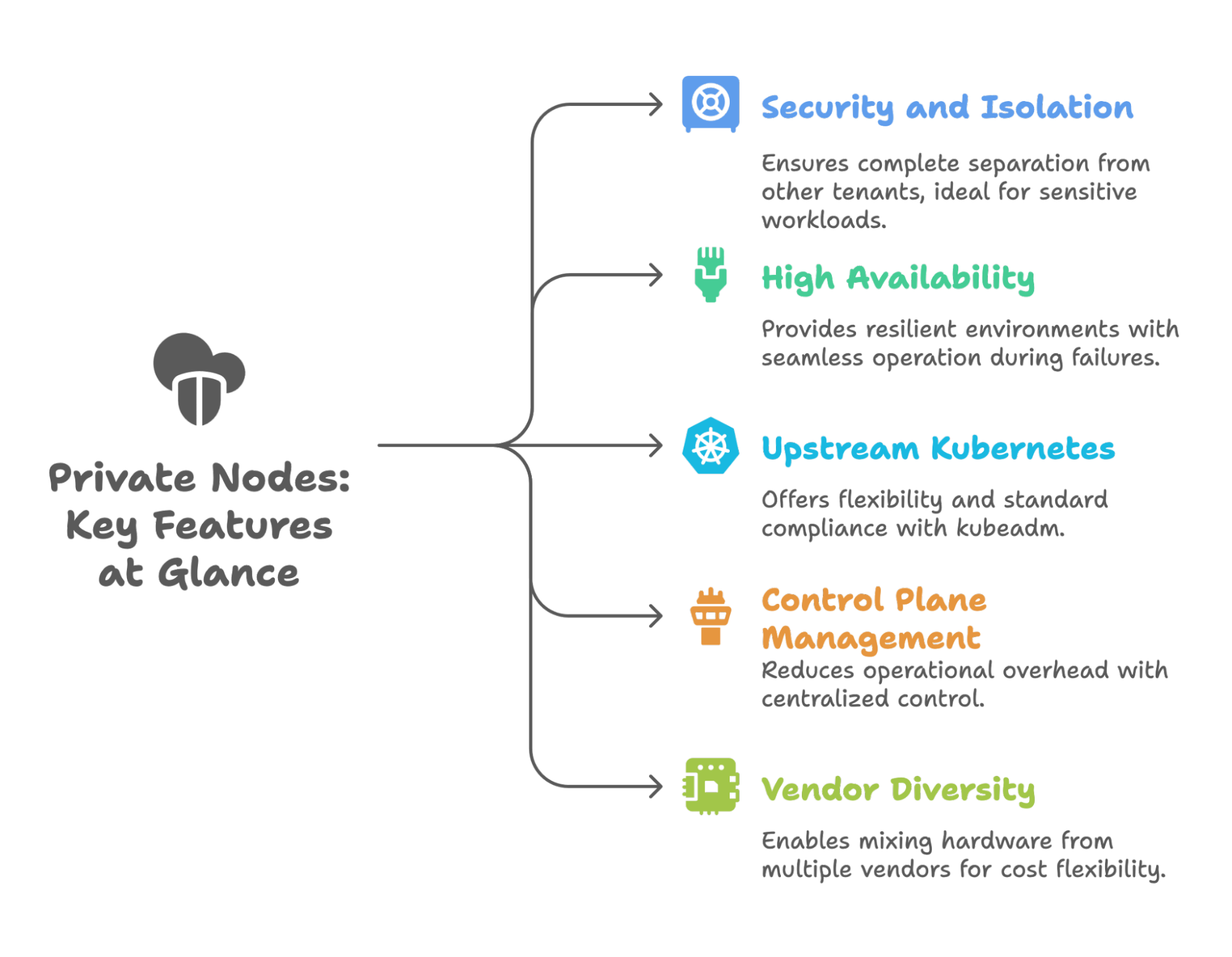

These use cases show the range of workloads Private Nodes can support, particularly those that require deterministic performance, custom runtimes, or compliance-driven isolation. Here are the key benefits.

Workloads run on dedicated, tenant-owned nodes, ensuring complete separation from other tenants. This isolation suits untrusted or sensitive workloads in regulated or high-security environments.

Tenants can fully utilize their own hardware without contention from others. This maximizes performance efficiency and cost-effectiveness for compute-intensive workloads.

Private Nodes deliver resilient Kubernetes environments in which workloads keep running during control plane failures. In our testing, deleting the leading control plane pod triggered automatic leader election and rapid redeployment, with no workload disruption.

This ensures that existing and new workloads remain fully operational, combining control plane stability in the host cluster with tenant-owned compute for minimal downtime and predictable recovery.
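The failover test described above can be approximated with plain `kubectl`. This sketch assumes a highly available virtual cluster whose control plane runs as a StatefulSet named `vcluster-a` in host namespace `vcluster-vcluster-a`; both names are placeholders, so adjust them to your install. It deletes replica `0`, which is not necessarily the current leader, waits for the StatefulSet to become ready again (`kubectl rollout status` requires the `RollingUpdate` update strategy), and lists tenant pods through the virtual cluster before and after.

```shell
#!/usr/bin/env bash
# Sketch: repeat the control plane failover test.
# Assumptions (placeholders): an HA virtual cluster "vcluster-a" whose control
# plane runs as a StatefulSet of the same name in host namespace
# "vcluster-vcluster-a". KUBECTL and VCLUSTER_BIN can be overridden.
set -euo pipefail

KUBECTL="${KUBECTL:-kubectl}"
VCLUSTER_BIN="${VCLUSTER_BIN:-vcluster}"
VC_NAME="${VC_NAME:-vcluster-a}"
HOST_NS="${HOST_NS:-vcluster-vcluster-a}"

# Delete one control plane replica in the host cluster, then wait for the
# StatefulSet to report ready again. Replica 0 is not necessarily the leader.
kill_control_plane_pod() {
  "$KUBECTL" -n "$HOST_NS" delete pod "${VC_NAME}-0"
  "$KUBECTL" -n "$HOST_NS" rollout status "statefulset/${VC_NAME}" --timeout=300s
}

# List tenant pods through the virtual cluster to confirm they kept running.
check_workloads() {
  "$VCLUSTER_BIN" connect "$VC_NAME" -n "$HOST_NS" -- kubectl get pods -A -o wide
}

# Usage:
# check_workloads; kill_control_plane_pod; check_workloads
```

Comparing pod ages and restart counts in the two `check_workloads` outputs shows whether tenant workloads were disrupted.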

Private Nodes use the native Kubernetes node-joining method, built on upstream kubeadm tooling, for flexibility and standards compliance. This approach makes integration and operational management straightforward and vendor-neutral.

This also enables fast node (re)allocation: moving a node from one tenant to another takes only a single command.

The control plane remains centrally managed in the host cluster, reducing operational overhead. Multi-tenancy is still possible, but with clear workload separation at the node level.

Each tenant can choose their own network, storage, and GPU operators. This allows tailored performance tuning and compatibility with diverse hardware requirements.

Private Nodes enable mixing hardware from multiple vendors within a single Kubernetes control plane. This provides cost flexibility, hardware diversity, and vendor-specific optimization. If you need specific hardware from a vendor that is ideal for your use case, simply add your node, and you’re ready to go.

You won’t need to create or manage clusters with that vendor separately.

If Private Nodes sound exciting, we have an even more powerful lineup of features coming soon. The latest addition to our roadmap is Auto Nodes, our EKS-style Auto Mode, which offers intelligent, automated node management right within the vCluster ecosystem.

Auto Nodes will embed a managed Karpenter for automatic scaling and bin-packing. Virtual clusters will create NodeClaims on demand and return underutilized nodes to shared pools, improving utilization and management across different node classes.

Register on our launch site so you don’t miss the updates.

In a landscape with many Kubernetes services, adopting a tool that grows to cover your use cases is vital as your organization expands. Private Nodes bring that flexibility to the vCluster ecosystem: with a few clicks, you can start with one form of multi-tenancy and move to highly isolated, regulated multi-tenancy without changing your overall architecture.

Private Nodes empower engineering teams, GPU cloud platforms, and SaaS providers to offer truly isolated, custom Kubernetes clusters without maintaining complex control plane infrastructure. This breakthrough enables organizations to deliver dedicated cluster benefits while preserving operational simplicity.

Whether building multi-tenant SaaS platforms, GPU cloud services, or managing enterprise workloads with strict compliance, Private Nodes bridge the gap between shared infrastructure limitations and full cluster management overhead.

Join our webinar to see Private Nodes in action.

Deploy your first virtual cluster today.