Introduction

Kubernetes makes it easy to deploy and scale applications, but as organizations grow, they need a way for multiple teams or customers to share the same infrastructure without stepping on each other’s toes.

That’s where multi-tenancy comes in.

Multi-tenancy means several independent users or groups (tenants) operate within a shared cluster while maintaining logical isolation. Done right, it enables:

- Better resource efficiency

- Consistent security and governance

- Faster environment provisioning

The problem?

Kubernetes wasn’t built with strong multi-tenant isolation in mind.

Namespaces provide basic separation but weak boundaries.

Running a dedicated cluster per tenant solves isolation but adds cost and operational overhead.

This guide explores how multi-tenancy evolved in Kubernetes, the limits of existing models, and how vCluster bridges the gap, combining control-plane isolation with shared infrastructure for secure, efficient multi-tenant platforms.

The Evolution of Multi-Tenancy

Kubernetes has changed how organizations build and share infrastructure. But the idea of multi-tenancy, allowing multiple teams or applications to share the same resources, has evolved through several stages, each with its own benefits and trade-offs.

1. From Physical Isolation to Logical Sharing

Before containers and Kubernetes, teams managed workloads on dedicated servers or virtual machines.

This offered strong isolation but came with high cost and limited scalability.

Each environment had to be configured manually, and capacity was often underutilized.

The move toward Kubernetes brought logical sharing instead of physical separation.

Namespaces became the first mechanism to allow multiple teams or environments to coexist inside a single cluster.

This simplified resource sharing and made it possible to run hundreds of workloads efficiently on shared infrastructure.

However, this model also meant that all tenants shared the same control plane: the same API server, scheduler, and controllers.

While it worked for small-scale setups, it created challenges when teams needed autonomy or stronger security boundaries.

2. Emergence of Dedicated Clusters

To gain stronger separation, many organizations moved from namespace-based setups to dedicated clusters.

In this model, each team or customer receives their own Kubernetes cluster with a fully isolated control plane.

This improves security, governance, and stability, but introduces high operational overhead.

Each cluster needs to be created, upgraded, and monitored individually, leading to what’s often called “cluster sprawl”.

While this approach guarantees the highest level of isolation, it significantly increases management complexity and cost, especially when scaling to hundreds of teams or customers.

3. Virtual Clusters as the Middle Ground

To bridge the gap between shared clusters and dedicated clusters, the concept of virtual clusters emerged.

A virtual cluster provides each tenant with its own Kubernetes control plane while sharing the same underlying data plane (nodes and networking).

This setup combines the best of both worlds: strong isolation and efficient infrastructure utilization.

Virtual clusters make it possible to achieve the autonomy of dedicated clusters while avoiding the duplication of resources and operational overhead.

They represent a natural step forward in the evolution of Kubernetes multi-tenancy, providing better boundaries, improved scalability, and a simplified operational model for platform teams.

The Shift in Focus

The evolution from physical servers to virtual machines, to shared clusters, and now to virtual clusters reflects a single, ongoing trend:

The pursuit of balance between isolation and efficiency.

Kubernetes multi-tenancy is no longer about whether teams share resources; it’s about how they share them safely, predictably, and at scale.

The Multi-Tenancy Problem Space

Kubernetes was designed as a shared system, one where multiple applications or users could deploy and scale workloads on the same infrastructure.

However, as organizations began to use Kubernetes for larger and more complex platforms, new challenges appeared around isolation, security, and governance.

Multi-tenancy makes efficient use of resources possible, but only if the boundaries between tenants are carefully defined and enforced.

1. Weak Control Plane Isolation

In most shared clusters, all tenants use the same Kubernetes control plane, meaning the same API server, scheduler, and controllers.

This introduces a single point of failure.

If one tenant installs a misconfigured Custom Resource Definition (CRD) or controller, it can affect every other tenant in the cluster.

Namespaces offer logical isolation, but cluster-scoped resources such as CRDs, admission webhooks, and ClusterRoles sit outside any namespace and are shared by every tenant, which can lead to cross-tenant interference or even outages.
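A toy model makes the shared-registry problem concrete. The sketch below is plain Python with no Kubernetes client; `SharedApiServer`, the tenant names, and the `widgets.example.com` CRD are hypothetical. It assumes `kubectl apply`-style last-writer-wins updates and ignores the extra validation a real API server performs, but it captures the key point: CRDs are cluster-scoped, so two tenants that pick the same name are editing the same object.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SharedApiServer:
    """Toy model of the single CRD registry that every tenant in a cluster shares."""

    # CRDs are cluster-scoped: the key is "<plural>.<group>" with no namespace component.
    crds: dict = field(default_factory=dict)

    def apply_crd(self, tenant: str, name: str, schema: dict) -> Optional[str]:
        """Apply a CRD with last-writer-wins semantics.

        Returns the tenant whose definition was replaced, or None if nothing
        belonging to another tenant was overwritten.
        """
        previous = self.crds.get(name)
        self.crds[name] = {"owner": tenant, "schema": schema}
        if previous is not None and previous["owner"] != tenant:
            return previous["owner"]
        return None


api = SharedApiServer()
api.apply_crd("team-a", "widgets.example.com", {"size": "integer"})
# team-b ships an unrelated operator that happens to use the same CRD name.
replaced = api.apply_crd("team-b", "widgets.example.com", {"color": "string"})
print(replaced)  # team-a
print(api.crds["widgets.example.com"]["schema"])  # {'color': 'string'}
```

Nothing in the model, or in a namespace-only cluster, scopes `widgets.example.com` to one tenant, so team-a’s objects are now served under team-b’s schema.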

2. Security and Access Management

Shared clusters often struggle with balancing tenant access and cluster-wide security.

Kubernetes Role-Based Access Control (RBAC) helps define permissions within namespaces, but it doesn’t fully prevent escalation risks.

If a tenant gains higher privileges or misconfigures roles, they might inadvertently access resources belonging to others.

This risk becomes more visible as the number of teams, applications, or environments increases, particularly when CRDs, admission webhooks, or cluster-scoped controllers are involved.
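One practical defense is to audit RBAC objects for the patterns that most often lead to cross-tenant access. The function below is a minimal sketch over plain dicts shaped like Kubernetes RBAC manifests (`subjects`, `rules`, `verbs`, `resources`); `audit_rbac` and the example objects are hypothetical. It flags ClusterRoleBindings that bind a tenant’s subjects cluster-wide, and rules that grant the `escalate`, `bind`, or `impersonate` verbs or wildcards, which can let a subject obtain more access than it was given.

```python
# Verbs that allow a subject to obtain permissions beyond the ones it already holds.
ESCALATION_VERBS = {"escalate", "bind", "impersonate"}


def audit_rbac(objects: list, tenant_subjects: set) -> list:
    """Return human-readable findings for risky RBAC objects."""
    findings = []
    for obj in objects:
        kind = obj["kind"]
        name = obj["metadata"]["name"]
        if kind == "ClusterRoleBinding":
            # A ClusterRoleBinding applies in every namespace, including other tenants'.
            for subject in obj.get("subjects", []):
                if subject["name"] in tenant_subjects:
                    findings.append(f"{kind}/{name}: binds tenant subject {subject['name']} cluster-wide")
        elif kind in ("Role", "ClusterRole"):
            for rule in obj.get("rules", []):
                verbs = set(rule.get("verbs", []))
                risky = sorted(verbs & ESCALATION_VERBS)
                if risky:
                    findings.append(f"{kind}/{name}: grants escalation verbs {risky}")
                if "*" in verbs or "*" in rule.get("resources", []):
                    findings.append(f"{kind}/{name}: uses wildcard verbs or resources")
    return findings


example = [
    {"kind": "ClusterRoleBinding", "metadata": {"name": "team-a-view"},
     "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "ClusterRole", "name": "view"},
     "subjects": [{"kind": "Group", "name": "team-a"}]},
    {"kind": "Role", "metadata": {"name": "deployer", "namespace": "team-a"},
     "rules": [{"apiGroups": ["apps"], "resources": ["deployments"], "verbs": ["get", "list", "update"]}]},
    {"kind": "Role", "metadata": {"name": "role-binder", "namespace": "team-a"},
     "rules": [{"apiGroups": ["rbac.authorization.k8s.io"], "resources": ["roles"], "verbs": ["bind"]}]},
]
for finding in audit_rbac(example, {"team-a"}):
    print(finding)
```

The first finding is the subtle one: binding the built-in `view` ClusterRole through a ClusterRoleBinding lets team-a read most objects in every namespace, not just its own.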

3. Resource Contention and Fairness

Another common issue is resource contention.

When multiple tenants share nodes, a single workload can consume more CPU, memory, or storage than expected, impacting performance for others.

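Resource quotas and limits cap what each namespace can request, but they don’t guarantee fair access to a node’s actual capacity: the scheduler places pods by their requests, so tenants whose limits exceed their requests still compete for the same spare CPU and memory.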

This can result in one tenant’s activity reducing performance or availability for the rest, a scenario often described as the “noisy neighbor” problem.
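The arithmetic behind the noisy-neighbor problem is simple. Because the scheduler places pods by their requests, two tenants can each stay inside their own quota while the sum of their limits exceeds what the node can deliver. The sketch below uses a hypothetical `Pod` dataclass and made-up numbers purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    tenant: str
    cpu_request: float  # cores the scheduler reserves for the pod
    cpu_limit: float    # cores the pod may burst up to


def within_quota(pods: list, tenant: str, quota_limits_cpu: float) -> bool:
    """Per-namespace check, similar to a ResourceQuota on limits.cpu."""
    return sum(p.cpu_limit for p in pods if p.tenant == tenant) <= quota_limits_cpu


def node_cpu_report(pods: list, allocatable_cpu: float) -> dict:
    """Compare what one node promised (requests) with what it may be asked for (limits)."""
    requested = sum(p.cpu_request for p in pods)
    limit_total = sum(p.cpu_limit for p in pods)
    return {
        "requested": requested,
        "limit_total": limit_total,
        # A ratio above 1.0 means simultaneous bursts cannot all be satisfied.
        "limit_overcommit": limit_total / allocatable_cpu,
        # Cores the tenants would contend for if everyone burst at once.
        "contested_cores": max(0, limit_total - allocatable_cpu),
    }


# Hypothetical 8-core node running one pod from each of two tenants.
pods = [Pod("team-a", 2, 6), Pod("team-b", 2, 6)]
print(within_quota(pods, "team-a", 6), within_quota(pods, "team-b", 6))  # True True
print(node_cpu_report(pods, 8))
```

Both tenants pass their quota check, yet the node has promised 12 cores of limits on 8 cores of capacity. Under contention each container keeps roughly its requested share, and the capacity above requests is split among whichever containers are bursting, so one tenant’s spike directly shrinks the other’s headroom.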

In GPU-heavy environments, such as AI or ML workloads on bare metal clusters, this problem becomes more critical.

GPU scheduling and sharing are hard to manage without compromising performance or tenant boundaries.
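Part of what makes GPUs harder is that they are extended resources: by default the scheduler allocates whole devices rather than fractions. The sketch below builds, as plain dicts, a ResourceQuota capping a tenant’s GPUs and a pod requesting them. It assumes a device plugin that advertises `nvidia.com/gpu` (such as NVIDIA’s); the namespace, pod, and image names are hypothetical.

```python
import json


def gpu_quota(namespace: str, max_gpus: int) -> dict:
    """ResourceQuota capping the total GPUs a tenant namespace can request."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "gpu-quota", "namespace": namespace},
        # Quotas on extended resources use the "requests." prefix.
        "spec": {"hard": {"requests.nvidia.com/gpu": str(max_gpus)}},
    }


def gpu_pod(namespace: str, name: str, image: str, gpus: int) -> dict:
    """Pod asking for whole GPUs through the device plugin's extended resource."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "containers": [{
                "name": "trainer",
                "image": image,
                # Extended resources go under limits; the request defaults to the same
                # value, and Kubernetes does not overcommit them.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


manifests = [
    gpu_quota("team-ml", 4),
    gpu_pod("team-ml", "train-1", "registry.example.com/trainer:latest", 2),
]
print(json.dumps(manifests, indent=2))
```

The quota limits how many GPUs team-ml holds at once, but it says nothing about which tenants land on the same node or share a device when GPU sharing is enabled in the device plugin, which is where tenant boundaries get harder to hold.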

4. Operational Complexity

As more tenants join a shared cluster, operational complexity increases.

Platform teams need to manage namespaces, resource policies, network configurations, and access control for each tenant.

Over time, this creates administrative bottlenecks, as even small configuration errors can impact multiple tenants simultaneously.

For example, a team introducing a CRD or updating an admission webhook may unknowingly break workloads for others.

Ensuring consistent governance across all tenants becomes increasingly difficult.

5. Cost and Scalability Trade-Offs

Running a separate cluster for every team or customer improves isolation, but it also multiplies operational and infrastructure costs.

Each cluster has to maintain its own control plane components: API server, scheduler, etcd, and controllers.

This leads to cluster sprawl, where hundreds of nearly identical clusters must each be upgraded, secured, and monitored.

On the other hand, shared clusters reduce cost but increase risk.

Without the right isolation mechanisms, one team’s changes can affect every other team on the cluster.

The Core Challenge

These issues highlight the core challenge of Kubernetes multi-tenancy:

How do you share a cluster efficiently while ensuring each tenant remains secure, autonomous, and isolated?

Existing models like namespaces and dedicated clusters only solve parts of the problem.

Namespaces are efficient but weakly isolated; dedicated clusters are secure but expensive and hard to manage.

This ongoing trade-off is what drives the search for better, more scalable models of multi-tenancy, and sets the stage for control-plane isolation as the next logical step in Kubernetes evolution.

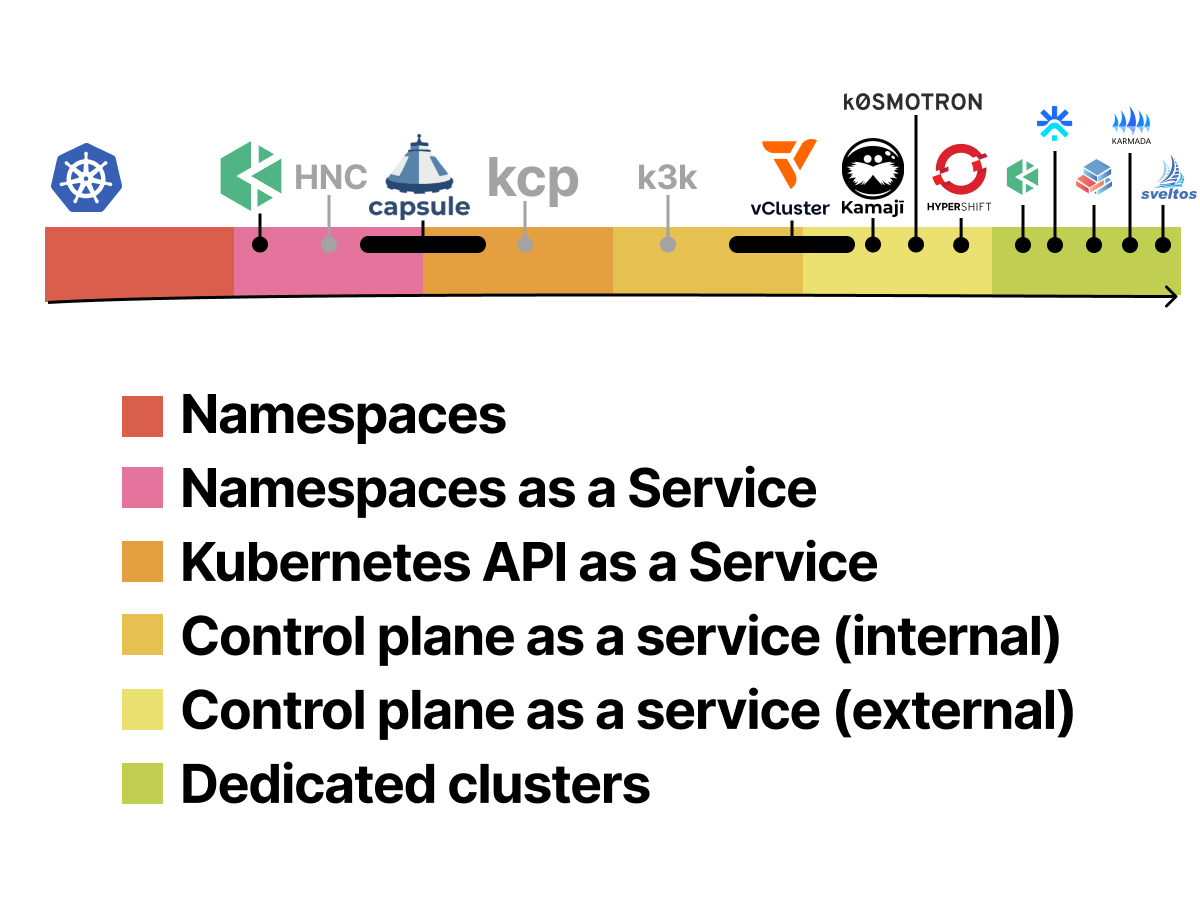

Existing Tenancy Models and Their Trade-Offs

There is no single way to implement multi-tenancy in Kubernetes.

Over time, several tenancy models have emerged, each with its own trade-offs in isolation, management, and cost.

Choosing the right model depends on how much control, autonomy, and efficiency an organization needs.

1. Namespace-Based Multi-Tenancy

Namespaces are the simplest and most common approach to multi-tenancy in Kubernetes. Each tenant operates in a separate namespace within the same cluster, sharing the same API server, scheduler, and underlying infrastructure.

This model provides lightweight isolation using Kubernetes primitives like RBAC, resource quotas, and network policies.

It’s easy to set up, efficient, and works well for smaller teams or internal environments where full isolation isn’t required.

However, because tenants share the same control plane and cluster-scoped resources, this model has clear limitations:

- Tenants cannot install CRDs or controllers independently.

- A misconfigured object or operator can affect the entire cluster.

- Security boundaries rely heavily on strict RBAC and policy management.
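In practice, the namespace model comes down to a handful of objects created per tenant. The sketch below generates them as plain dicts: a Namespace, a RoleBinding that grants the built-in `edit` ClusterRole only inside that namespace, a ResourceQuota, and a NetworkPolicy that admits traffic only from the same namespace. The `tenant-<name>` naming, group name, and quota values are hypothetical, and the NetworkPolicy only takes effect with a CNI plugin that enforces network policies.

```python
import json


def tenant_manifests(tenant: str, group: str, cpu: str, memory: str) -> list:
    """Per-tenant objects for namespace-based multi-tenancy (hypothetical naming)."""
    namespace = f"tenant-{tenant}"
    return [
        {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": namespace}},
        {
            # Binding a ClusterRole through a RoleBinding scopes its permissions
            # to this one namespace.
            "apiVersion": "rbac.authorization.k8s.io/v1",
            "kind": "RoleBinding",
            "metadata": {"name": f"{tenant}-edit", "namespace": namespace},
            "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "ClusterRole", "name": "edit"},
            "subjects": [{"apiGroup": "rbac.authorization.k8s.io", "kind": "Group", "name": group}],
        },
        {
            "apiVersion": "v1",
            "kind": "ResourceQuota",
            "metadata": {"name": "compute", "namespace": namespace},
            "spec": {"hard": {
                "requests.cpu": cpu, "limits.cpu": cpu,
                "requests.memory": memory, "limits.memory": memory,
            }},
        },
        {
            # Selects every pod in the namespace and admits ingress only from pods
            # in the same namespace; traffic from other namespaces is dropped.
            "apiVersion": "networking.k8s.io/v1",
            "kind": "NetworkPolicy",
            "metadata": {"name": "same-namespace-only", "namespace": namespace},
            "spec": {
                "podSelector": {},
                "policyTypes": ["Ingress"],
                "ingress": [{"from": [{"podSelector": {}}]}],
            },
        },
    ]


print(json.dumps(tenant_manifests("a", "team-a-devs", "4", "8Gi"), indent=2))
```

Note what is missing: none of these objects lets the tenant install a CRD or controller of its own, and none of them could scope one if it did. That still requires a cluster administrator.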

Best for: Development, testing, and smaller internal teams where speed and efficiency matter more than strong isolation.

2. Hierarchical Namespace Controller (HNC)

The Hierarchical Namespace Controller (HNC) extends the namespace model by allowing namespaces to form parent-child relationships.

It gives platform teams a structured way to delegate control, letting tenants manage sub-namespaces while enforcing policies from a higher level.

HNC improves delegation but doesn’t solve the shared control plane issue.

All tenants still operate under the same cluster API, meaning CRD and operator conflicts can still occur.
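HNC’s delegation model can be sketched in two parts. A tenant creates a `SubnamespaceAnchor` in its namespace to request a child namespace, and HNC copies policy objects (RBAC Roles and RoleBindings by default) from each namespace into its descendants. The propagation function below is a simplified model of that behavior, not HNC’s code, and the `hnc.x-k8s.io/v1alpha2` apiVersion reflects recent HNC releases as we understand them; the namespace and object names are hypothetical. CRDs never appear in the hierarchy: they stay cluster-scoped.

```python
from typing import Dict, Optional, Set


def subnamespace_anchor(parent: str, child: str) -> dict:
    """Object a tenant creates in `parent` to ask HNC for a child namespace."""
    return {
        "apiVersion": "hnc.x-k8s.io/v1alpha2",
        "kind": "SubnamespaceAnchor",
        "metadata": {"name": child, "namespace": parent},
    }


def effective_objects(
    parents: Dict[str, Optional[str]], local: Dict[str, Set[str]]
) -> Dict[str, Set[str]]:
    """Simplified propagation: a namespace sees its own objects plus its ancestors'.

    Assumes `parents` describes a tree (no cycles).
    """
    result = {}
    for namespace in parents:
        seen = set(local.get(namespace, set()))
        ancestor = parents[namespace]
        while ancestor is not None:
            seen |= local.get(ancestor, set())
            ancestor = parents[ancestor]
        result[namespace] = seen
    return result


hierarchy = {"org": None, "team-a": "org", "team-a-dev": "team-a"}
policies = {
    "org": {"RoleBinding/platform-auditors"},
    "team-a": {"Role/deployer", "RoleBinding/team-a-deployers"},
}
print(subnamespace_anchor("team-a", "team-a-dev"))
print(sorted(effective_objects(hierarchy, policies)["team-a-dev"]))
```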

Best for: Large organizations with nested team structures that need controlled delegation within a shared cluster.

3. Dedicated Cluster per Tenant

The cluster-per-tenant approach gives each tenant a completely separate Kubernetes cluster, with its own API server, etcd, and controllers.

This provides maximum isolation, as no control-plane components are shared.

While this model eliminates cross-tenant interference and simplifies security, it comes with significant trade-offs:

- Each cluster consumes dedicated compute and control-plane resources.

- Operations like upgrades, monitoring, and backups multiply across tenants.

- Infrastructure costs rise sharply as the number of clusters increases.

This model offers strong boundaries but introduces heavy management overhead, often referred to as “cluster sprawl.”

Best for: Highly regulated industries, external customer environments, or workloads requiring strict data isolation.

4. Virtual Clusters

To bridge the gap between lightweight namespaces and heavy dedicated clusters, the concept of virtual clusters emerged.

A virtual cluster provides each tenant with its own Kubernetes control plane (API server, scheduler, controllers) running inside a shared host cluster.

From the tenant’s perspective, it feels like a full Kubernetes cluster.

From the operator’s perspective, workloads from multiple virtual clusters share the same underlying node pool and networking.

This design achieves a balance between isolation and cost:

- Tenants can create and manage CRDs and controllers independently.

- Platform teams can maintain centralized governance and efficiency.

- Provisioning is fast, and infrastructure usage remains optimized.

Best for: Platforms that need strong logical isolation without duplicating infrastructure, such as SaaS platforms, large internal developer environments, or CI/CD systems.

Trade-Off Summary

Each model represents a trade-off among three key factors: isolation, cost, and management complexity.

Namespace-based models are easy but weakly isolated; dedicated clusters are secure but expensive; virtual clusters offer a middle ground that scales better in modern environments.

Each of these models offers a different balance of flexibility and control.

But as platforms grow and the number of tenants increases, traditional approaches quickly hit operational or cost limits, which is why the industry continues to shift toward control-plane isolation as the next standard for scalable multi-tenancy.


Why Traditional Solutions Fall Short

Most Kubernetes teams begin with either namespace-based isolation or dedicated clusters.

Both are proven models, but when multi-tenancy scales, their weaknesses become clear.

They either compromise isolation for efficiency or trade efficiency for isolation, leaving platform teams stuck managing complexity on one end or risk on the other.

1. Namespace Isolation: Lightweight but Risky

Namespaces are the easiest way to start with multi-tenancy, but they rely on logical separation only.

All tenants share the same API server, scheduler, and controllers.

This means that:

- Any CRD, admission webhook, or controller applied at the cluster level affects all tenants.

- A misconfiguration or bug in one tenant’s workload can impact others.

- Resource quotas help manage usage but cannot guarantee isolation or stability.

Namespaces work well for internal teams or smaller workloads but don’t scale when tenants need to run their own custom resources or operators.

In large environments, this shared control plane quickly becomes a single point of failure.

2. Dedicated Clusters: Secure but Expensive

Giving each tenant their own cluster solves isolation but replaces one problem with another: management overhead.

Every cluster comes with its own control plane components, requiring separate provisioning, upgrades, and monitoring.

This increases infrastructure cost and multiplies the operational effort.

As the number of clusters grows, so does the complexity:

- Keeping cluster configurations consistent becomes harder.

- Security and governance policies must be replicated everywhere.

- Maintaining networking, logging, and observability stacks across dozens or hundreds of clusters becomes a burden.

This approach ensures strong boundaries but creates what’s commonly referred to as “cluster sprawl.”

3. Virtual Machines: Legacy-Level Overhead

Some teams still rely on VM-based tenancy for isolation.

While this offers hardware-level separation, it sacrifices the speed and agility that containers and Kubernetes provide.

VMs are resource-heavy, slower to start, and harder to manage in dynamic cloud-native environments.

As multi-tenancy in Kubernetes evolved, virtualization at the infrastructure layer became less practical compared to container-native solutions.

4. The “Noisy Neighbor” Problem

Even with well-managed shared clusters, resource contention remains a persistent issue.

When tenants share the same nodes, one workload can monopolize CPU, memory, or network resources, degrading performance for others.

Kubernetes provides quotas and limits, but quotas are scoped to individual namespaces and cap requested totals; they don’t guarantee fair access to shared node capacity across tenants.

For GPU workloads on bare metal clusters, this problem becomes even more visible.

Without isolation at the control-plane or node level, performance drops and debugging becomes difficult.

5. Operational and Governance Challenges

Traditional models make it difficult to maintain consistent governance as clusters scale.

Shared environments require fine-grained RBAC policies, network restrictions, and strict coordination to prevent cross-tenant conflicts.

Dedicated clusters, on the other hand, fragment operations, forcing platform teams to maintain separate monitoring and policy enforcement layers for each cluster.

Neither approach provides the right balance between autonomy, visibility, and control.

The Core Limitation

Ultimately, traditional approaches to multi-tenancy fail because they all share a common limitation: a single control plane or too many of them.

- Namespace-based models rely on one shared control plane, creating risk and dependency.

- Dedicated clusters replicate control planes endlessly, creating cost and operational drag.

This is the trade-off Kubernetes teams have been trying to escape:

how to provide tenants with their own control plane without multiplying infrastructure.

That challenge sets the stage for the next phase in multi-tenancy: control-plane isolation with shared infrastructure, which is exactly where virtual cluster architectures redefine what’s possible in Kubernetes.

vCluster, A Better Multi-Tenancy Model

Traditional Kubernetes tenancy models force teams to choose between simplicity and isolation.

Namespaces are fast and efficient but weakly isolated, while dedicated clusters provide strong boundaries at the cost of scale and manageability.

To bridge this gap, a new approach emerged, one that isolates control planes without duplicating infrastructure: vCluster.

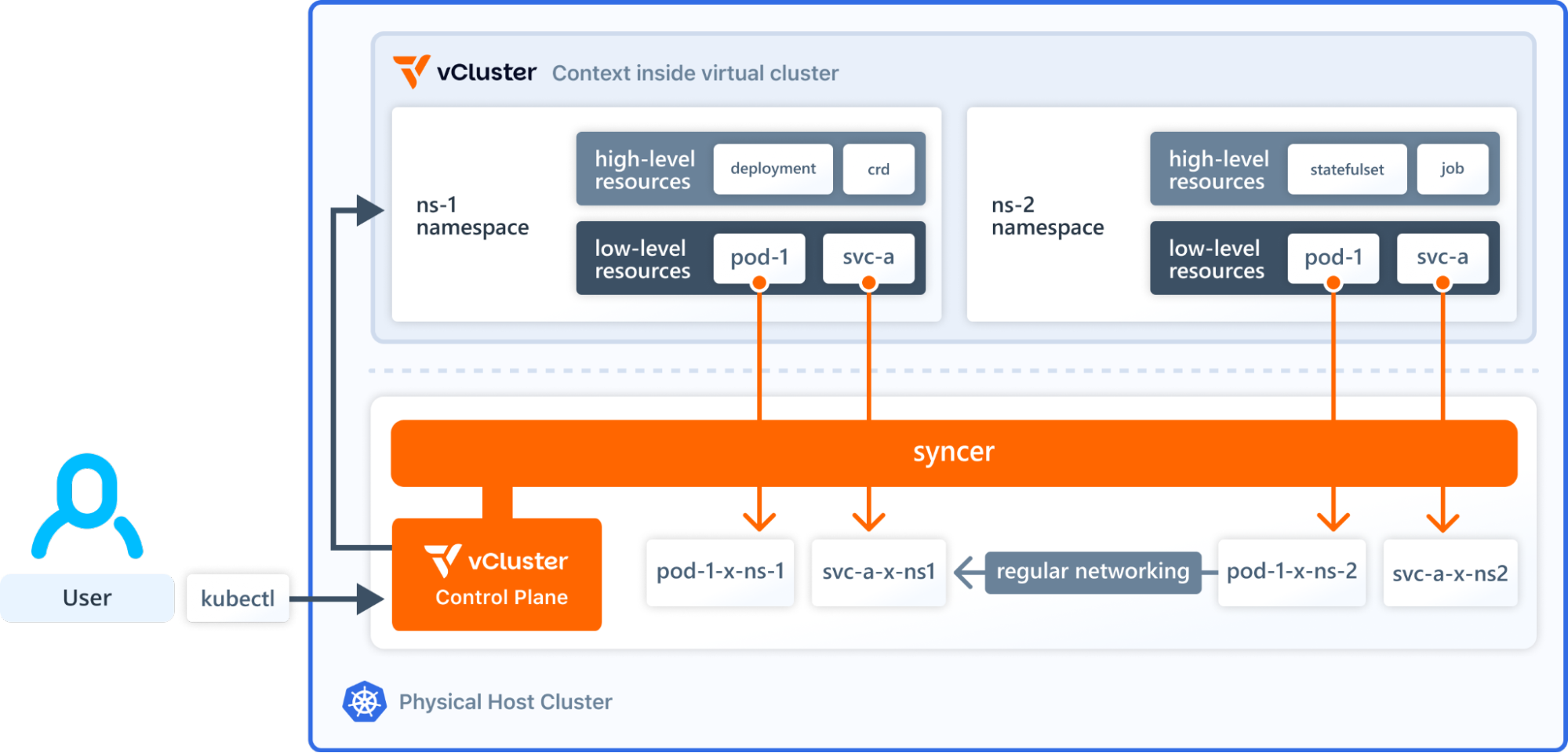

1. What Is vCluster

vCluster is a lightweight Kubernetes distribution that lets you create fully functional virtual clusters inside a host Kubernetes cluster.

Each vCluster runs its own API server, controllers, and data store (and, optionally, its own scheduler), giving tenants the experience and autonomy of a standalone cluster while still sharing the same underlying compute and networking resources.

From the tenant’s perspective, it behaves exactly like a standard Kubernetes cluster.

From the platform team’s perspective, all workloads from multiple vClusters run efficiently on the host cluster’s nodes, maximizing resource utilization while maintaining strong logical separation.

This design enables teams to run hundreds or even thousands of isolated environments without the overhead of managing separate physical clusters.

2. How It Works

When a vCluster is created, it launches a new control plane (API server, controller manager, data store, and a syncer) as pods inside the host cluster. By default, the host cluster’s scheduler places tenant workloads, although vCluster can optionally run its own scheduler.

Workloads deployed into that vCluster are automatically synced to the host cluster, where they run alongside workloads from other tenants, all under Kubernetes-native scheduling and isolation policies.
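A vCluster’s synced objects from all of its virtual namespaces end up in a host namespace, so the syncer has to rename them: an `nginx` pod in the virtual `default` namespace and another in `staging` would otherwise collide. The sketch below shows one way to do that. The `<name>-x-<namespace>-x-<vcluster>` pattern resembles names commonly seen on hosts running vCluster, but treat the exact format, the 63-character cap, and the hash-based truncation here as assumptions rather than vCluster’s implementation.

```python
import hashlib

# Many Kubernetes names (Service names, label values) are capped at 63 characters.
MAX_NAME_LENGTH = 63
HASH_LENGTH = 10


def host_name(virtual_name: str, virtual_namespace: str, vcluster: str) -> str:
    """Translate a virtual object's name into a unique name in the host namespace."""
    name = f"{virtual_name}-x-{virtual_namespace}-x-{vcluster}"
    if len(name) <= MAX_NAME_LENGTH:
        return name
    # Too long: truncate and append a hash of the full name so results stay unique.
    digest = hashlib.sha256(name.encode("utf-8")).hexdigest()[:HASH_LENGTH]
    return f"{name[:MAX_NAME_LENGTH - HASH_LENGTH - 1]}-{digest}"


print(host_name("nginx", "default", "team-a"))  # nginx-x-default-x-team-a
print(host_name("nginx", "staging", "team-a"))  # nginx-x-staging-x-team-a
```

A real syncer also needs the reverse mapping (host name back to virtual name and namespace) so that status changes flow back to the tenant; that bookkeeping is omitted here.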

Tenants can:

- Deploy workloads using kubectl or Helm like in any regular cluster

- Install custom CRDs and controllers safely, without affecting others

- Configure RBAC, namespaces, and quotas within their own vCluster independently

Meanwhile, platform teams retain control from the host cluster, defining global security, resource policies, and cost boundaries.
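One consequence of this design is that a tenant’s virtual namespaces all collapse into a single host namespace, so one ordinary ResourceQuota there bounds the entire vCluster, however many namespaces the tenant creates inside it. The sketch below models that aggregation; the host namespace `vcluster-team-a`, the pod dicts, and the quota values are hypothetical.

```python
import json


def host_quota(host_namespace: str, cpu: str, memory: str) -> dict:
    """A standard ResourceQuota, applied by the platform team on the host cluster."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "tenant-total", "namespace": host_namespace},
        "spec": {"hard": {"requests.cpu": cpu, "requests.memory": memory}},
    }


def tenant_usage(virtual_pods: list) -> dict:
    """Sum requests across every virtual namespace; they all map to one host namespace."""
    return {
        "cpu": sum(p["cpu"] for p in virtual_pods),
        "memory_gi": sum(p["memory_gi"] for p in virtual_pods),
    }


def fits(usage: dict, hard: dict) -> bool:
    return all(usage[key] <= limit for key, limit in hard.items())


pods = [
    {"virtual_namespace": "frontend", "cpu": 0.5, "memory_gi": 1},
    {"virtual_namespace": "backend", "cpu": 1.5, "memory_gi": 2},
    {"virtual_namespace": "batch", "cpu": 1.0, "memory_gi": 1},
]
print(json.dumps(host_quota("vcluster-team-a", "4", "8Gi"), indent=2))
print(tenant_usage(pods), fits(tenant_usage(pods), {"cpu": 4, "memory_gi": 8}))
```

In a real cluster, enforcement happens on the host side when the synced pod is created: as we understand it, a pod that would push the host namespace past its quota is rejected by the host API server, so the tenant’s workload does not start.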

3. Why It’s Different

Unlike namespaces or shared clusters, vCluster separates the control plane, ensuring that:

- Tenants operate in isolated Kubernetes environments

- CRD conflicts, admission webhooks, or misconfigured controllers stay contained

- Platform admins maintain governance without managing dozens of full clusters

This provides the best of both worlds: strong isolation and shared efficiency.

4. Efficiency at Scale

vCluster’s architecture allows massive scale without cluster sprawl.

Creating a new vCluster takes seconds, not minutes.

All vClusters share the host cluster’s nodes and CNI networking, which dramatically reduces infrastructure overhead.

In environments like CI/CD pipelines, SaaS platforms, or internal developer portals, vCluster enables teams to spin up ephemeral or persistent environments on demand, at a fraction of the cost of dedicated clusters.

5. Real-World Scenarios

Across use cases, vCluster simplifies how teams manage Kubernetes at scale:

- Platform engineering: isolate teams or projects in their own vClusters

- SaaS providers: assign a vCluster per customer for strong separation

- Development pipelines: create ephemeral clusters for testing and integration

- Bare metal and GPU clusters: securely share hardware without losing workload control

This model brings flexibility without fragmentation, giving developers full control within their boundaries while maintaining centralized governance at the platform level.


Key Benefits of Multi-Tenancy with vCluster

vCluster offers a new way to manage Kubernetes multi-tenancy, one that combines strong isolation, efficient resource sharing, and simplified operations.

By running virtual Kubernetes control planes inside a single host cluster, platform teams can give every tenant full autonomy while keeping costs and complexity under control.

The following key benefits, highlighted across vCluster’s architecture and use cases, make it a practical foundation for modern multi-tenant platforms.

1. True Control Plane Isolation

Unlike namespace-based setups, where tenants share a single API server and scheduler, vCluster gives each tenant a fully isolated control plane.

This prevents CRD or controller conflicts and ensures that one tenant’s control-plane configuration changes don’t impact others.

Each vCluster behaves like an independent Kubernetes cluster, allowing teams or customers to define custom policies, install CRDs, and operate safely within their own environment.

2. Shared Infrastructure Efficiency

All vClusters run within the same host cluster and share its nodes, network, and storage.

This means infrastructure resources are used efficiently without compromising boundaries between tenants.

Platform teams avoid the high overhead of managing dozens or hundreds of separate clusters while maintaining strong logical separation.

This shared model is particularly effective in large-scale setups such as SaaS platforms or internal developer portals, where each tenant can have their own environment without inflating costs.

3. Full Kubernetes Compatibility

Every vCluster runs a standard Kubernetes distribution, meaning tenants can use kubectl, Helm, and existing automation pipelines without modification.

Workflows, manifests, and deployment practices remain consistent, whether teams are using a dedicated cluster or a vCluster.

This compatibility eliminates friction and ensures developers can work in familiar environments while platform teams maintain unified governance underneath.

4. Secure and Autonomous Tenancy

With their own control plane, tenants gain autonomy: they can manage RBAC, namespaces, controllers, and deployments independently.

At the same time, security boundaries remain intact, since cross-tenant access is prevented at the control-plane level.

Platform administrators can define global policies from the host cluster while allowing teams full control inside their vClusters.

5. Reduced Operational Overhead

vCluster simplifies cluster lifecycle management.

New clusters can be created in seconds, updated consistently, and destroyed cleanly without affecting the host cluster or other tenants.

This eliminates repetitive maintenance tasks associated with traditional multi-cluster management.

Platform teams can focus on governance and automation instead of cluster creation and upgrades, making large-scale Kubernetes environments more sustainable.

6. Improved Performance Isolation

In multi-tenant environments, “noisy neighbor” effects are a major challenge.

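vCluster reduces this risk at the control-plane level: each tenant’s API server and controllers handle only that tenant’s objects, so one tenant’s heavy API traffic or controller churn doesn’t slow down the others.

Node-level contention is a separate concern. For heavy or specialized workloads, such as GPU-based ML jobs, performance stability still depends on the quotas, limits, and node placement that platform teams configure on the host cluster.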

7. Centralized Governance and Visibility

Even though each vCluster operates independently, all are managed through a single host cluster.

This allows platform teams to apply unified policies, monitor usage, and manage cost allocation at scale.

Integrations with standard observability tools provide visibility into performance and resource consumption across tenants, without breaking isolation.

8. Cost Efficiency at Scale

By combining shared infrastructure with virtualized control planes, vCluster delivers the isolation benefits of dedicated clusters without their cost.

Clusters can be spun up quickly, used temporarily (for CI/CD or testing), and removed when no longer needed, ensuring resources are only consumed when required.

This model provides both financial and operational scalability, critical for modern Kubernetes platforms where efficiency directly affects velocity.

vCluster gives organizations a practical way to scale Kubernetes across teams, projects, or customers without the usual trade-offs.

It achieves this by unifying three goals once thought incompatible:

- Isolation: every tenant has their own control plane

- Efficiency: workloads share underlying infrastructure

- Autonomy: teams work freely within defined boundaries

In short, vCluster turns Kubernetes into a truly multi-tenant platform: secure, efficient, and built for scale.

Common Multi-Tenant Architectures with vCluster

vCluster supports a wide range of architectures designed to balance isolation, scalability, and infrastructure efficiency.

Whether teams are developing software, operating SaaS platforms, or managing GPU-intensive workloads, vCluster provides the flexibility to adapt Kubernetes multi-tenancy to different operational models.

Below are the most common architectures described across vCluster’s platform use cases.

1. Team-Based Environments

One of the simplest and most popular approaches is team-based tenancy.

Each engineering team, business unit, or internal project receives its own vCluster within a shared host cluster.

This allows teams to experiment, deploy, and manage workloads independently, without risking conflicts or requiring separate clusters.

Because each team’s vCluster comes with its own control plane, teams can install CRDs, operators, and custom controllers without impacting others.

Meanwhile, the platform team maintains governance and visibility through the shared host cluster.

Used for:

Development and staging environments, internal DevOps platforms, and self-service infrastructure for engineering teams.

2. SaaS Customer Isolation

For SaaS providers, strong tenant boundaries are a core requirement.

vCluster makes it possible to assign each customer their own Kubernetes control plane, delivering true isolation for customer workloads, data, and configurations while keeping infrastructure shared and efficient.

This approach combines the security benefits of dedicated clusters with the scalability of a shared platform.

It also simplifies compliance, as customer environments can be governed and monitored independently without adding management overhead.

Used for:

Multi-tenant SaaS applications, customer-specific environments, and platforms requiring regulatory compliance or data separation.

3. CI/CD and Ephemeral Environments

vCluster’s fast provisioning capabilities make it ideal for CI/CD pipelines and ephemeral clusters.

Clusters can be created in seconds for each build, pull request, or integration test, and automatically destroyed after use.

Because vClusters are lightweight and isolated, they prevent conflicts between concurrent builds and ensure clean, reproducible environments every time.

This model reduces cost and improves pipeline reliability by providing true environment independence for continuous testing and deployment.

Used for:

Automated testing, on-demand preview environments, and pipeline-based infrastructure provisioning.
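A per-pull-request lifecycle can be sketched in a few lines. The snippet below derives a valid cluster name from the repository and PR number, then builds the `vcluster create` and `vcluster delete` commands a CI job would run around its tests, using the cluster name as its host namespace so each PR stays separate. The naming scheme is hypothetical and the commands use only the `--namespace` flag; check `vcluster --help` for the options your version and pipeline need. By default it prints the commands instead of running them.

```python
import re
import subprocess


def vcluster_name_for_pr(repo: str, pr_number: int) -> str:
    """Build a DNS-1123 label: lowercase alphanumerics and '-', at most 63 characters."""
    raw = f"pr-{pr_number}-{repo}".lower()
    label = re.sub(r"[^a-z0-9-]+", "-", raw).strip("-")
    return label[:63].rstrip("-")


def lifecycle(name: str, namespace: str) -> list:
    """Commands that create an isolated cluster, then tear it down after the tests."""
    return [
        ["vcluster", "create", name, "--namespace", namespace],
        # ... deploy the app and run the test suite against the virtual cluster here ...
        ["vcluster", "delete", name, "--namespace", namespace],
    ]


def run(commands: list, dry_run: bool = True) -> None:
    for command in commands:
        if dry_run:
            print(" ".join(command))
        else:
            subprocess.run(command, check=True)


if __name__ == "__main__":
    name = vcluster_name_for_pr("Web_App", 42)
    run(lifecycle(name, name))
```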

4. Shared Platform Stack

Many organizations adopt a shared platform stack architecture, where core services such as monitoring, ingress, and logging run in the host cluster, while workloads and controllers operate inside individual vClusters.

This design creates a clean separation of responsibilities:

- The host cluster provides centralized infrastructure components (e.g., Prometheus, Grafana, ingress gateways).

- Each vCluster hosts application-specific logic, workloads, and team-managed configurations.

It allows teams to innovate freely without duplicating shared services, combining autonomy for developers with operational simplicity for platform teams.

Used for:

Internal platform engineering setups, centralized DevOps toolchains, and hybrid infrastructure environments.
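Which objects cross from a vCluster to the host is a configuration decision. For a shared ingress controller, tenants’ Ingress objects have to be synced to the host so the host’s controller can serve them. The snippet below writes that setting as a `vcluster.yaml` fragment, generated from a Python dict as JSON (a subset of YAML 1.2). The key path `sync.toHost.ingresses.enabled` follows the vCluster 0.20+ configuration format as we understand it; treat it as an assumption and check the `vcluster.yaml` reference for your version.

```python
import json

# Hypothetical vcluster.yaml content for a tenant that relies on the host's ingress controller.
vcluster_config = {
    "sync": {
        "toHost": {
            # Copy Ingress objects created inside the vCluster to the host namespace,
            # where the shared ingress controller running on the host picks them up.
            "ingresses": {"enabled": True},
        },
    },
}

# JSON output is also valid YAML, so it can be saved as vcluster.yaml.
print(json.dumps(vcluster_config, indent=2))
```

Shared monitoring usually needs no equivalent setting: synced pods run on the host’s nodes, so a host-level Prometheus can discover them like any other pod.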

5. Bare Metal and GPU Workloads

In environments running on bare metal with GPUs, vCluster helps solve one of the hardest problems in Kubernetes multi-tenancy: safely sharing hardware acceleration resources.

Traditional namespace isolation cannot guarantee GPU security or workload performance when multiple tenants share the same cluster.

By isolating control planes, vCluster allows GPU workloads from different tenants to be managed independently while sharing the underlying hardware efficiently.

Combined with GPU quotas and node placement on the host cluster, this helps deliver predictable performance, simpler scheduling, and clean separation between AI or ML workloads.

Used for:

Machine learning, AI, and data processing pipelines requiring GPU access across multiple teams or tenants.

Each of these architectures demonstrates how vCluster enables customizable multi-tenancy:

- Lightweight and fast for short-lived environments

- Secure and isolated for regulated or customer-facing workloads

- Scalable and centralized for large internal platforms

By aligning control-plane isolation with shared infrastructure, vCluster gives platform teams a consistent foundation to run any mix of tenants, from developers to enterprise customers, on one unified Kubernetes ecosystem.

How vCluster Solves What Others Don’t

Every Kubernetes multi-tenancy model comes with trade-offs.

Namespace-based setups are lightweight but risky; dedicated clusters offer strong isolation but are expensive and complex to manage.

vCluster addresses these trade-offs by providing control-plane isolation on shared infrastructure, bringing together the best aspects of both approaches.

1. Control Plane Isolation Without Cluster Sprawl

One of the biggest challenges in Kubernetes multi-tenancy is the shared control plane.

In namespace-based clusters, tenants all rely on the same API server and controllers, which means CRD conflicts, admission webhooks, or misconfigured operators can affect everyone.

vCluster changes that.

Each tenant gets a fully functional control plane (their own API server, controllers, and data store) running inside the host cluster.

From the tenant’s point of view, it behaves exactly like a dedicated cluster, but the infrastructure is shared underneath.

This isolates cluster logic while keeping infrastructure simple and cost-effective.

2. Safe Use of Custom Resources and Controllers

Traditional namespace models make it risky for tenants to install CRDs or custom controllers because they’re cluster-scoped and can interfere with others.

With vCluster, each virtual cluster can safely extend its own Kubernetes API.

Tenants can register CRDs, deploy admission webhooks, and run controllers without affecting anyone else in the host cluster.

This is especially valuable for platform teams supporting multiple development groups or customers who each need to manage their own operators or integrations independently.
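Tenant CRDs and webhooks stay contained because the syncer copies only a limited set of low-level kinds to the host; everything else lives solely in the virtual cluster’s own API server and data store. The sketch below models that routing decision with a hypothetical allowlist. vCluster’s real defaults are configurable and differ by version (for example, ConfigMaps and Secrets may be synced only when a pod uses them), so read this as an illustration of the idea.

```python
# Hypothetical allowlist of kinds a syncer copies to the host cluster.
SYNCED_TO_HOST = {
    "Pod", "Service", "Endpoints", "ConfigMap", "Secret", "PersistentVolumeClaim",
}


def route(kind: str) -> str:
    """Where an object created inside a virtual cluster ends up."""
    if kind in SYNCED_TO_HOST:
        # Stored in the virtual cluster and mirrored so host nodes can actually run it.
        return "virtual+host"
    # CRDs, webhook configurations, RBAC, custom resources: never reach the host API.
    return "virtual-only"


def host_visible(kinds: list) -> list:
    return sorted(kind for kind in kinds if route(kind) == "virtual+host")


tenant_objects = [
    "CustomResourceDefinition",
    "ValidatingWebhookConfiguration",
    "ClusterRole",
    "Widget",  # hypothetical custom resource served by the tenant's CRD
    "Pod",
    "Service",
]
print(host_visible(tenant_objects))  # ['Pod', 'Service']
```

Pods created by a tenant’s operator are still synced, which is how custom controllers get their workloads scheduled on shared nodes while their APIs stay private to the vCluster.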

3. Simplified Multi-Tenant Operations

In dedicated-cluster models, managing upgrades, monitoring, and policy enforcement across dozens or hundreds of clusters creates operational overhead.

vCluster centralizes this by running multiple virtual clusters inside a single host cluster.

Platform teams can:

- Apply global security and resource policies once

- Monitor all vClusters from the host environment

- Automate provisioning, scaling, and deletion consistently

This dramatically simplifies multi-tenant management and eliminates repetitive cluster maintenance work.

4. Performance and Resource Efficiency

Because vClusters share the host cluster’s nodes, they avoid the resource duplication common in dedicated-cluster setups.

At the same time, each control plane operates independently, reducing the control-plane “noisy neighbor” effects (such as one tenant’s API load slowing everyone else’s requests) that occur in namespace-only environments.

vCluster allows organizations to optimize hardware utilization while maintaining reliable performance for all tenants, especially important in large-scale or resource-sensitive workloads.

5. Unified Governance with Tenant Autonomy

One of the recurring challenges in multi-tenancy is balancing autonomy for teams with control for platform admins.

vCluster makes this balance possible.

Tenants have full control within their vCluster, managing RBAC, workloads, and CRDs freely, while administrators maintain centralized oversight and governance from the host cluster.

This model ensures consistency in compliance and security without restricting developer velocity.

6. Solving the Core Trade-Off

vCluster redefines Kubernetes multi-tenancy by isolating tenants where it matters most (the control plane) while keeping compute, storage, and networking shared for efficiency.

It delivers the autonomy, stability, and scalability that neither namespaces nor dedicated clusters provide on their own.

In short:

Namespaces share too much. Dedicated clusters cost too much. vCluster balances both.

Best Practices for Designing Multi-Tenant Platforms

Designing a multi-tenant platform is about finding the right balance between autonomy, security, and operational control.

Kubernetes provides the building blocks, but how those blocks are combined determines whether a platform scales smoothly or becomes fragile over time.

The following best practices, drawn from vCluster’s published guidance, outline how to build secure, efficient, and maintainable multi-tenant systems.

1. Choose the Right Isolation Level

Different use cases demand different levels of isolation.

Before implementing multi-tenancy, platform teams should define the type of boundaries each tenant requires.

- Namespaces work for internal or low-risk tenants where collaboration outweighs strict separation.

- Dedicated clusters provide the highest isolation for regulated workloads but add heavy management overhead.

- vClusters offer a middle ground: strong control-plane isolation with the efficiency of shared infrastructure.

Matching the isolation level to the use case prevents over-engineering and ensures the right trade-off between cost and security.
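The guidance above can be condensed into a simple decision rule. This is a hypothetical sketch: the `choose_isolation` function and its three boolean inputs are illustrative assumptions, and a real platform would weigh more factors (budget, compliance scope, tenant count).

```python
from enum import Enum


class Isolation(Enum):
    NAMESPACE = "namespace"
    VCLUSTER = "vcluster"
    DEDICATED_CLUSTER = "dedicated cluster"


def choose_isolation(regulated: bool, trusted_internal: bool, needs_cluster_scoped: bool) -> Isolation:
    """Hypothetical rule mirroring the three options listed above.

    regulated: workload falls under strict regulatory requirements.
    trusted_internal: internal, low-risk tenant where collaboration outweighs separation.
    needs_cluster_scoped: tenant wants its own CRDs, webhooks, or cluster-wide controllers.
    """
    if regulated:
        return Isolation.DEDICATED_CLUSTER  # highest isolation, highest overhead
    if trusted_internal and not needs_cluster_scoped:
        return Isolation.NAMESPACE  # plain namespaces are enough
    return Isolation.VCLUSTER  # control-plane isolation on shared infrastructure


print(choose_isolation(regulated=False, trusted_internal=True, needs_cluster_scoped=True).value)
```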

2. Enforce Resource Quotas and Limits

In shared environments, resource fairness is critical.

Applying ResourceQuotas and LimitRanges helps prevent any tenant from consuming more CPU, memory, or storage than allocated.

For vCluster-based setups, quotas can be defined at both the host and virtual cluster levels, ensuring predictable performance and stable capacity planning.

Consistent quota policies also make cost allocation and chargeback models more transparent.
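As a sketch of what per-tenant quotas might look like, the helper below builds a ResourceQuota and a LimitRange for one namespace as plain dictionaries. The function name, object names, and default values are hypothetical. The LimitRange matters because, once a quota covers CPU or memory requests, pods that omit those values can be rejected; container defaults fill them in.

```python
import json


def tenant_quota_manifests(namespace: str, cpu: str, memory: str, storage: str) -> list[dict]:
    """Build a ResourceQuota and LimitRange for one tenant namespace (hypothetical helper)."""
    resource_quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "tenant-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,  # total CPU requested by all pods in the namespace
                "requests.memory": memory,  # total memory requested
                "requests.storage": storage,  # total storage requested by PVCs
            }
        },
    }
    limit_range = {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "tenant-defaults", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    # Illustrative defaults applied to containers that omit requests/limits.
                    "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                    "default": {"cpu": "500m", "memory": "512Mi"},
                }
            ]
        },
    }
    return [resource_quota, limit_range]


if __name__ == "__main__":
    # Emit a v1 List that could be piped to `kubectl apply -f -`.
    items = tenant_quota_manifests("team-a", "8", "16Gi", "100Gi")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

In a vCluster setup, the same objects could be applied in the host namespace backing a virtual cluster, inside the virtual cluster, or both.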

3. Strengthen Access Controls with RBAC

A strong Role-Based Access Control (RBAC) model is central to multi-tenant security.

Each tenant should have permissions scoped to their environment, while administrative control over cluster-level settings should remain centralized.

For vCluster environments, RBAC can be defined at two levels:

- Within each virtual cluster for tenant-level access control

- At the host level to manage who can create or modify vClusters

This two-layer model ensures autonomy for tenants and stability for platform administrators.
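A namespace-scoped Role and RoleBinding is one way to express "permissions scoped to their environment." The sketch below is hypothetical (the group name, object names, and resource list are assumptions); because a Role is namespaced, it cannot grant cluster-scoped rights such as creating CRDs. Both the host cluster and each virtual cluster expose a standard Kubernetes API, so manifests of this shape could be applied at either layer.

```python
RBAC_API = "rbac.authorization.k8s.io"
EDIT_VERBS = ["get", "list", "watch", "create", "update", "patch", "delete"]


def tenant_admin_rbac(namespace: str, group: str) -> list[dict]:
    """Build a Role plus RoleBinding granting a tenant group edit rights in one namespace."""
    role = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"name": "tenant-admin", "namespace": namespace},
        "rules": [
            # Core API group ("") resources.
            {"apiGroups": [""], "resources": ["pods", "services", "configmaps", "secrets"], "verbs": EDIT_VERBS},
            {"apiGroups": ["apps"], "resources": ["deployments", "statefulsets"], "verbs": EDIT_VERBS},
            {"apiGroups": ["batch"], "resources": ["jobs", "cronjobs"], "verbs": EDIT_VERBS},
        ],
    }
    binding = {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "tenant-admin-binding", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_API}],
        "roleRef": {"kind": "Role", "name": "tenant-admin", "apiGroup": RBAC_API},
    }
    return [role, binding]
```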

4. Implement Network and Security Policies

Network boundaries are as important as RBAC boundaries.

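Using NetworkPolicies, teams can restrict communication between namespaces or vClusters to prevent accidental or unauthorized cross-tenant access. NetworkPolicies only take effect if the cluster’s CNI plugin enforces them, so confirm that support before relying on them.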

For added security, integrate admission controllers or policy engines to enforce mandatory network isolation rules across all tenants.

A combination of RBAC and NetworkPolicies helps maintain a strong zero-trust posture inside the shared platform.
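A common starting point is a default-deny ingress policy plus a rule that allows traffic only from pods in the same namespace. The sketch below builds both as dictionaries; the policy names are hypothetical. In a vCluster setup this assumes the virtual cluster’s pods land in a single host namespace, which we understand to be the default but should be verified for your configuration.

```python
def tenant_network_policies(namespace: str) -> list[dict]:
    """Build default-deny ingress plus allow-same-namespace policies (hypothetical names)."""
    default_deny = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # Empty podSelector selects every pod; no ingress rules means all ingress is denied.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }
    allow_same_namespace = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A bare podSelector in "from" matches pods in the policy's own namespace only.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }
    return [default_deny, allow_same_namespace]
```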

5. Centralize Logging, Monitoring, and Alerting

Multi-tenant visibility depends on consistent observability.

Logs, metrics, and traces should be collected across all tenants and aggregated in a centralized monitoring stack such as Prometheus, Grafana, and Loki.

Each tenant can access metrics relevant to their workloads, while platform administrators maintain a global view of usage, performance, and health.

This approach simplifies troubleshooting, improves accountability, and strengthens audit compliance.
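The split between a tenant’s view and the administrator’s global view usually hinges on a tenant label attached to every metric. This hypothetical sketch assumes a label literally named `tenant` and an in-memory list of samples; in a real stack the same idea is applied as a label filter on queries rather than in application code.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Sample:
    """One metric sample; the 'tenant' label key is an assumption for this sketch."""

    name: str
    labels: dict
    value: float


def tenant_view(samples: list[Sample], tenant: str) -> list[Sample]:
    """What a single tenant is allowed to see: only samples carrying its own tenant label."""
    return [s for s in samples if s.labels.get("tenant") == tenant]


def usage_by_tenant(samples: list[Sample], metric: str) -> dict:
    """Administrator's global view: total of one metric per tenant."""
    totals = defaultdict(float)
    for s in samples:
        if s.name == metric:
            totals[s.labels.get("tenant", "unlabeled")] += s.value
    return dict(totals)
```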

6. Automate Cluster and Tenant Lifecycle Management

Manual cluster management doesn’t scale.

Automating provisioning, updates, and teardown ensures consistency and reduces human error.

vCluster supports declarative creation via GitOps tools like Argo CD or Flux, allowing teams to define vClusters and their configurations as code.

This makes it easy to:

- Provision environments on demand

- Apply versioned configuration updates

- Retire unused environments automatically

Automation transforms multi-tenancy from an operational burden into a repeatable, scalable process.
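Declarative lifecycle management boils down to comparing the desired state (stored in Git) with what is running and deriving create, update, and delete actions. The sketch below shows that comparison in isolation; it is not the Argo CD or Flux API, and the `plan_vclusters` function and its "name to config version" maps are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    create: tuple  # vClusters declared in Git but not yet running
    update: tuple  # running vClusters whose declared config changed
    delete: tuple  # running vClusters no longer declared (retired environments)


def plan_vclusters(desired: dict, actual: dict) -> Plan:
    """Diff desired vs actual state; both map vCluster name -> config version string."""
    create = tuple(sorted(n for n in desired if n not in actual))
    delete = tuple(sorted(n for n in actual if n not in desired))
    update = tuple(sorted(n for n in desired if n in actual and desired[n] != actual[n]))
    return Plan(create, update, delete)


print(plan_vclusters({"team-a": "v2", "team-b": "v1"}, {"team-a": "v1", "team-c": "v1"}))
```

Running this diff on every commit, rather than on request, is what makes provisioning on demand and automatic retirement repeatable.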

7. Define Clear Governance and Policy Frameworks

As the number of tenants grows, governance frameworks become essential.

Use admission policies, OPA Gatekeeper, or Kyverno to define and enforce global rules, such as allowed container images, label standards, or resource constraints.

This ensures consistency across all tenants and helps prevent configuration drift.

The key is to standardize governance without restricting innovation: policies should enable safety, not bureaucracy.
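Engines such as Kyverno or OPA Gatekeeper express rules like these in their own policy formats. The Python below only illustrates the validation logic behind the three example rules mentioned above; the registry prefix and required label keys are hypothetical placeholders.

```python
ALLOWED_REGISTRIES = ("registry.example.com/",)  # hypothetical approved registry prefix
REQUIRED_LABELS = ("team", "cost-center")  # hypothetical label standard


def validate_pod(pod: dict) -> list[str]:
    """Return a list of policy violations for a pod manifest (empty list means compliant)."""
    violations = []
    labels = pod.get("metadata", {}).get("labels", {})
    for key in REQUIRED_LABELS:
        if key not in labels:
            violations.append(f"missing required label '{key}'")
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        image = container.get("image", "")
        if not image.startswith(ALLOWED_REGISTRIES):  # str.startswith accepts a tuple
            violations.append(f"container '{name}' uses disallowed image '{image}'")
        if not container.get("resources", {}).get("limits"):
            violations.append(f"container '{name}' sets no resource limits")
    return violations
```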

8. Prioritize Secure Configuration Management

Isolating tenant configurations reduces risk.

Each vCluster should maintain its own configuration space, secrets, and service accounts, while the host cluster manages only the shared infrastructure components.

Avoid sharing credentials or secrets between tenants, and apply encryption at rest and in transit for all sensitive data.

This approach ensures that each tenant operates securely, even when using the same physical infrastructure.

9. Regularly Audit and Test Multi-Tenant Boundaries

Security in multi-tenancy is not a one-time setup; it’s a continuous process.

Regular audits of RBAC roles, network policies, and resource quotas help identify weak spots early.

Penetration testing and tenant-level policy reviews ensure that isolation mechanisms continue to function as intended.

Routine validation keeps the platform aligned with both internal compliance standards and external regulatory requirements.
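Part of a routine audit can be automated by checking every tenant namespace for the guardrails described earlier and flagging credentials reused across tenants. This is a hypothetical sketch: the inventory structure, which would in practice be gathered from the Kubernetes API, and the required object kinds are assumptions. Secrets are compared by SHA-256 digest so the report never prints secret values.

```python
import hashlib
from collections import defaultdict

REQUIRED_KINDS = {"ResourceQuota", "LimitRange", "NetworkPolicy"}  # assumed baseline


def audit_tenants(inventory: dict) -> dict:
    """Report missing guardrails and shared secrets per tenant namespace.

    inventory: namespace -> {"kinds": iterable of object kinds present,
                             "secrets": list of secret values as bytes}
    """
    findings = defaultdict(list)
    owners_by_digest = defaultdict(set)
    for ns, objs in inventory.items():
        for kind in sorted(REQUIRED_KINDS - set(objs.get("kinds", ()))):
            findings[ns].append(f"missing {kind}")
        for secret in objs.get("secrets", ()):
            owners_by_digest[hashlib.sha256(secret).hexdigest()].add(ns)
    # Any digest seen in more than one namespace means a credential is shared across tenants.
    for namespaces in owners_by_digest.values():
        if len(namespaces) > 1:
            for ns in sorted(namespaces):
                findings[ns].append(f"secret shared with {sorted(namespaces - {ns})}")
    return dict(findings)
```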

10. Balance Freedom and Oversight

The most successful multi-tenant platforms give tenants the freedom to innovate while maintaining consistent oversight.

With vCluster, platform teams can enforce global rules from the host cluster while tenants operate freely within their own control planes.

This balance drives faster development cycles without compromising governance, performance, or stability.

Building a secure and scalable multi-tenant platform requires intentional design.

By combining the right isolation model with automation, governance, and continuous observability, platform teams can create an environment that’s efficient for operations and safe for every tenant.

For the platform team, the goal of multi-tenancy is simple: empower teams to move fast, safely, consistently, and at scale. This mission is put to the ultimate test when managing expensive, specialized resources like GPUs and bare-metal servers.

For a detailed walkthrough of the specific challenges of multi-tenancy on GPU infrastructure, read the bare-metal blog post listed in the references below.

Conclusion

Multi-tenancy has become essential to how modern organizations run Kubernetes at scale.

From the early days of physical separation and virtual machines to the rise of namespaces and dedicated clusters, every stage of this evolution has aimed to solve the same challenge: how to share infrastructure safely, efficiently, and predictably.

The answer lies in control-plane isolation on shared infrastructure.

By introducing this layer of separation, vCluster removes the long-standing trade-offs between cost, complexity, and autonomy.

It allows platform teams to operate a single, efficient host cluster while giving every tenant, whether a developer, team, or customer, a fully independent Kubernetes experience.

vCluster turns Kubernetes into a truly multi-tenant platform: secure, scalable, and optimized for both collaboration and control.

It enables faster environment creation, simplified governance, and stronger isolation, all without sacrificing performance or operational efficiency.

As organizations continue to grow, the goal of multi-tenancy remains the same: empower every tenant to move fast, stay secure, and scale without friction.

With vCluster, that balance is no longer a trade-off; it’s built in.

Frequently Asked Questions

What is Kubernetes multi-tenancy?

Kubernetes multi-tenancy allows multiple independent users or teams (tenants) to share the same Kubernetes infrastructure while maintaining logical isolation. This approach enables better resource efficiency, consistent security and governance, and faster environment provisioning without requiring dedicated clusters for each tenant.

What are the main challenges with Kubernetes multi-tenancy?

The primary challenges include weak control plane isolation, security and access management issues, resource contention between tenants, operational complexity as tenant numbers grow, and the trade-off between cost efficiency and security. Traditional namespace-based approaches often lack strong isolation, while dedicated clusters per tenant create management overhead and high costs.

What's the difference between namespaces and virtual clusters?

Namespaces provide basic logical separation within a single Kubernetes cluster but share the same control plane, API server, and cluster-wide resources. Virtual clusters give each tenant its own Kubernetes control plane (API server, controllers, and optionally a scheduler) while sharing underlying infrastructure, providing stronger isolation without the overhead of managing separate physical clusters.

How does vCluster solve multi-tenancy problems?

vCluster provides control plane isolation on shared infrastructure. Each tenant gets a fully functional virtual control plane running inside a host cluster, allowing them to install CRDs, custom controllers, and manage configurations independently. This prevents CRD conflicts and control-plane interference between tenants while maintaining resource efficiency through shared nodes and networking.

Can multiple tenants safely share GPU resources in Kubernetes?

Yes, with proper isolation mechanisms like virtual clusters. vCluster lets GPU workloads run independently while sharing the underlying hardware efficiently. By isolating control planes, it helps deliver predictable performance, simpler scheduling, and clean separation between AI or ML workloads without compromising security or performance.

What's the best multi-tenancy model for SaaS platforms?

Virtual clusters are well suited to SaaS platforms because they provide strong tenant isolation (each customer gets their own control plane) while keeping infrastructure shared and cost-efficient. This approach combines much of the isolation of dedicated clusters with the scalability of a shared platform, simplifying compliance and customer environment management.

What security measures are needed for multi-tenant Kubernetes?

Essential security measures include strong RBAC policies scoped per tenant, network policies to restrict cross-tenant communication, resource quotas to prevent abuse, control plane isolation (ideally through virtual clusters), regular audits of access controls, admission policies for governance, and encrypted secrets management per tenant.

What's the difference between virtual clusters and dedicated clusters?

Dedicated clusters give each tenant a completely separate Kubernetes environment with isolated nodes, networking, and control plane, but require heavy management and high infrastructure costs. A virtual cluster provides similar control plane isolation and tenant autonomy but shares the underlying infrastructure (nodes, network, storage), dramatically reducing operational complexity and cost while maintaining strong boundaries.

How many virtual clusters can run on a single host cluster?

The number depends on your host cluster capacity and workload requirements. vCluster is designed to scale efficiently; some organizations run hundreds of virtual clusters on a single host. Because virtual clusters share infrastructure and only virtualize the control plane, they're much more resource-efficient than running separate physical clusters.

Is multi-tenancy suitable for production workloads?

Yes, when implemented with proper isolation mechanisms. Virtual clusters provide production-grade multi-tenancy by isolating control planes while maintaining performance and security. Many organizations run production SaaS platforms, internal developer environments, and mission-critical workloads using virtual cluster architecture with strict governance and monitoring in place.

What happens if one tenant's workload fails in a multi-tenant setup?

With proper control plane isolation (like vCluster), tenant failures are contained within their virtual cluster. A misconfigured CRD, broken controller, or workload crash in one tenant won't affect others because each has an independent control plane. The shared infrastructure remains stable, and only the affected tenant experiences issues. Because nodes are still shared, resource quotas and limits remain important to keep one tenant's runaway workload from starving its neighbors.

How does multi-tenancy work with Kubernetes RBAC?

In virtual cluster setups, RBAC operates at two levels: within each virtual cluster for tenant-level access control, and at the host cluster level to manage who can create or modify virtual clusters. This two-layer model ensures tenant autonomy (they control access within their environment) while platform administrators maintain governance over the overall infrastructure.

References:

🔗 https://www.vcluster.com/blog/kubernetes-multi-tenancy-10-essential-considerations

🔗 https://www.vcluster.com/blog/understanding-kubernetes-multi-tenancy-models-challenges-and-solutions

🔗 https://www.vcluster.com/blog/multi-tenancy-in-2025-and-beyond

🔗 https://www.vcluster.com/blog/kubernetes-multi-tenancy-are-you-doing-it-the-hard-way

🔗 https://www.vcluster.com/blog/comparing-multi-tenancy-options-in-kubernetes

🔗 https://www.vcluster.com/blog/vcluster-shared-platform-stack

🔗 https://www.vcluster.com/blog/kubernetes-multi-tenancy-vcluster

🔗 https://www.vcluster.com/blog/bare-metal-kubernetes-with-gpu-challenges-and-multi-tenancy-solutions
