The Journey to Runtime Isolation for HDO with vCluster

In today’s fast-paced tech landscape, continuous innovation is crucial for organizations to maintain their competitive edge. The performance of a company hinges on the capabilities of its team members. To ensure we onboard skilled candidates, we built HDO, a tech assessment tool for hiring DevOps staff that mimics real-world environments by placing candidates in tasks they have to solve.

This article details how integrating vCluster technology into HDO has revamped task creation and candidate experience. The tasks are designed to evaluate candidates’ technical skills, problem-solving abilities, and familiarity with DevOps practices.

Our adventure began with an in-depth exploration of the tools market leaders use for Infrastructure and DevOps assessments. We discovered a gap in support for multi-node Kubernetes tasks. This inspired us to build a solution that overcomes that limitation.

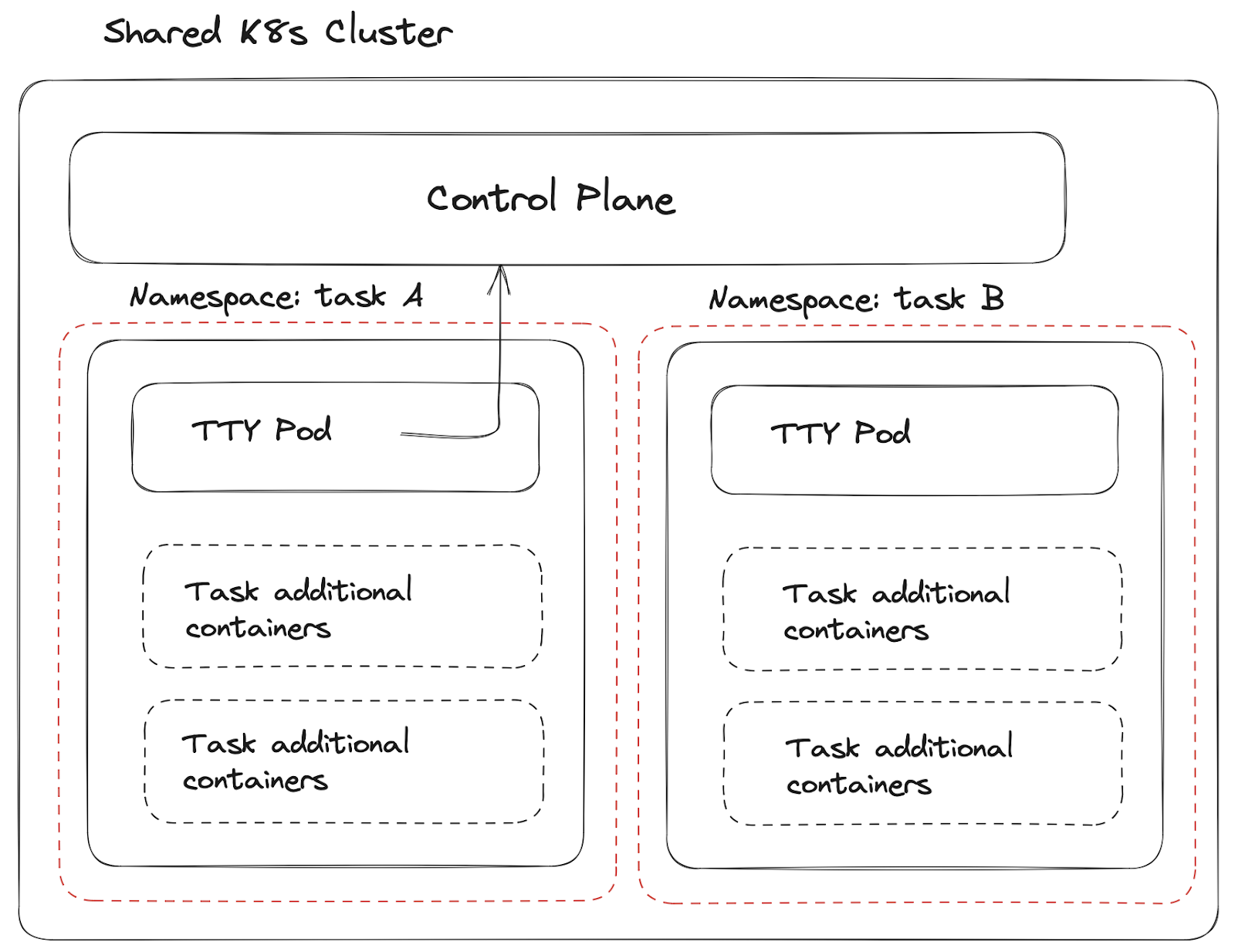

To tackle the limitation of a single-node virtual machine (VM) for tasks requiring multi-node k8s, we designed a system that isolates each task within its own namespace in a shared Kubernetes cluster.
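As a rough illustration of the namespace-per-task idea, the sketch below builds a Namespace manifest for a task. The `task-` prefix, the `example.hdo/task-id` label, and the `task_namespace` helper are hypothetical names chosen for this example; the article does not describe HDO's actual naming scheme.

```python
import re


def task_namespace(task_id: str) -> dict:
    """Build a Namespace manifest for one task (naming scheme is hypothetical)."""
    # Namespace names must be DNS labels: lowercase alphanumerics and '-',
    # at most 63 characters, starting and ending with an alphanumeric.
    slug = re.sub(r"[^a-z0-9-]+", "-", task_id.lower())[:58].strip("-")
    if not slug:
        raise ValueError(f"task id {task_id!r} has no usable characters")
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": f"task-{slug}",
            # Hypothetical label so that all objects of a task can be selected.
            "labels": {"example.hdo/task-id": slug},
        },
    }


if __name__ == "__main__":
    print(task_namespace("Candidate 42/K8s")["metadata"]["name"])
```

The resulting dictionary can be serialized to YAML or JSON and applied with any Kubernetes client.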

Despite our initial success with a shared Kubernetes setup, we soon confronted a series of challenges. Our commitment to empowering task creators with the freedom to deploy any dependency within the task namespace began to interfere with our ability to control anti-affinity or node selectors. Furthermore, managing Persistent Volumes (PV) and Persistent Volume Claims (PVC) permissions within this structure amplified the complexity, necessitating a more elegant solution.

We then began exploring ways to mutate objects and limit user abilities, since built-in RBAC couldn’t give us enough flexibility. Our first choice was to add Gatekeeper to reject objects created without proper anti-affinity defined.
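Gatekeeper policies are written in Rego, so the following is not a Gatekeeper policy. It is a minimal Python sketch of the kind of check such a policy would perform: reject pod-creating objects that define no pod anti-affinity. The `admit` function and the list of workload kinds are assumptions made for illustration.

```python
WORKLOAD_KINDS = {"Deployment", "StatefulSet", "DaemonSet", "ReplicaSet", "Job"}


def has_pod_anti_affinity(pod_spec: dict) -> bool:
    """Return True if the pod spec declares at least one anti-affinity rule."""
    anti = (pod_spec.get("affinity") or {}).get("podAntiAffinity") or {}
    return bool(
        anti.get("requiredDuringSchedulingIgnoredDuringExecution")
        or anti.get("preferredDuringSchedulingIgnoredDuringExecution")
    )


def admit(obj: dict) -> tuple[bool, str]:
    """Decide whether an object may be created (hypothetical admission logic)."""
    kind = obj.get("kind")
    if kind == "Pod":
        pod_spec = obj.get("spec", {})
    elif kind in WORKLOAD_KINDS:
        # Workload controllers embed the pod spec under spec.template.spec.
        pod_spec = obj.get("spec", {}).get("template", {}).get("spec", {})
    else:
        return True, "object does not create pods"
    if has_pod_anti_affinity(pod_spec):
        return True, "anti-affinity defined"
    return False, f"{kind} must define podAntiAffinity"
```

A check like this only controls what gets created; it does not isolate access to shared resources.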

While Gatekeeper excelled in policy control, it couldn't isolate access to shared resources such as nodes, the ingress controller, and CSI drivers.

As we were weighing the pros and cons of Gatekeeper, we discovered vCluster, an innovative technology that offered a novel approach to our problem. The prospect of leveraging vCluster to create isolated environments for our HDO tasks was intriguing, especially considering its potential to overcome the limitations we had encountered with Gatekeeper.

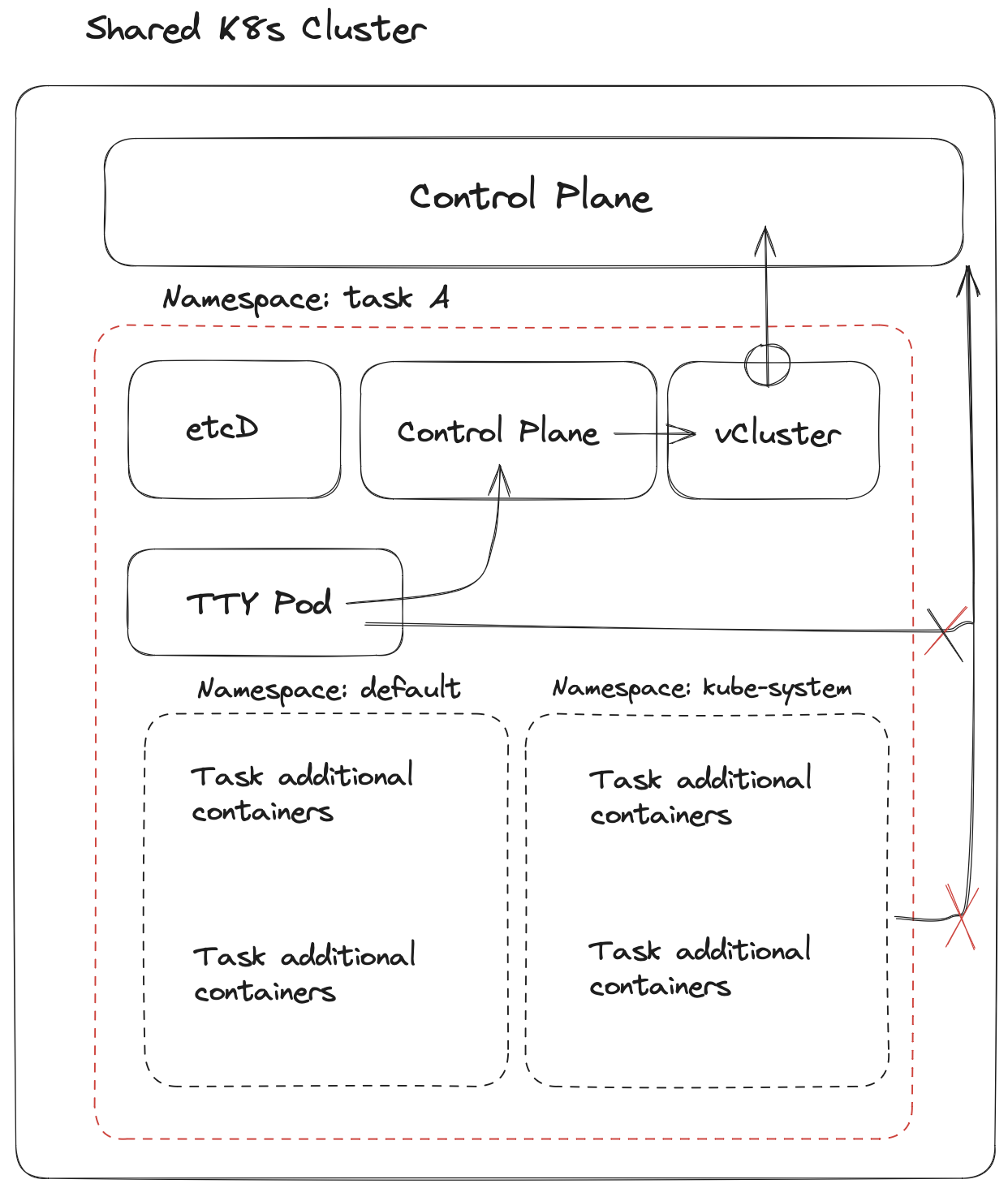

vCluster dramatically improved our approach to runtime isolation. It allowed us to deploy a full-scale Kubernetes control plane with etcd and a k8s API within the same namespace, ensuring total isolation for resources, network, and access.

The vCluster controller serves as a bridge between the task-specific k8s API and the shared cluster API, mutating objects and keeping them in sync. This approach solved our resource allocation issue and provided network isolation.
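To make the "mutate and keep in sync" step more concrete, here is a simplified Python sketch of how an object from the virtual API could be rewritten before it is created in the shared cluster. The `name-x-namespace-x-vcluster` naming pattern is modeled on how vCluster renames synced pods, but treat the details as an assumption: the real syncer also handles long names, owner references, and many other fields. The `example.hdo/...` labels and both function names are hypothetical.

```python
import copy


def host_name(name: str, namespace: str, vcluster: str) -> str:
    """Flatten a virtual name and namespace into one host-side name (assumed pattern)."""
    return f"{name}-x-{namespace}-x-{vcluster}"


def to_host_pod(virtual_pod: dict, host_namespace: str, vcluster: str) -> dict:
    """Return a copy of the pod rewritten for the shared (host) cluster."""
    host = copy.deepcopy(virtual_pod)  # never mutate the virtual object
    meta = host["metadata"]
    v_name = meta["name"]
    v_namespace = meta.get("namespace", "default")

    # Every virtual namespace collapses into the single task namespace on the host.
    meta["name"] = host_name(v_name, v_namespace, vcluster)
    meta["namespace"] = host_namespace

    # Hypothetical labels that let a syncer map the host object back.
    labels = meta.setdefault("labels", {})
    labels["example.hdo/virtual-name"] = v_name
    labels["example.hdo/virtual-namespace"] = v_namespace
    return host
```

Because names from all virtual namespaces are flattened into one host namespace, two pods named `web` in different virtual namespaces do not collide on the host.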

vCluster also unveiled several unexpected benefits:

vCluster’s isolation capabilities allow for the safe and effective deployment of cluster-wide resources, such as controllers like Istio or Elasticsearch. This gives task creators the freedom to add complex dependencies and resources to their tasks, enhancing their capabilities and the real-world applicability of the tasks.

Additionally, vCluster allows for the safe provisioning of Persistent Volumes (PV) and Persistent Volume Claims (PVC), a crucial feature when dealing with stateful applications within the tasks.

One critical metric for us is the balance between the boot time of an environment and cluster costs. Provisioning a dedicated cluster for each candidate would be expensive and slow. Making candidates wait longer than necessary for their assignments to boot before they can start thinking about the tasks would hurt the user experience of the HDO platform. vCluster resolved this problem.

Introducing vCluster added two minutes for etcd and the Kubernetes API to start in a new task namespace.

Timeline in minutes, before optimization:

vCluster: node provisioning (0 to 3), etcd + k8s API (3 to 5), CoreDNS (5 to 6)

Task payload: node provisioning (6 to 9), tty (9 to 10), custom init (10 to 15)

In total, provisioning a task took up to 15 minutes.

To enhance the candidate experience, we worked on reducing the time to boot an environment.

To do that, we introduced two ASG pools, each backed by a WarmPool.

The key benefit of this split is that vCluster triggers autoscaling of its own dedicated ASG in parallel with the main pool allocated for task resources. This guarantees that candidates can't influence vCluster performance, and it improves boot time.
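The article does not say how workloads are pinned to each pool. One common way to do it, shown here purely as an assumption, is a node label plus a matching taint per pool, with each pod spec given a node selector and a toleration. The `example.hdo/pool` key, the pool names, and the `pin_to_pool` helper are hypothetical.

```python
import copy

POOL_KEY = "example.hdo/pool"  # hypothetical node label and taint key


def pin_to_pool(pod_spec: dict, pool: str) -> dict:
    """Return a copy of the pod spec that can only schedule onto the given pool."""
    spec = copy.deepcopy(pod_spec)
    # The node selector restricts scheduling to nodes labeled for this pool.
    spec.setdefault("nodeSelector", {})[POOL_KEY] = pool
    # The toleration lets the pod onto nodes tainted for this pool.
    spec.setdefault("tolerations", []).append(
        {"key": POOL_KEY, "operator": "Equal", "value": pool, "effect": "NoSchedule"}
    )
    return spec


if __name__ == "__main__":
    control_plane = pin_to_pool({"containers": []}, "vcluster")
    payload = pin_to_pool({"containers": []}, "tasks")
    print(control_plane["nodeSelector"], payload["nodeSelector"])
```

With pending pods split this way, each pool scales on its own demand, which fits the parallel scale-up described above.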

Using WarmPool for ASG reduced node join time from three minutes to less than one minute.

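Timeline in minutes, with two ASG pools and WarmPool:

vCluster: node provisioning (0 to 1), etcd + k8s API (1 to 3), CoreDNS (3 to 4)

Task payload: node provisioning (1 to 2), tty (2 to 3), custom init (3 to 8)

Node provisioning now has separate lanes for regular nodes and ARM nodes.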

Total time for provisioning a task was about eight minutes.
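The two totals can be checked with a little arithmetic. The stage durations below come from reading the tick marks on the two timelines above as stage boundaries, which is an interpretation rather than something the article states outright. In the first setup the stages run one after another; in the second, the task payload lane starts at minute 1 and overlaps with the vCluster lane, so the total is the end of the longer lane.

```python
def lane_end(start: int, stages: list[tuple[str, int]]) -> int:
    """Minute at which a lane finishes, given its start and stage durations."""
    return start + sum(minutes for _, minutes in stages)


# Before: a single sequential chain (durations in minutes, interpreted from the ticks).
BEFORE = [
    ("node provisioning for vCluster", 3),
    ("etcd + k8s API", 2),
    ("CoreDNS", 1),
    ("node provisioning for task payload", 3),
    ("tty", 1),
    ("custom init", 5),
]

# After: two lanes that overlap thanks to the dedicated pools and WarmPool.
VCLUSTER_AFTER = [("node provisioning", 1), ("etcd + k8s API", 2), ("CoreDNS", 1)]
PAYLOAD_AFTER = [("node provisioning", 1), ("tty", 1), ("custom init", 5)]

total_before = lane_end(0, BEFORE)
total_after = max(lane_end(0, VCLUSTER_AFTER), lane_end(1, PAYLOAD_AFTER))

if __name__ == "__main__":
    print(total_before, total_after)  # prints "15 8"
```

Under this reading, the saving comes from two sources: node joins drop from three minutes to one, and the payload no longer waits for the vCluster lane to finish.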

After implementing vCluster in HDO’s Kubernetes environment, the advantages for candidates became evident. vCluster creates isolated virtual clusters inside the main Kubernetes cluster, so candidates can experiment and deploy custom resources, controllers, and services without influencing the parent environment. They can use Kubernetes custom resources and controllers such as Istio or the Elasticsearch controller, which adds depth and realism to the tasks, without risking the stability or security of the main Kubernetes environment.

The journey to achieving runtime isolation for HDO with vCluster has been a transformative experience for our team. The integration of vCluster technology into the HDO product has elevated the capabilities of task creators and enhanced the evaluation of DevOps candidates' technical skills and problem-solving abilities.

Our earlier shared Kubernetes setup restricted our control over task creation, leading to the "noisy neighbor" issue. With vCluster's unique approach to runtime isolation, we were able to deploy a complete Kubernetes control plane with etcd and a k8s API within the same namespace, ensuring total isolation for resources and network. Furthermore, vCluster eliminated the confusion candidates often experienced due to non-default namespaces and restricted access, allowing them to focus solely on their tasks and boosting task resolution efficiency.

This innovative solution's capacity to provide robust runtime isolation has significantly uplifted the quality and authenticity of our technical assessments. As a result, we can confidently onboard highly skilled candidates ready to excel in dynamic technology environments. Our experience with vCluster has been overwhelmingly positive, paving the way for future innovations.
