When 37% of Cloud Environments Are Vulnerable, "Just Use VMs" Isn't Good Enough

A three-line Dockerfile broke container security.

CVE-2025-23266, a critical vulnerability in the NVIDIA Container Toolkit, exposed 37% of cloud environments running AI workloads. Three lines of malicious code in a Docker image, and an attacker gets full root access to your Kubernetes node.

Not the container. The entire node.

This isn't theoretical. Wiz Research published the exploit. It's out there. And it bypassed traditional container isolation like it wasn't even there.

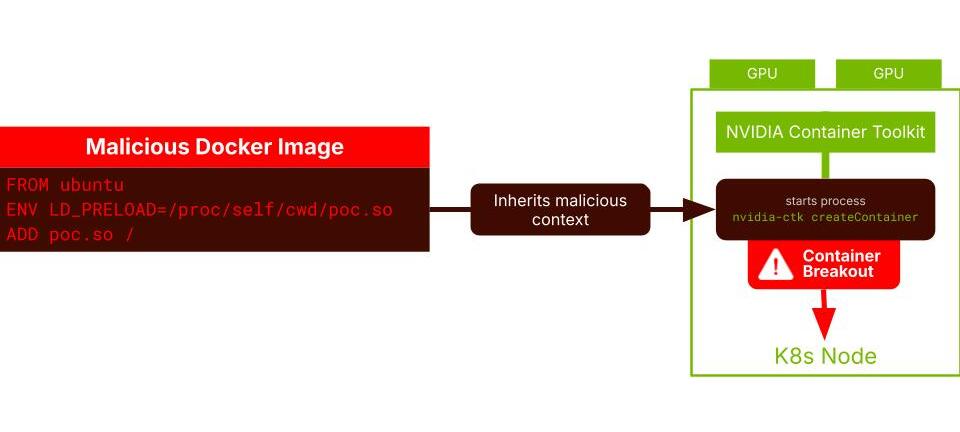

Here's what happened with the NVIDIA vulnerability:

A malicious Docker image sets an environment variable (LD_PRELOAD) that tells the dynamic linker to load an attacker-supplied shared library. The NVIDIA Container Toolkit, which needs privileged access on the host to expose GPUs to containers, inherits that environment while it sets the container up, before the container's own process even starts.
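One way to catch this class of image before it reaches a GPU node is to inspect the environment the image declares. The following is a minimal Python sketch, not NVIDIA's or Wiz's tooling. It assumes the image config has already been fetched as a dict in the OCI image-config layout, where `config.Env` is a list of `KEY=VALUE` strings. The `RISKY_VARS` set and the `find_risky_env` name are illustrative choices.

```python
from typing import Any

# Dynamic-linker variables that can inject code into any process that
# inherits the image's environment. Illustrative, not exhaustive.
RISKY_VARS = {"LD_PRELOAD", "LD_AUDIT"}


def find_risky_env(image_config: dict[str, Any]) -> list[str]:
    """Return the KEY=VALUE entries in config.Env whose key is in RISKY_VARS."""
    env = (image_config.get("config") or {}).get("Env") or []
    flagged = []
    for entry in env:
        key, _, _value = entry.partition("=")
        if key in RISKY_VARS:
            flagged.append(entry)
    return flagged


if __name__ == "__main__":
    # Hypothetical config resembling an image that sets LD_PRELOAD.
    sample = {"config": {"Env": ["PATH=/usr/bin", "LD_PRELOAD=/tmp/evil.so"]}}
    print(find_risky_env(sample))
```

A check like this only reduces exposure. It does not replace patching the toolkit or isolating the launch path.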

Result? Full container breakout. Root access to the Kubernetes node. Game over.

The fundamental issue isn't NVIDIA-specific. It's that containers share the host kernel. When you run as root inside a container without user-namespace remapping, you're root on that kernel. And when critical processes like the NVIDIA toolkit run in that shared context, a single misconfiguration or exploit can compromise everything.
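Whether root in the container really is root on the host kernel depends on the user namespace mapping, and by default most containers share the host's. A rough Python sketch of that check follows. It parses text in the format of Linux's `/proc/self/uid_map` (inside ID, outside ID, range length). The function name `root_is_host_root` is an illustrative choice, and the result is a heuristic: nested user namespaces can make it misleading.

```python
def root_is_host_root(uid_map_text: str) -> bool:
    """True if UID 0 in this namespace maps to UID 0 in the parent namespace."""
    for line in uid_map_text.splitlines():
        fields = line.split()
        if len(fields) != 3:
            continue  # skip blank or malformed lines
        inside_start, outside_start, length = (int(f) for f in fields)
        # Does this range cover UID 0 inside the namespace?
        if inside_start <= 0 < inside_start + length:
            return outside_start - inside_start == 0
    return False  # UID 0 is not mapped at all


if __name__ == "__main__":
    # Identity mapping, as seen when no user namespace remapping is in use.
    print(root_is_host_root("         0          0 4294967295\n"))
    # Remapped: container UID 0 is an unprivileged UID outside.
    print(root_is_host_root("0 100000 65536\n"))
```

On a Linux host, pass the contents of `/proc/self/uid_map` read from inside the container.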

The security playbook says: if containers are too risky, use VMs.

VMs give you separate kernels. True isolation. An exploit in one VM can't touch another because each has its own kernel boundary.

Sounds perfect. Until you try to actually run AI infrastructure with VMs.

VMs don't work for:

VMs solve the security problem by making the operational problem worse.

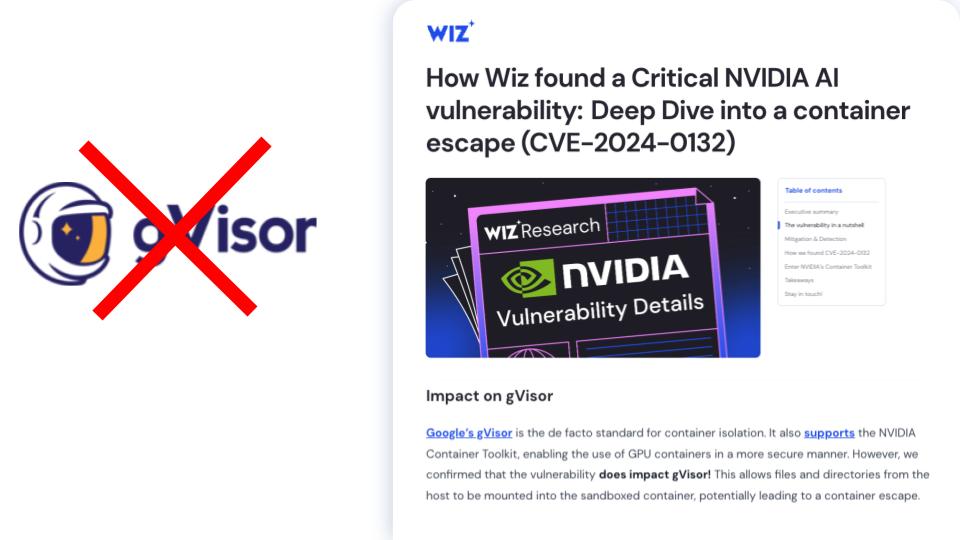

Google's gVisor has been the go-to answer for hardening containers. It intercepts system calls in a user-space kernel and limits what ever reaches the host kernel.

It works. Kind of.

But here's what Wiz Research confirmed: the NVIDIA vulnerability would have bypassed gVisor entirely. Why? Because the exploit happens during container initialization—before gVisor's system call filtering even activates.

Beyond that, gVisor has practical limitations:

gVisor raises the bar. But not high enough.

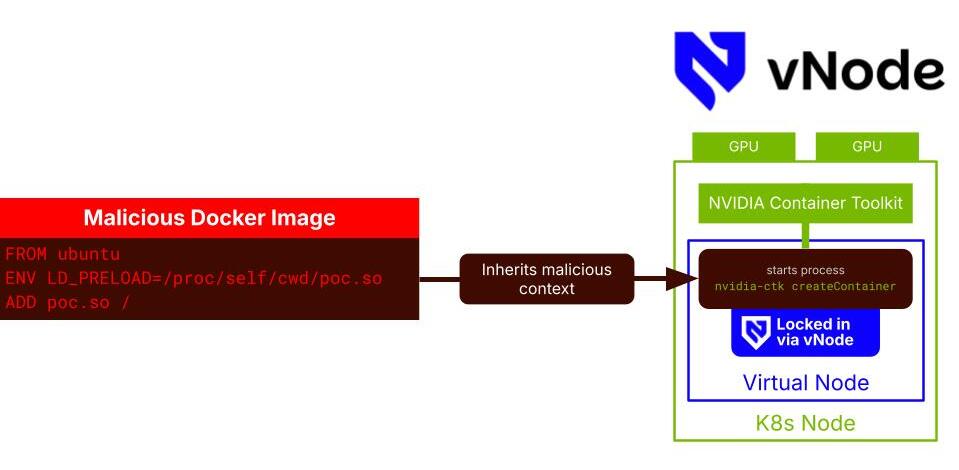

What if you could get VM-level isolation without actually using VMs?

That's what vNode does.

Instead of wrapping every container in a heavy VM or aggressively filtering system calls, vNode creates a lightweight isolation boundary around the entire container launch process—including the runtime, the container daemon, and any privileged tooling like the NVIDIA Container Toolkit.

Here's how it works:

When a pod with the vNode runtime class lands on your Kubernetes node, vNode spawns a hardened container—a "virtual node"—and runs the entire container lifecycle inside it. The malicious Docker image? It runs inside your container, which runs inside the vNode container.
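Opting a workload in comes down to one field on the pod spec. The sketch below builds such a manifest in Python and prints it as JSON, which `kubectl apply -f -` accepts. `runtimeClassName` is the standard Kubernetes field. The runtime class name `vnode`, the pod name, and the image are assumptions for illustration, so check the vNode documentation for the name your install registers.

```python
import json


def pod_manifest(name: str, image: str, runtime_class: str = "vnode") -> dict:
    """Build a minimal Pod manifest that requests the given RuntimeClass."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Assumed RuntimeClass name; it must match a RuntimeClass
            # object that exists in the cluster.
            "runtimeClassName": runtime_class,
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image.
    manifest = pod_manifest("gpu-job", "example.com/team/gpu-job:latest")
    print(json.dumps(manifest, indent=2))
```

Pods that omit the field keep using the cluster's default runtime, so adoption can be incremental.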

If an attacker breaks out of their container, they land in the virtual node. Not your actual Kubernetes node.

And the virtual node gives them nothing:

The NVIDIA exploit? Completely contained. The attacker breaks out of their container and realizes they're just inside another container—one they can't escape.

vNode doesn't compromise. You get strong isolation without operational complexity.

vNode works even better when combined with vCluster.

Here's why: if you're running multiple virtual Kubernetes clusters (vClusters) on the same physical nodes, you can group the pods from each vCluster into a single virtual node per physical node.

Without vCluster: Every pod gets wrapped in its own vNode container. More overhead.

With vCluster: Pods from vCluster-a share one vNode. Pods from vCluster-b share another. Less overhead, same security.

You're isolating at the tenant level, not the pod level, which is more efficient and still gives you strong boundaries between workloads.
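Counting isolation boundaries makes the overhead difference concrete. This sketch illustrates the grouping idea only and is not vNode's scheduler: the `Pod` tuple, the tenant names, and both counting functions are hypothetical.

```python
from typing import NamedTuple


class Pod(NamedTuple):
    name: str
    node: str    # physical node the pod is scheduled on
    tenant: str  # owning vCluster, e.g. "vcluster-a"


def vnodes_per_pod(pods: list[Pod]) -> int:
    """Without grouping: one virtual node per pod."""
    return len(pods)


def vnodes_per_tenant(pods: list[Pod]) -> int:
    """With grouping: one virtual node per (physical node, tenant) pair."""
    return len({(p.node, p.tenant) for p in pods})


if __name__ == "__main__":
    pods = [
        Pod("a-1", "node-1", "vcluster-a"),
        Pod("a-2", "node-1", "vcluster-a"),
        Pod("b-1", "node-1", "vcluster-b"),
        Pod("a-3", "node-2", "vcluster-a"),
    ]
    print(vnodes_per_pod(pods), vnodes_per_tenant(pods))  # prints "4 3"
```

The gap widens as each tenant runs more pods per node, since the grouped count depends only on how many tenants share a node.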

vNode ships as a Helm chart. Install it in your cluster, set the vNode runtime class on your pods, and you're done.

From the user's perspective, nothing changes. kubectl get pods looks the same. Port forwarding works. Logs work. Everything is transparent—except your workloads are now running inside hardened virtual nodes.

Best for:

Container security has been stuck between two bad options: accept the shared kernel risk or deal with the operational overhead of VMs.

vNode gives you a third option—container-native isolation that's as strong as VMs but as lightweight and flexible as containers.

When 37% of cloud environments are vulnerable to a three-line Dockerfile exploit, you need more than hope and best practices.

You need actual isolation.

Want to see it in action? Reach out to our team for a demo or start with the open-source project today.
