Why a Tenancy Layer Belongs in the Kubernetes Tech Stack (in 2026)

As Kubernetes adoption accelerates, platform teams face a familiar tension: how do you give developers safe, isolated, self-service environments without creating a sprawl of physical clusters that are expensive to operate and painful to maintain?

In 2026, this tension is no longer just about developer experience or cost optimization; AI workloads and GPU infrastructure are pushing it to a breaking point.

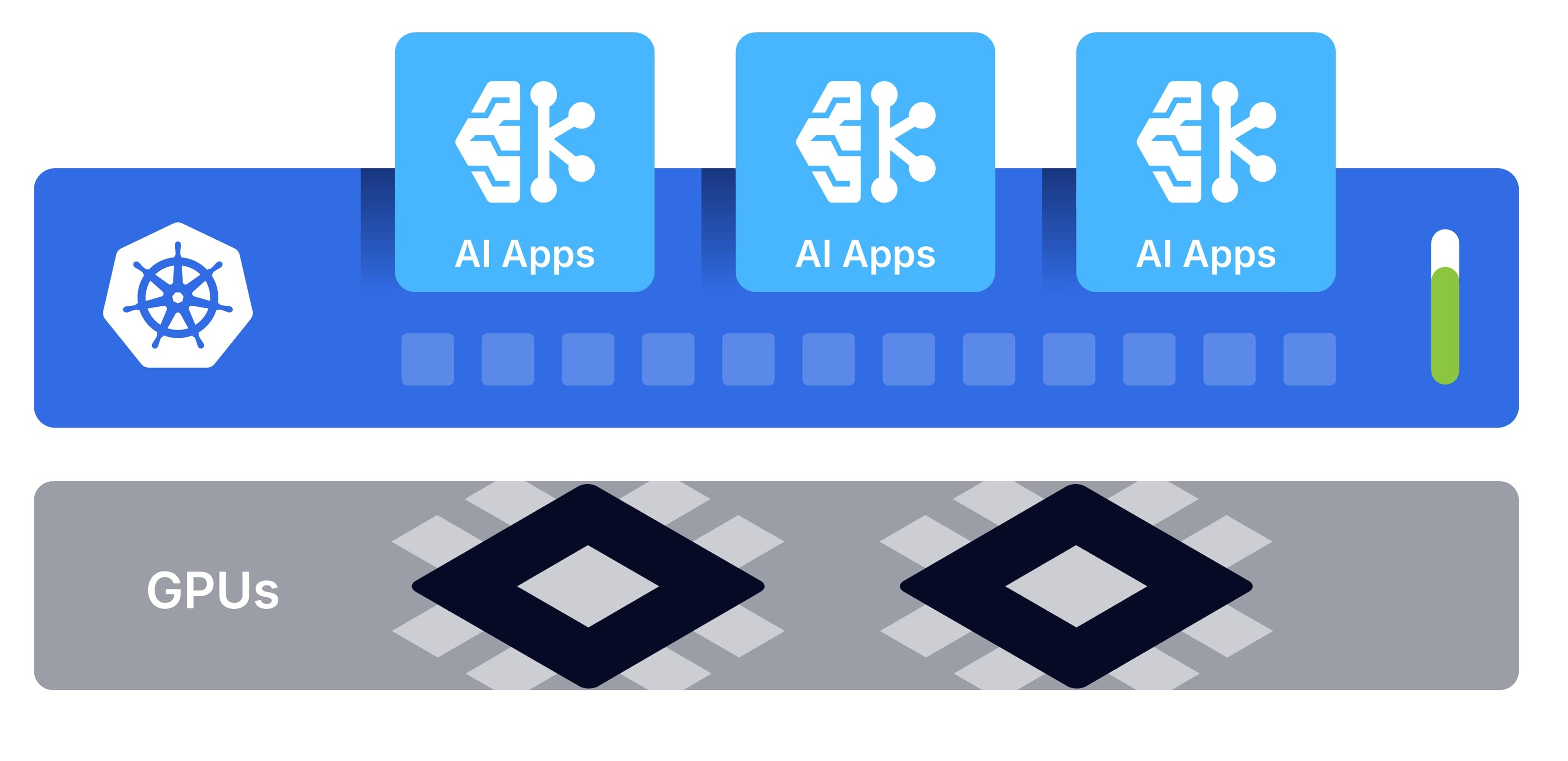

Kubernetes is now the control plane for AI training pipelines, GPU-backed inference services, ephemeral preview environments, and internal platforms serving dozens or hundreds of teams. GPU nodes are expensive, scarce, and highly specialized. AI teams need fast, isolated access to them, often temporarily, often at scale, and often with custom schedulers, drivers, or runtimes.

Traditional Kubernetes models struggle under the pressure of AI workloads on GPU infrastructure.

Trying to meet these demands with traditional Kubernetes patterns quickly exposes painful trade-offs:

These issues are forcing platform teams to rethink how Kubernetes environments are created, isolated, and governed.

Most Kubernetes platforms today are still operating with assumptions that made sense years ago:

AI workloads break all of these assumptions.

Trying to solve this with namespaces alone leads to fragile RBAC, shared blast radius, and security risk. Solving it with dedicated clusters leads to GPU fragmentation, idle capacity, and unsustainable operational overhead.

A modern Kubernetes platform introduces a Tenancy Layer: a layer responsible for isolating, organizing, and governing workloads, especially in GPU- and AI-heavy systems.

This layer sits between your Networking/Storage foundation and your Observability, Security, and Operations tooling, and it fundamentally changes how platforms scale:

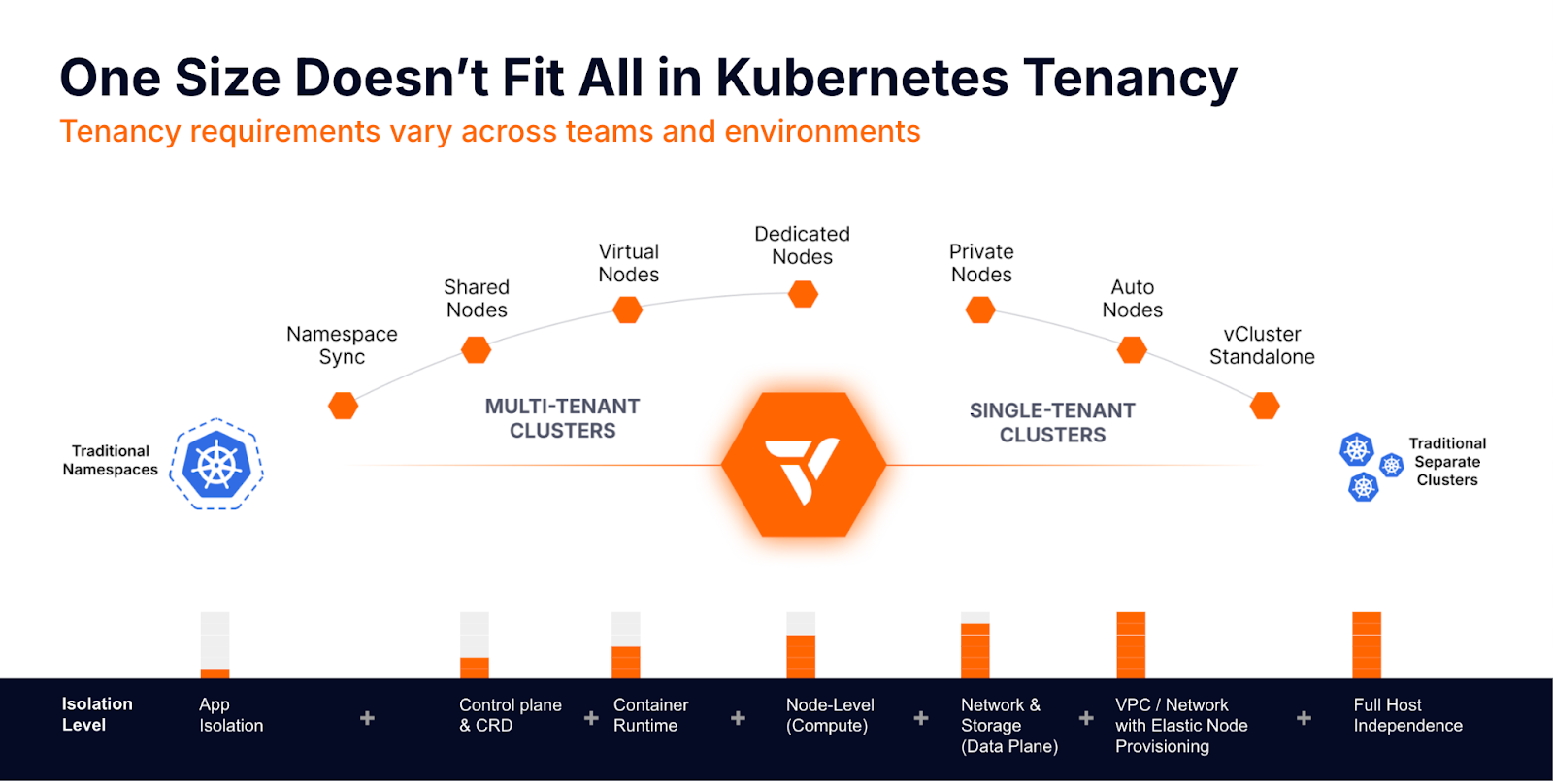

Instead of choosing between “shared but risky” and “isolated but expensive,” the tenancy layer allows platform teams to offer isolation as a spectrum, critical for AI and GPU use cases where requirements vary wildly.
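One way to picture “isolation as a spectrum” is as an ordered set of tiers, where the platform picks the least isolated tier that still meets a tenant’s needs. The sketch below is purely illustrative: the tier names, the `TenantNeeds` flags, and the `pick_tier` function are hypothetical and are not part of vCluster or Kubernetes.

```python
from dataclasses import dataclass
from enum import IntEnum


class IsolationTier(IntEnum):
    """Hypothetical tiers, ordered from most shared to most isolated."""
    NAMESPACE = 1                # shared control plane, shared nodes
    VIRTUAL_CLUSTER_SHARED = 2   # own control plane, shared host nodes
    VIRTUAL_CLUSTER_PRIVATE = 3  # own control plane, private nodes
    DEDICATED_CLUSTER = 4        # separately operated physical cluster


@dataclass(frozen=True)
class TenantNeeds:
    """Illustrative requirement flags; a real platform would track more."""
    needs_own_api_server: bool = False      # e.g. custom admission controllers
    needs_own_node_stack: bool = False      # e.g. custom GPU drivers or runtimes
    requires_separate_cluster: bool = False  # e.g. an external mandate


def pick_tier(needs: TenantNeeds) -> IsolationTier:
    """Return the least isolated tier that satisfies the stated needs."""
    if needs.requires_separate_cluster:
        return IsolationTier.DEDICATED_CLUSTER
    if needs.needs_own_node_stack:
        return IsolationTier.VIRTUAL_CLUSTER_PRIVATE
    if needs.needs_own_api_server:
        return IsolationTier.VIRTUAL_CLUSTER_SHARED
    return IsolationTier.NAMESPACE
```

For example, `pick_tier(TenantNeeds(needs_own_api_server=True))` returns `IsolationTier.VIRTUAL_CLUSTER_SHARED`, while a team that also needs its own GPU driver stack would land on the private-node tier.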

In this post, I’ll explore why the tenancy layer matters, where it fits in a Kubernetes stack, and why it’s becoming essential for AI- and GPU-driven platforms in 2026.

Most organizations start Kubernetes with a single shared cluster. Over time, as more teams adopt it, familiar challenges emerge:

GPU-backed workloads increase the blast radius of misconfiguration, raise the cost of mistakes, and demand stronger isolation boundaries. At the same time, platform teams are under pressure to provide self-service environments without becoming bottlenecks or cluster provisioning factories.

A tenancy layer solves these problems by becoming the boundary where:

With vCluster, each virtual cluster includes its own Kubernetes API server and control plane components. This delivers real control-plane isolation while still sharing host cluster compute when appropriate, allowing platforms to move beyond namespace-only multi-tenancy toward a model that feels like “a cluster per team,” without the cost or complexity of operating a physical cluster for each one.

The tenancy layer provides the critical missing middle ground in a modern Kubernetes platform.

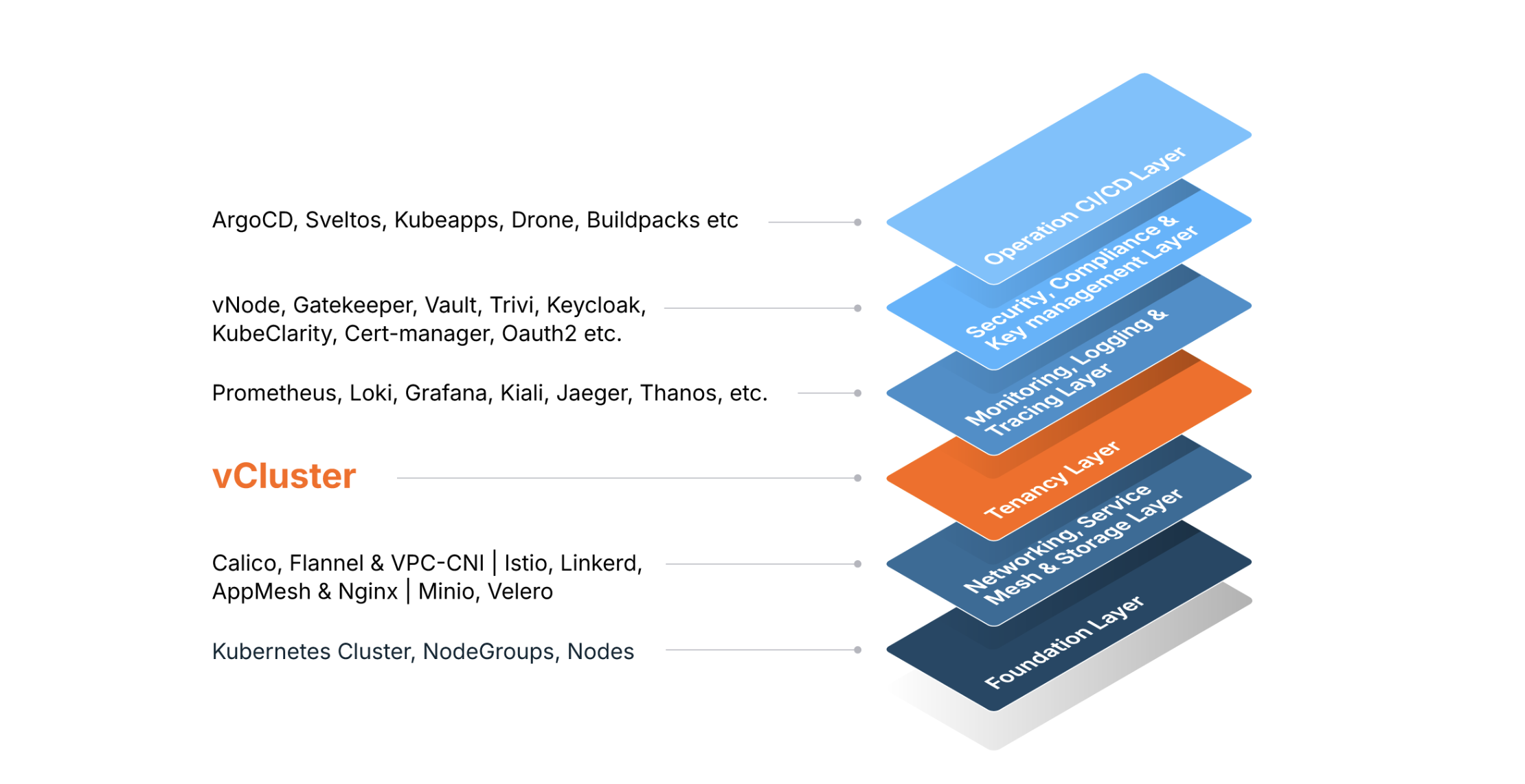

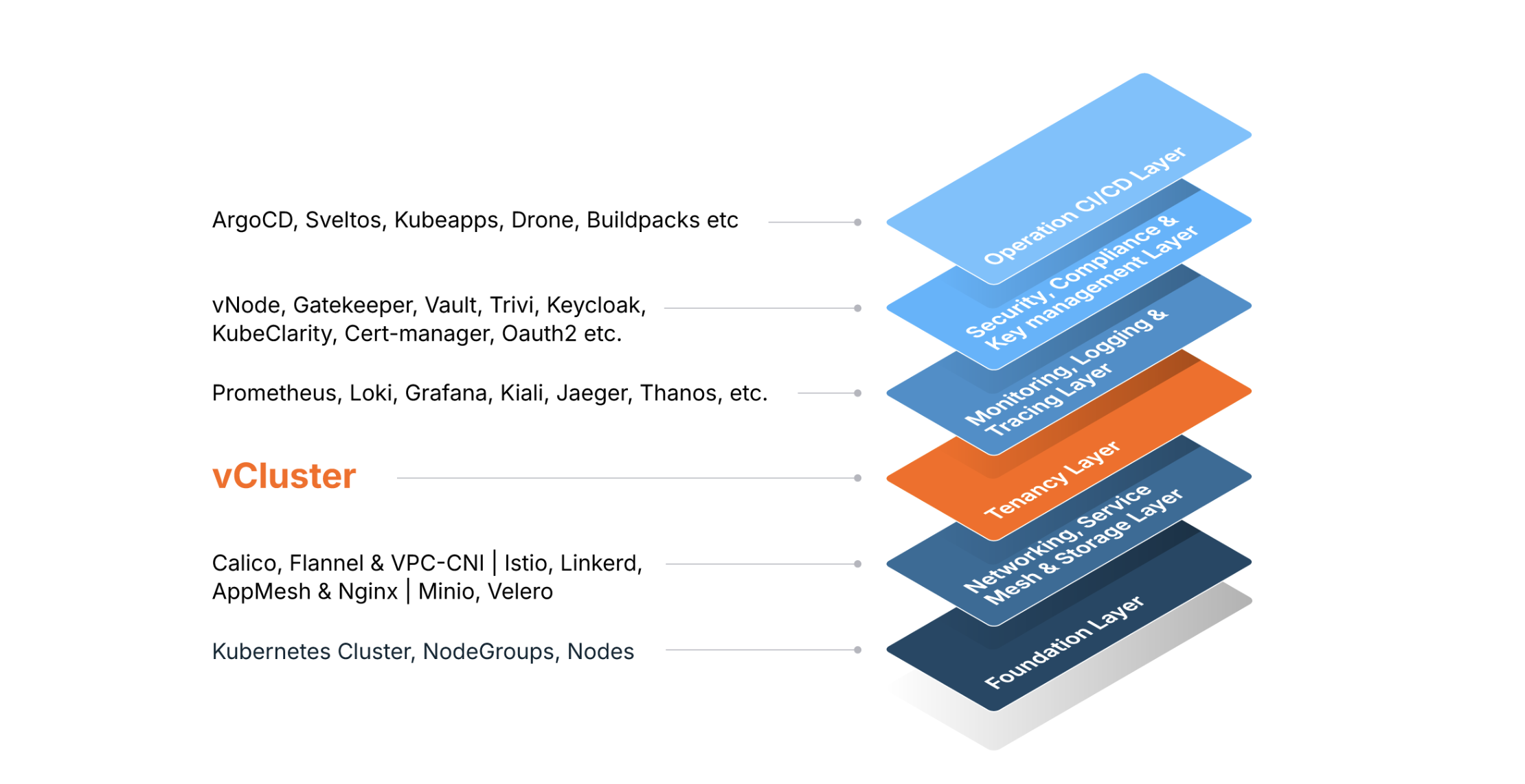

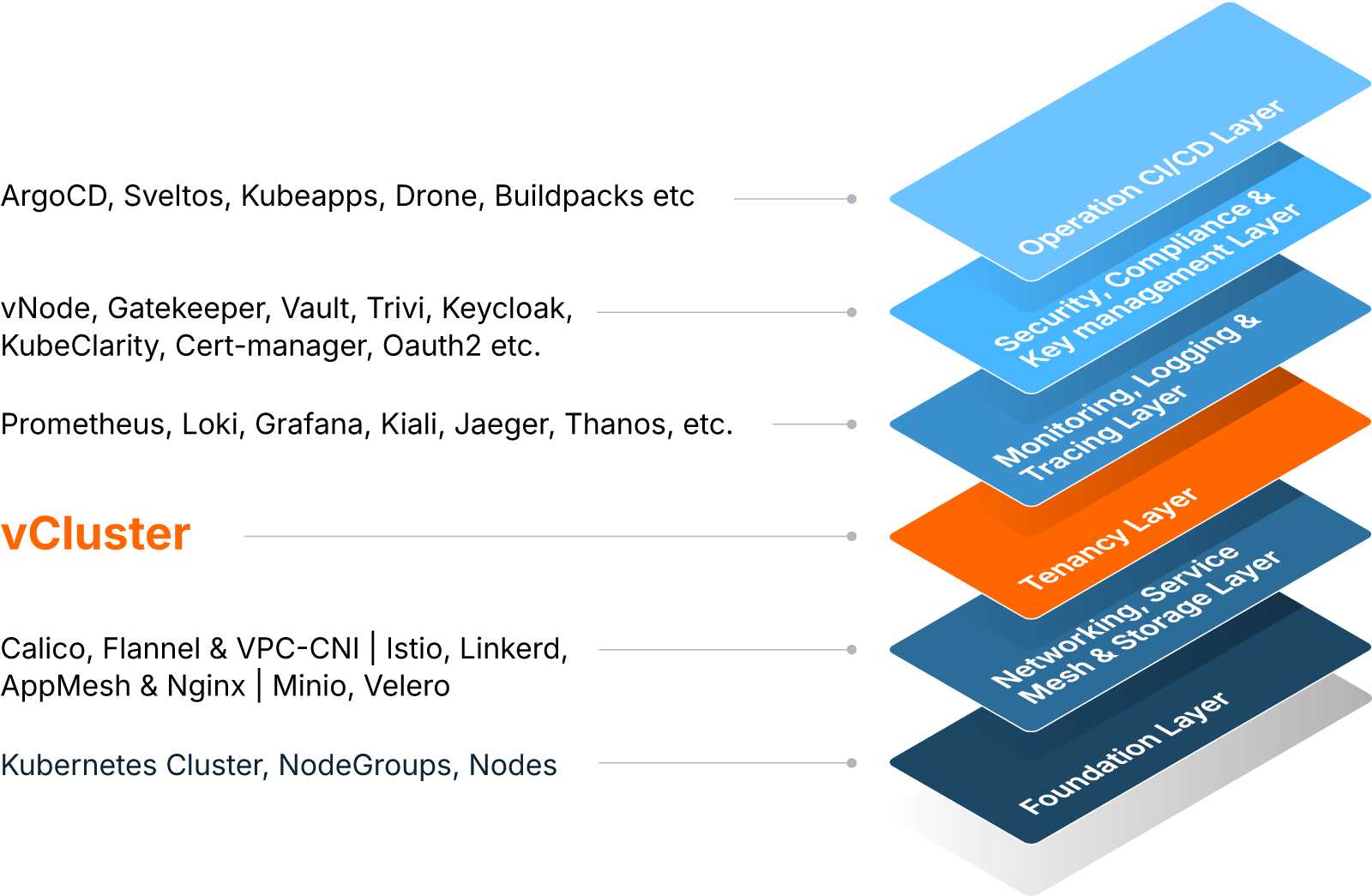

A typical platform stack has five core layers:

The Tenancy Layer sits neatly above Networking/Storage and below Observability/Security/Operations.

This placement matters.

Virtual clusters consume the underlying networking and storage infrastructure, but they fundamentally change how the layers above behave. Observability, security controls, and operational tooling can now be applied per tenant, inside a virtual cluster, while still being governed by global guardrails at the host-cluster level.

For AI platforms, this is especially important: different teams may need different logging pipelines, admission controls, or scheduling policies—without impacting others.
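The relationship between per-tenant choices and host-level guardrails can be sketched as a simple check: tenants choose their own settings, and the platform rejects anything outside global limits. This is a minimal sketch under stated assumptions, not how vCluster enforces policy. The `HostGuardrails` and `TenantRequest` types, the `check_request` function, and the example limits are all hypothetical; in a real cluster this role is typically played by quotas and admission policies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostGuardrails:
    """Global limits set once by the platform team (illustrative fields)."""
    max_gpus_per_tenant: int
    allowed_runtimes: frozenset


@dataclass(frozen=True)
class TenantRequest:
    """Settings a tenant picks for its own virtual cluster (illustrative)."""
    tenant: str
    gpus: int
    runtime: str


def check_request(request: TenantRequest, guardrails: HostGuardrails) -> list:
    """Return human-readable violations; an empty list means the request is allowed."""
    violations = []
    if request.gpus > guardrails.max_gpus_per_tenant:
        violations.append(
            f"{request.tenant}: requested {request.gpus} GPUs, "
            f"limit is {guardrails.max_gpus_per_tenant}"
        )
    if request.runtime not in guardrails.allowed_runtimes:
        violations.append(
            f"{request.tenant}: runtime '{request.runtime}' is not allowed"
        )
    return violations
```

With `HostGuardrails(max_gpus_per_tenant=4, allowed_runtimes=frozenset({"runc", "nvidia"}))`, a request for 2 GPUs on the `nvidia` runtime passes with no violations, while a request for 8 GPUs on an unlisted runtime produces two. Each tenant keeps its own choices, and the guardrails stay in one place.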

Virtual clusters still rely on the host cluster’s CNI, CSI, service networking, and PV infrastructure unless configured with private nodes that run separate CNIs/CSIs.

Each virtual cluster may run its own Prometheus instance, policy engines, or admission controllers—critical for isolating AI experimentation and compliance-sensitive workloads.

Backup and restore, upgrades, auto-scaling, and cost optimization are all more effective when applied at the virtual cluster level instead of globally.

vCluster provides multiple tenancy models, from lightweight shared tenancy to hardened, cluster-like isolation. This makes it uniquely suited to act as a first-class layer in your stack.

Key capabilities include:

Each virtual cluster has its own API server and control plane processes, giving tenants autonomy without giving them access to host cluster internals.

Depending on cost, security, and performance needs, teams can choose:

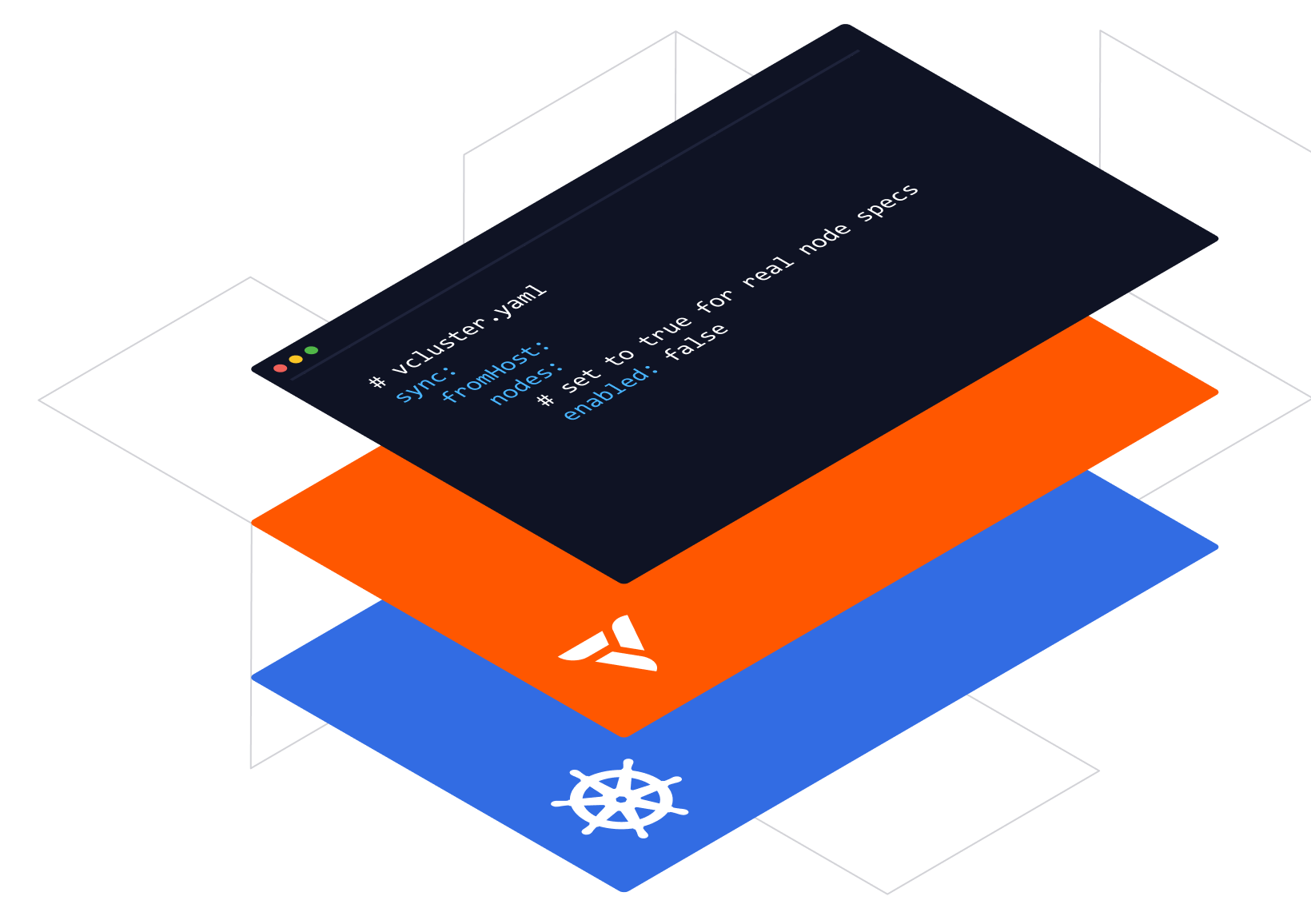

Virtual clusters are defined declaratively in vcluster.yaml, allowing platform teams to manage environments through GitOps workflows just like any other infrastructure.
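As a rough illustration of the GitOps workflow, a platform repository could render one config file per team from a small script and commit the results. The sketch below makes several assumptions: the team names and file layout are hypothetical, and the `controlPlane.backingStore.etcd.embedded.enabled` key path reflects my understanding of the vcluster.yaml schema, so verify it against the vCluster configuration reference for your version. The output is JSON, which YAML parsers generally accept, to avoid a third-party YAML dependency.

```python
import json


def render_vcluster_config(use_embedded_etcd: bool) -> str:
    """Render a minimal config document as JSON text (also parseable as YAML).

    The key path below is an assumption about the vcluster.yaml schema;
    check it against the official configuration reference before use.
    """
    config = {
        "controlPlane": {
            "backingStore": {
                "etcd": {"embedded": {"enabled": use_embedded_etcd}},
            },
        },
    }
    return json.dumps(config, indent=2, sort_keys=True)


def render_all(teams: dict) -> dict:
    """Map each hypothetical team to a repo path and its rendered config text."""
    return {
        f"tenants/{team}/vcluster.yaml": render_vcluster_config(embedded)
        for team, embedded in sorted(teams.items())
    }
```

Calling `render_all({"ml-research": True, "web": False})` yields two path-to-content entries that a GitOps tool could then reconcile. Keeping the rendering step this small leaves review and rollout to the usual pull request flow.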

Features like Sleep Mode, Auto Nodes, Private Nodes, embedded etcd, and external database connectors reduce costs and operational overhead while also enabling elastic scaling, stronger isolation, simpler lifecycle management, and secure self-service Kubernetes at scale.

vCluster integrates with:

This ensures that the tenancy layer cooperates smoothly with the layers above it, such as security and monitoring.

Adding a tenancy layer using vCluster is one of the highest-leverage improvements you can make to your Kubernetes platform. It provides the missing middle ground between simple namespace isolation and the complexity of managing dozens or hundreds of real clusters.

By placing vCluster between Networking/Storage and your Observability/Security layers, your platform gains:

This matters enormously for AI platforms:

With newer models like Private Nodes and Auto Nodes, GPU-backed virtual clusters can even behave like fully dedicated clusters—while still benefiting from centralized lifecycle management and automation.

In other words, the tenancy layer allows Kubernetes platforms to scale AI experimentation and production workloads without scaling operational chaos.

As AI workloads and GPU infrastructure become first-class citizens in Kubernetes, the ability to provide isolated, ephemeral, and elastic environments will define successful platform teams.

In 2026, the question won’t be:

“Should we add a tenancy layer?”

It will be:

“How did we ever run Kubernetes platforms without one?”

Deploy your first virtual cluster today.