1. Executive Summary: Why Neocloud Providers Choose vCluster

Neocloud providers, companies building modern GPU-accelerated infrastructure for AI workloads, are rapidly discovering that customers expect far more than raw compute. AI engineering teams want an experience similar to what leading hyperscalers offer: Kubernetes clusters they can control, predictable performance, secure isolation from other tenants, and compatibility with the full modern ML ecosystem.

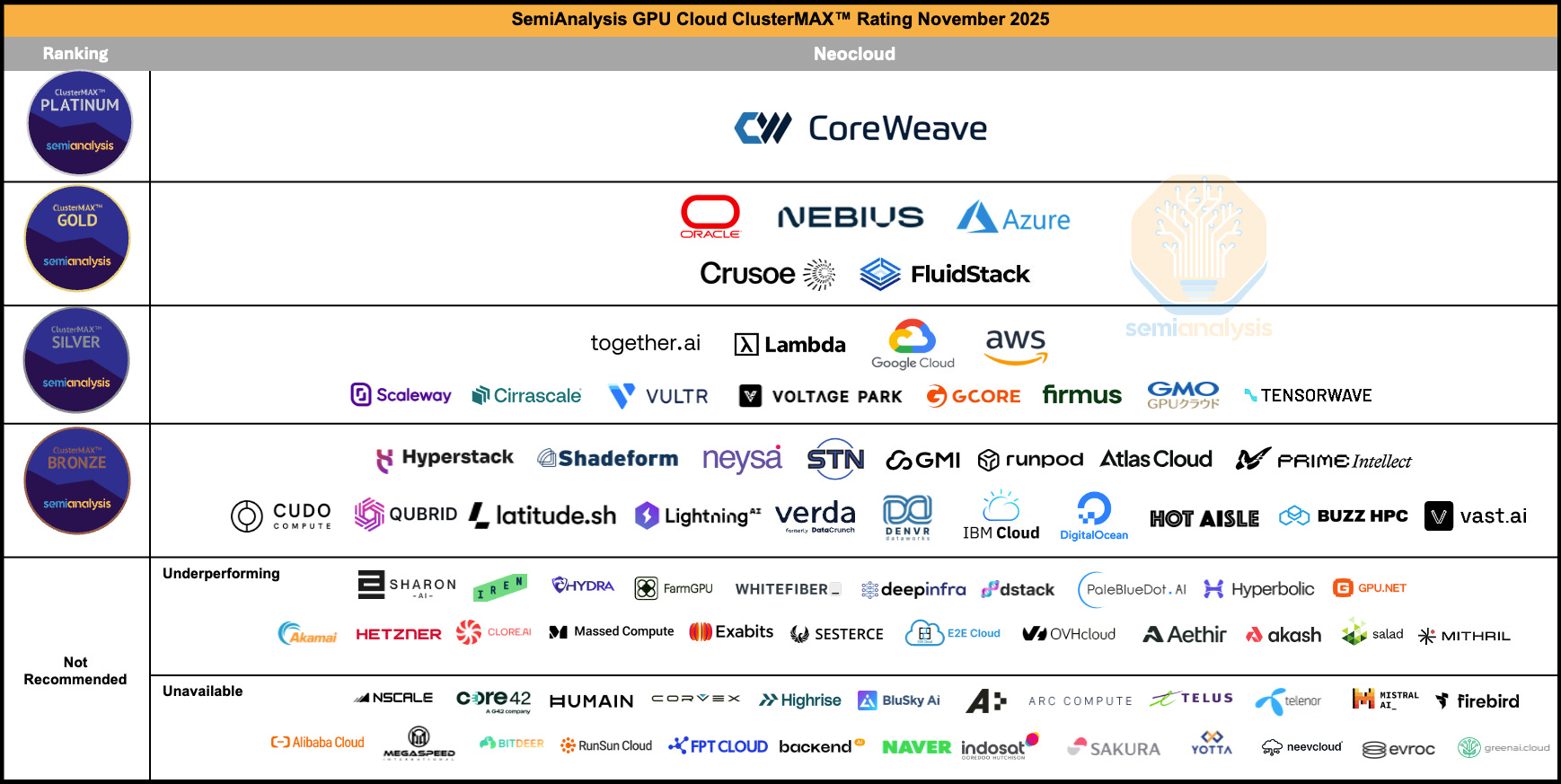

The ClusterMAX Kubernetes Expectations framework captures these requirements clearly. It defines the capabilities that enterprises assume a GPU cloud should provide, including reliable Kubernetes access, strong isolation, GPU Operator compatibility, NCCL performance, distributed training support, and cloud-grade observability.

Building a managed Kubernetes offering that meets these expectations traditionally requires deep engineering investment. Many providers end up deploying a separate physical cluster per customer, a pattern that is slow, expensive, and operationally complex.

vCluster provides a more efficient path.

By virtualizing the Kubernetes control plane, vCluster allows Neocloud providers to give every customer a dedicated Kubernetes cluster that behaves like a cloud-managed offering while running on a shared, high-performance GPU fleet.

The result is a Kubernetes experience that aligns with ClusterMAX expectations, supports modern AI workloads, and avoids the cost and complexity of operating dozens of physical clusters.

2. What Is ClusterMAX and Why It Matters

ClusterMAX, created by SemiAnalysis, is becoming a standard reference for comparing GPU cloud providers. It evaluates many aspects of cloud infrastructure, including accelerators, networking, and data center design, but its Kubernetes category has become especially important because Kubernetes is the default interface for AI training, fine-tuning, and inference workloads.

The framework refers to these providers as Neoclouds, a broad category that includes both established GPU cloud providers and emerging platforms aiming to deliver higher-level services such as managed Kubernetes.

When evaluating a new GPU cloud, enterprise customers frequently ask:

- Can I get a secure, isolated Kubernetes cluster?

- Does your cluster support GPU Operator, NCCL, and distributed training?

- Is storage reliable and compatible with my workloads?

- Will my ML jobs behave the same way they do on EKS or GKE?

ClusterMAX provides a consistent way to answer these questions. Providers who align with its Kubernetes expectations demonstrate enterprise-grade readiness, GPU-aware Kubernetes, AI workload compatibility, and operational maturity.

vCluster enables Neocloud providers to meet these expectations quickly without managing a large fleet of physical clusters.

3. How vCluster Enables Managed Kubernetes Offerings for GPU Cloud Providers

Neocloud providers need a Kubernetes experience that behaves like EKS, GKE, or AKS but is optimized for high-performance GPU workloads. vCluster enables this through three architectural pillars.

3.1 Fully Isolated Kubernetes Clusters for Every Customer

Every vCluster is a dedicated Kubernetes control plane with:

- Its own API server

- Its own RBAC and authentication

- Its own CRDs, operators, and controllers

- Its own upgrade lifecycle

This gives each tenant the autonomy and predictability of a physical cluster without forcing providers to operate one actual cluster per customer. Control plane isolation is central to ClusterMAX’s multi-tenancy expectations and is essential for AI and ML teams migrating from hyperscalers.
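
As a rough illustration of per-tenant provisioning, the sketch below assembles the commands a provider's automation might run to create and connect to one tenant's virtual cluster. It assumes the open source `vcluster` CLI and its `create` and `connect` subcommands. The tenant name, the `-k8s` suffix, and the `tenant-` namespace prefix are hypothetical conventions chosen for this example, not part of vCluster. The commands are only built and printed, never executed.

```python
import shlex


def tenant_cluster_commands(tenant: str) -> list[list[str]]:
    """Return argv lists that create, then connect to, one tenant's virtual cluster."""
    name = f"{tenant}-k8s"          # hypothetical naming scheme for the virtual cluster
    namespace = f"tenant-{tenant}"  # hypothetical host namespace, one per tenant
    return [
        ["vcluster", "create", name, "--namespace", namespace],
        ["vcluster", "connect", name, "--namespace", namespace],
    ]


if __name__ == "__main__":
    # Print the commands instead of running them.
    for argv in tenant_cluster_commands("acme"):
        print(shlex.join(argv))
```

Keeping one host namespace per tenant is a design choice of this sketch: it gives the provider a single place to attach quotas and policies for that tenant's control plane.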

3.2 Native Integration With GPU Infrastructure and AI Workloads

vCluster virtualizes only the control plane. Workloads run directly on the provider’s GPU nodes, which means:

- GPU Operator installs and functions as expected

- CUDA, NCCL, MIG, and GPU topology behave normally

- HPC networking and Network Operator work correctly when using Private Nodes

- Distributed AI frameworks such as MPI, TorchElastic, Ray, and JAX perform as they would on a native cluster

- No overhead is added to GPU scheduling or GPU data paths

Compatibility with the GPU stack is essential for meeting the GPU-specific criteria in ClusterMAX.
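
Because workloads are scheduled onto real GPU nodes, a tenant would request GPUs the same way as on any Kubernetes cluster that runs the NVIDIA device plugin. The following is a minimal sketch, assuming the standard `nvidia.com/gpu` extended resource. The pod name and container image are placeholders, and `gpu_pod_manifest` is a hypothetical helper written for this example.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a Pod manifest that requests `gpus` NVIDIA GPUs for a single container."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "main",
                    "image": image,
                    "command": ["nvidia-smi"],
                    # Extended resources such as GPUs are requested under limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # The image below is a placeholder, not a real registry path.
    manifest = gpu_pod_manifest("gpu-smoke-test", "example.com/cuda-base:placeholder", 1)
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be piped to `kubectl apply -f -` using the tenant's kubeconfig, since `kubectl` accepts JSON manifests as well as YAML.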

3.3 Flexible Data Plane Isolation: Private Nodes, Auto Nodes, and Standalone Clusters

GPU workloads require careful isolation. vCluster provides three models that let providers offer strong multi-tenancy without relying on shared nodes for GPU workloads.

Private Nodes

A tenant’s vCluster runs on exclusive GPU nodes. Ideal for:

- Enterprise AI workloads

- Multi-node distributed training

- Customers requiring predictable performance or isolation guarantees

Auto Nodes

Nodes are assigned to vClusters from a shared GPU pool as needed. Ideal for:

- Startups and research workloads

- Elastic GPU consumption

- Lower-cost Kubernetes tiers with real isolation
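
The Auto Nodes idea of handing out nodes from a shared pool can be pictured with a toy model. This is not how vCluster implements Auto Nodes; `GpuNodePool` and the node names are hypothetical, and the sketch only illustrates the invariant that each node belongs to at most one virtual cluster at a time.

```python
class GpuNodePool:
    """Toy shared pool: every node is either free or assigned to exactly one vCluster."""

    def __init__(self, nodes):
        self.free = list(nodes)   # nodes currently available to any tenant
        self.assigned = {}        # node name -> owning vCluster name

    def claim(self, vcluster: str, count: int) -> list:
        """Assign `count` free nodes exclusively to `vcluster`."""
        if count > len(self.free):
            raise RuntimeError("not enough free GPU nodes in the pool")
        taken = [self.free.pop(0) for _ in range(count)]
        for node in taken:
            self.assigned[node] = vcluster
        return taken

    def release(self, vcluster: str) -> list:
        """Return all of a vCluster's nodes to the free pool."""
        returned = [n for n, owner in self.assigned.items() if owner == vcluster]
        for node in returned:
            del self.assigned[node]
            self.free.append(node)
        return returned


if __name__ == "__main__":
    pool = GpuNodePool(["gpu-a", "gpu-b", "gpu-c"])
    print(pool.claim("team-1", 2))
    print(pool.claim("team-2", 1))
    print(pool.release("team-1"))
```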

vCluster Standalone

A fully independent Kubernetes cluster for the tenant, both control plane and data plane, managed with the same automation.

Ideal for regulated enterprises or customers who need absolute separation.

These models enable providers to deliver an EKS-like managed Kubernetes product optimized for GPU economics.
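
One way a provider might map customer requirements onto the three models is a simple decision rule. The sketch below is illustrative only: `TenantRequirements` and `choose_isolation_model` are hypothetical names, and the two boolean criteria are a simplification of the "ideal for" guidance above.

```python
from dataclasses import dataclass


@dataclass
class TenantRequirements:
    needs_full_separation: bool  # e.g. a regulated enterprise needing its own control and data plane
    needs_dedicated_nodes: bool  # e.g. predictable performance or multi-node distributed training


def choose_isolation_model(req: TenantRequirements) -> str:
    """Pick the isolation model, checking the strictest requirement first."""
    if req.needs_full_separation:
        return "vCluster Standalone"
    if req.needs_dedicated_nodes:
        return "Private Nodes"
    return "Auto Nodes"


if __name__ == "__main__":
    startup = TenantRequirements(needs_full_separation=False, needs_dedicated_nodes=False)
    print(choose_isolation_model(startup))
```

Checking the strictest requirement first means a tenant that needs both full separation and dedicated nodes lands on the standalone model.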

4. Full Mapping Table: ClusterMAX Criteria and How vCluster Meets Them

The mapping covers the following ClusterMAX categories:

- Core Kubernetes Configuration Requirements

- Storage and Networking

- GPU and Compute Infrastructure

- AI and ML Workload Support

- GPU Specific Kubernetes Features

- Networking, Ingress, and Traffic Management

- Integration Requirements

- Standard Installation Components

- Support and Operations

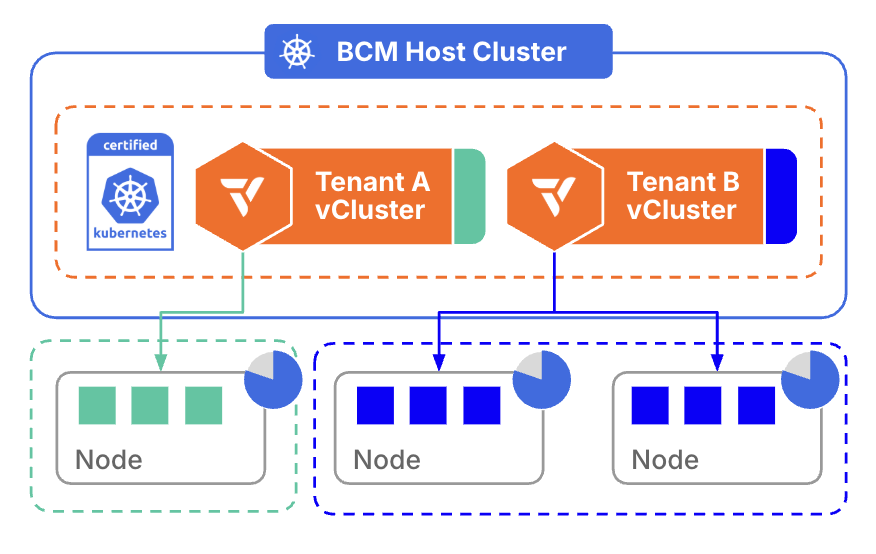

5. Architecture Overview: How vCluster Supports a Managed Service

A typical GPU cloud built on vCluster includes:

The Host GPU Cluster

High-performance GPU nodes, HPC networking, CSI storage, observability stacks, and node lifecycle automation.

vCluster Platform

Multi-tenancy management, templates, RBAC and SSO integration, lifecycle automation, upgrades, and provisioning logic.

Tenant vClusters

Each customer receives their own isolated Kubernetes control plane with the ability to install operators and tools without affecting others.

This architecture combines isolation, efficiency, and performance consistency into a unified managed Kubernetes experience.

6. Benefits for Neocloud Providers

Delivering a managed Kubernetes service is essential for Neoclouds that want to attract enterprise AI workloads or improve their position in the ClusterMAX rankings. vCluster provides the missing control plane and multi-tenancy layer that allows providers to differentiate on platform capability rather than simply hardware availability. By virtualizing the Kubernetes control plane, vCluster enables Neoclouds to move up the value chain, strengthen their competitive position, and deliver a more complete cloud experience to customers.

Below are the key advantages that vCluster unlocks for Neocloud providers.

6.1 Faster Time to Market

Neoclouds can introduce a fully managed Kubernetes offering in less time than it would take to build one internally.

- vCluster removes the need to develop complex control plane automation and multi-tenant logic.

- Providers deliver an EKS-like cluster experience without needing hyperscaler-level scale or engineering resources.

This accelerates platform expansion and shortens the path to new customer revenue.

6.2 Strong Isolation Without Extra Clusters

Control plane isolation gives each customer a dedicated Kubernetes environment while avoiding cluster sprawl.

- Tenants experience a real cluster, not a namespace partition.

- Private Nodes and Auto Nodes provide data plane isolation without deploying a new physical cluster.

This keeps operations simpler and more cost efficient.

6.3 AI Ready Kubernetes

For AI teams, predictability and compatibility matter as much as performance.

- GPU Operator, NCCL, MIG, and distributed training work natively inside vClusters.

- Customers migrate workloads from EKS, GKE, or on-premises environments with no refactoring.

This improves adoption and reduces migration friction.

6.4 Operational Efficiency at Scale

vCluster centralizes Kubernetes complexity so providers can scale their services efficiently.

- Many vClusters can coexist on a single host cluster.

- Monitoring, upgrades, and policy enforcement remain unified.

- Providers avoid maintaining dozens or hundreds of standalone clusters.

This reduces infrastructure overhead and simplifies long-term operations.

6.5 Better Alignment With ClusterMAX Expectations

ClusterMAX rewards Neoclouds that invest in their Kubernetes layer.

- vCluster satisfies multi-tenancy, GPU readiness, observability, and operational requirements.

- Providers advance their ClusterMAX standing by delivering a modern managed Kubernetes offering.

This elevates platform credibility and increases customer trust.

7. Deliver the Managed Kubernetes Layer That Elevates Your ClusterMAX Rating

Many Neocloud providers find that their ClusterMAX ranking is limited not by hardware or networking, but by the absence of a true managed Kubernetes layer. ClusterMAX rewards platforms that provide isolated control planes, reliable Kubernetes behavior, GPU Operator maturity, NCCL performance, and enterprise-grade tenant boundaries.

vCluster gives providers a fast and proven way to deliver this layer.

By virtualizing the control plane, providers can offer each customer a fully isolated, upstream-compatible Kubernetes cluster that supports GPU Operator, distributed AI workloads, and the operational features that ClusterMAX evaluates. Instead of managing dozens of physical clusters, providers run a unified GPU fleet and deliver the experience customers expect from a modern cloud provider.

If your goal is to rise in the ClusterMAX rankings, this is the most impactful advancement you can add to your platform.

See how vCluster can elevate your Kubernetes offering and strengthen your ClusterMAX position.

Deploy your first virtual cluster today.